Quick Enquiry Form

Categories

- Agile and Scrum (226)

- BigData (36)

- Business Analysis (94)

- Cirtix Client Administration (54)

- Cisco (63)

- Cloud Technology (96)

- Cyber Security (56)

- Data Science and Business Intelligence (54)

- Developement Courses (53)

- DevOps (16)

- Digital Marketing (58)

- Emerging Technology (198)

- IT Service Management (76)

- Microsoft (54)

- Other (395)

- Project Management (502)

- Quality Management (143)

- salesforce (67)

Latest posts

5 Types of Feasibility Studies..

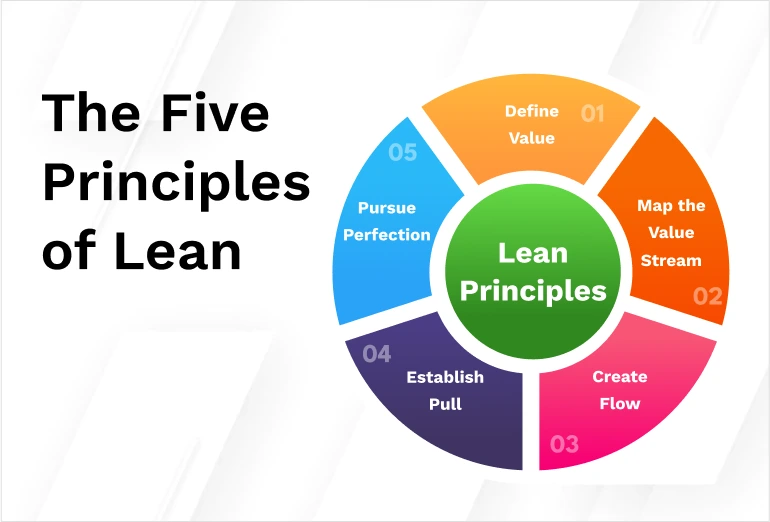

The 5 Core Principles of..

Top 11 Statistical Tools for..

Free Resources

Subscribe to Newsletter

COBIT® 5 Foundation and ITSM: Achieving Synergy Together

The convergence of COBIT® 5 Foundation and IT Service Management (ITSM) represents a dynamic and synergistic approach to enhancing organizational governance and optimizing IT service delivery. COBIT® 5, developed by the Information Systems Audit and Control Association (ISACA), is a globally recognized framework that provides a comprehensive set of principles, practices, and analytical tools for the effective governance and management of enterprise IT. On the other hand, IT Service Management focuses on aligning IT services with the needs of the business, emphasizing the delivery of high-quality services that meet customer expectations.

In recent years, organizations have increasingly recognized the need to integrate their governance and service management practices. Combining COBIT® 5 with ITSM aligns the two strategically and helps businesses build a more coherent and efficient IT environment. This integration matters most in today's complex, fast-moving digital landscape, where organizations must contend with cybersecurity threats, regulatory compliance, and a growing demand for innovative IT services.

COBIT® 5's emphasis on stakeholder value, risk optimization, and continual improvement aligns closely with the core objectives of IT Service Management. By combining the two, organizations can build a foundation not only for compliance and risk mitigation but also for delivering high-quality, customer-centric IT services. The combination helps businesses streamline their processes, improve decision-making, and encourage collaboration across functional areas.

Table of contents

- Overview of COBIT® 5 Foundation
- Essentials of IT Service Management (ITSM)
- Governance and Risk Management Integration
- Implementing the Synergy: Step-by-Step Guide
- Measuring Success: Key Performance Indicators (KPIs)
- Conclusion

Overview of COBIT® 5 Foundation

COBIT® 5, the framework covered by the COBIT® 5 Foundation certification, was developed by the Information Systems Audit and Control Association (ISACA) to guide organizations in the effective governance and management of information technology (IT). At its core, COBIT® (Control Objectives for Information and Related Technologies) stresses aligning IT processes with business objectives so that IT activities contribute directly to organizational success. The framework provides principles, practices, and enablers that help enterprises optimize their IT capabilities, manage the associated risks, and deliver value to stakeholders.

The COBIT® 5 framework is structured around five principles: meeting stakeholder needs, covering the enterprise end-to-end, applying a single integrated framework, enabling a holistic approach, and separating governance from management. Together, these principles guide organizations in building a governance and management system that is efficient and adaptable as IT and business conditions change.

In short, COBIT® 5 offers a structured approach to IT governance built on stakeholder value, sound practice, and adaptability. Organizations that adopt it gain a foundation for effective governance, risk management, and continuous improvement of their IT processes, which in turn supports overall business performance.

Essentials of IT Service Management (ITSM)

IT Service Management (ITSM) comprises the principles and practices organizations adopt to deliver and support IT services that are aligned with business objectives. At its core, ITSM is about meeting user needs, optimizing service quality, and improving organizational efficiency. Its essentials are commonly described through the stages of the ITIL® service lifecycle (ITIL v3/ITIL 2011):

Service Strategy constitutes a critical pillar of ITSM, involving the development of a comprehensive strategy aligned with business goals. This phase entails understanding customer needs, defining service offerings, and formulating a strategic roadmap for delivering IT services that contribute directly to the success of the organization.

In the Service Design phase, the focus shifts to creating service solutions that meet the requirements outlined in the service strategy. This encompasses designing processes, technologies, and other essential elements necessary for the efficient and effective delivery of high-quality services. Service design ensures that IT services are not only functional but also meet the broader objectives of the organization.

Service Transition is a crucial aspect of ITSM that involves planning and managing changes to services and service management processes. This phase ensures a smooth transition of new or modified services into the operational environment while minimizing disruptions. It encompasses activities such as change management, release and deployment management, and knowledge management.

Service Operation covers the day-to-day delivery and support of services at the agreed levels. It includes processes such as incident management, problem management, request fulfilment, event management, and access management, which restore service quickly when things go wrong and keep disruptions to users to a minimum.

Continual Service Improvement (CSI) runs across the whole lifecycle, emphasizing ongoing improvement of services and processes. Through regular assessments, feedback mechanisms, and learning from experience, organizations identify areas for improvement and sustain a cycle of better service delivery and operational efficiency. Together, these stages give organizations a holistic framework for aligning IT services with business goals, raising customer satisfaction, and adapting to changing technology and user expectations.

Governance and Risk Management Integration

Integrating governance and risk management starts with a culture of risk awareness and accountability. Encouraging employees at all levels to recognize and report risks makes risk mitigation a shared responsibility. By building risk considerations into the organization's values and day-to-day operations, companies can take a more proactive and resilient stance toward potential challenges.

Integration also requires risk management frameworks that align with governance structures. This includes defining clear roles and responsibilities for risk management at each level of the organization, establishing channels for sharing risk information, and building risk assessments into strategic planning. Such frameworks not only identify potential risks but also provide a structured way to manage and monitor them over time.
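To make the idea of structured risk assessment with clear ownership more concrete, here is a minimal Python sketch of a risk register. It assumes a common likelihood × impact scoring heuristic on 1 to 5 scales and an escalation threshold of 12; neither the scales, the threshold, nor the hypothetical `Risk` and `prioritise` names come from COBIT® 5 or this article, and the entries are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a hypothetical IT risk register."""

    risk_id: str
    description: str
    owner: str       # accountable role, reflecting clear risk ownership
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1-5 scales
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Likelihood x impact heuristic (an assumption, not mandated by COBIT 5)
        return self.likelihood * self.impact


def prioritise(register: list[Risk], threshold: int = 12) -> list[Risk]:
    """Return risks scoring at or above the threshold, highest score first."""
    flagged = [risk for risk in register if risk.score >= threshold]
    return sorted(flagged, key=lambda risk: risk.score, reverse=True)


# Illustrative entries only
register = [
    Risk("R1", "Unpatched servers", "Infrastructure Manager", 4, 4),
    Risk("R2", "Unapproved production changes", "Change Manager", 3, 5),
    Risk("R3", "Service desk staff turnover", "Service Desk Lead", 2, 3),
]

for risk in prioritise(register):
    print(risk.risk_id, risk.owner, risk.score)
# prints:
# R1 Infrastructure Manager 16
# R2 Change Manager 15
```

Keeping an owner on every entry means each escalated risk already names the role expected to act on it, which is what lets the register feed the communication channels the framework depends on.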

Continuous learning and improvement are inherent components of successful integration. Organizations should conduct periodic reviews and audits to evaluate the effectiveness of their integrated governance and risk management approach. Lessons learned from incidents and successes should be incorporated into future strategies, fostering a dynamic and adaptive governance structure that evolves in tandem with the changing risk landscape.

Governance and risk management integration is a multifaceted and ongoing process that requires a commitment to cultural change, technological innovation, and continuous improvement. By weaving risk considerations into the fabric of governance structures, organizations can enhance their resilience, make more informed decisions, and navigate an increasingly complex and uncertain business environment.

Implementing the Synergy: Step-by-Step Guide

Implementing the synergy between COBIT® 5 and IT Service Management (ITSM) calls for a methodical, well-coordinated approach. It begins with a thorough assessment of the current state of IT governance and service management, which gives organizations a baseline understanding of existing processes and their maturity. This assessment sets the stage for the integration work that follows and highlights the organization's strengths and areas for improvement.
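One way to record the outcome of a current-state assessment is as a capability gap analysis. The Python sketch below assumes the 0 to 5 capability scale of the COBIT® 5 Process Assessment Model and uses real COBIT® 5 process IDs (BAI06 Manage Changes, DSS02 Manage Service Requests and Incidents, DSS03 Manage Problems, APO09 Manage Service Agreements), but the current and target ratings and the `capability_gaps` helper are hypothetical.

```python
# Capability levels used by the COBIT 5 Process Assessment Model
CAPABILITY_LEVELS = {
    0: "Incomplete",
    1: "Performed",
    2: "Managed",
    3: "Established",
    4: "Predictable",
    5: "Optimizing",
}


def capability_gaps(current: dict[str, int], target: dict[str, int]) -> list[tuple[str, int]]:
    """Return (process, gap) pairs where current capability is below target, largest gap first."""
    gaps = []
    for process, wanted in target.items():
        have = current.get(process, 0)  # assumption: unassessed processes count as level 0
        if have < wanted:
            gaps.append((process, wanted - have))
    # sorted() is stable, so equal gaps keep the order in which targets were listed
    return sorted(gaps, key=lambda item: item[1], reverse=True)


# Hypothetical assessment results and targets
current = {"BAI06": 2, "DSS02": 3, "DSS03": 1, "APO09": 2}
target = {"BAI06": 3, "DSS02": 3, "DSS03": 3, "APO09": 3}

for process, gap in capability_gaps(current, target):
    level = current.get(process, 0)
    print(f"{process}: level {level} ({CAPABILITY_LEVELS[level]}), "
          f"target {target[process]} ({CAPABILITY_LEVELS[target[process]]}), gap {gap}")
```

With these illustrative ratings, DSS03 surfaces first with a two-level gap, while DSS02, already at its target, is left out; ranking gaps this way gives the objectives-and-scope step a concrete starting list.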

Next, organizations define the integration's objectives and scope, stating the outcomes and benefits they expect from combining COBIT® 5 and ITSM. A clear scope keeps the effort focused on specific goals and guards against scope creep. Stakeholder engagement is essential at this stage: involving key representatives from IT and business units brings in diverse perspectives, keeps the work aligned with organizational goals, and builds the buy-in and support that successful integration depends on.

Key performance indicators (KPIs) are established to measure the success of the integration, encompassing governance effectiveness, service delivery efficiency, and overall alignment with organizational objectives. Regular monitoring and assessment of these indicators offer valuable insights into the performance and impact of the integrated framework, guiding ongoing improvements.
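A KPI becomes useful for monitoring once it has a target and a known direction (whether higher or lower is better). The following minimal sketch assumes that shape for each indicator; the `Kpi` class, the indicator names, and every target and actual value are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    """A hypothetical KPI with a target and a direction of improvement."""

    name: str
    target: float
    actual: float
    higher_is_better: bool = True

    def on_target(self) -> bool:
        # Compare against the target in the direction that counts as "good"
        if self.higher_is_better:
            return self.actual >= self.target
        return self.actual <= self.target


# Illustrative scorecard covering governance, service delivery, and decision speed
scorecard = [
    Kpi("Governance compliance rate (%)", target=95.0, actual=92.5),
    Kpi("Changes delivered without incident (%)", target=90.0, actual=93.0),
    Kpi("Mean decision lead time (days)", target=10.0, actual=8.0, higher_is_better=False),
]

for kpi in scorecard:
    status = "on target" if kpi.on_target() else "needs attention"
    print(f"{kpi.name}: {kpi.actual} vs target {kpi.target} -> {status}")
```

Storing the direction alongside the target keeps "lower is better" measures such as lead time from being misread as failures during regular reviews.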

Implementation efforts extend to training programs and change management initiatives, ensuring that teams are equipped with the necessary knowledge and skills to operate within the integrated framework. Pilot programs may be initiated in specific departments or business units to test the integrated framework in a controlled environment, allowing for the identification and resolution of potential challenges before full-scale deployment. Continuous monitoring and evaluation mechanisms are established to assess the ongoing effectiveness of the integrated COBIT® 5 Foundation and ITSM framework, incorporating feedback from users and stakeholders to drive necessary adjustments.

Finally, organizations cultivate a culture of continuous improvement, encouraging teams to identify opportunities for enhancement and setting up regular reviews and refinements of the integrated framework. This adaptive approach helps sustain the integration over time, keeping IT services aligned with business objectives and the organization resilient. Taken together, these steps give organizations a structured path to integrating COBIT® 5 and IT Service Management, strengthening governance practices and improving the delivery of IT services.

Measuring Success: Key Performance Indicators (KPIs)

Measuring the success of integrating COBIT® 5 and IT Service Management (ITSM) depends on choosing Key Performance Indicators (KPIs) that cover the critical aspects of aligning governance with service delivery. One key category is strategic alignment: how effectively the integrated framework contributes to organizational objectives. These indicators show whether governance practices and IT service delivery support the organization's broader strategic vision, reflecting the close link between IT initiatives and business goals.

Governance effectiveness serves as another crucial dimension for assessing success. KPIs within this category focus on evaluating the efficiency and efficacy of governance processes. Metrics such as the speed of decision-making, compliance levels, and the responsiveness of governance structures offer insights into how well the organization is managing its IT resources and risks. These indicators serve as a barometer for the overall health and effectiveness of the governance component within the integrated framework.
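Two of the governance metrics named above, decision speed and compliance level, reduce to simple calculations once the underlying records exist. This sketch assumes decisions are logged with the dates they were raised and decided, and that compliance is measured as the share of tested controls that passed; the function names, control IDs, and data are hypothetical.

```python
from datetime import date
from statistics import mean


def mean_decision_days(decisions: list[tuple[date, date]]) -> float:
    """Average number of days between a governance item being raised and decided."""
    if not decisions:
        raise ValueError("no decisions to measure")
    return float(mean((decided - raised).days for raised, decided in decisions))


def compliance_rate(control_results: dict[str, bool]) -> float:
    """Percentage of tested controls that passed."""
    if not control_results:
        raise ValueError("no control results to measure")
    return 100 * sum(control_results.values()) / len(control_results)


# Hypothetical data: (date raised, date decided) for three governance decisions
decisions = [
    (date(2024, 1, 8), date(2024, 1, 12)),   # 4 days
    (date(2024, 1, 15), date(2024, 1, 29)),  # 14 days
    (date(2024, 2, 1), date(2024, 2, 7)),    # 6 days
]
# Hypothetical control test results (True = passed)
controls = {"CTRL-01": True, "CTRL-02": True, "CTRL-03": False, "CTRL-04": True}

print(f"Mean decision time: {mean_decision_days(decisions)} days")  # 8.0 days
print(f"Compliance rate: {compliance_rate(controls):.1f}%")          # 75.0%
```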

User satisfaction and experience KPIs show how the integrated framework affects end-users. Measures such as user satisfaction survey scores, feedback on service quality, and user adoption rates combine quantitative and qualitative evidence of how well the integrated approach meets stakeholder expectations. These indicators matter because the integration ultimately succeeds only if end-users find the resulting services satisfactory and engage with them.
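The quantitative side of these indicators is simple arithmetic. The sketch below assumes a 1 to 5 survey scale where responses of 4 or 5 count as satisfied (a common CSAT convention, not something the article prescribes) and measures adoption as active users divided by eligible users; the helper names and all figures are hypothetical.

```python
def csat_percent(scores: list[int], satisfied_from: int = 4) -> float:
    """Share of survey responses at or above `satisfied_from`, as a percentage."""
    if not scores:
        raise ValueError("no survey responses")
    if any(not 1 <= s <= 5 for s in scores):
        raise ValueError("scores must be on the assumed 1-5 scale")
    satisfied = sum(1 for s in scores if s >= satisfied_from)
    return 100 * satisfied / len(scores)


def adoption_rate(active_users: int, eligible_users: int) -> float:
    """Percentage of eligible users actively using the service."""
    if eligible_users <= 0:
        raise ValueError("eligible_users must be positive")
    return 100 * active_users / eligible_users


# Hypothetical survey responses and usage figures
responses = [5, 4, 3, 5, 2, 4, 5, 1]
print(f"CSAT: {csat_percent(responses):.1f}%")      # 62.5%
print(f"Adoption: {adoption_rate(340, 400):.1f}%")  # 85.0%
```

Numbers like these complement rather than replace free-text feedback on service quality, which is where the reasons behind a low score usually surface.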

A continuous improvement mindset is integral to sustained success, and corresponding KPIs focus on the organization's ability to adapt and refine the integrated framework over time. Metrics related to the frequency and effectiveness of updates and refinements, as well as the organization's agility in responding to emerging challenges, highlight the dynamic nature of governance and service management integration. Success, in this context, is not merely a destination but an ongoing journey of refinement and adaptation to ensure that governance and service management practices evolve in tandem with organizational goals and the evolving IT landscape.

How to obtain COBIT® 5 Foundation certification?

We are an Education Technology company providing certification training courses to accelerate the careers of working professionals worldwide. We deliver training through instructor-led classroom workshops, instructor-led live virtual sessions, and self-paced e-learning courses.

We have successfully conducted training sessions in 108 countries across the globe and enabled thousands of working professionals to enhance the scope of their careers.

Our enterprise training portfolio includes in-demand and globally recognized certification training courses in Project Management, Quality Management, Business Analysis, IT Service Management, Agile and Scrum, Cyber Security, Data Science, and Emerging Technologies. Download our Enterprise Training Catalog from https://www.icertglobal.com/corporate-training-for-enterprises.php

Popular Courses include:

- Project Management: PMP, CAPM, PMI RMP
- Quality Management: Six Sigma Black Belt, Lean Six Sigma Green Belt, Lean Management, Minitab, CMMI
- Business Analysis: CBAP, CCBA, ECBA
- Agile Training: PMI-ACP, CSM, CSPO
- Scrum Training: CSM
- DevOps
- Program Management: PgMP
- Cloud Technology: EXIN Cloud Computing
- Citrix Client Administration: Citrix Cloud Administration

Conclusion

The pursuit of synergy between COBIT® 5 and IT Service Management (ITSM) can meaningfully improve organizational effectiveness in IT governance and service delivery. Carefully implemented, this strategic alignment helps harmonize IT processes, strengthen risk management, and deliver IT services in line with business objectives.

The step-by-step guide provided for achieving this synergy emphasizes the importance of a systematic and collaborative approach. From the initial assessment of the current state to the establishment of key performance indicators (KPIs) for ongoing measurement, the guide offers a structured pathway for organizations to navigate the integration process. Defining clear objectives, engaging stakeholders, and fostering a culture of continuous improvement emerge as pivotal elements, ensuring that the integration is purposeful, inclusive, and adaptable to the evolving landscape of IT and business dynamics.

The seamless alignment of COBIT® 5 Foundation and ITSM processes contributes not only to operational efficiency but also to a more resilient and responsive IT ecosystem. By integrating governance and service management, organizations are better equipped to meet the ever-changing needs of stakeholders, enhance risk mitigation strategies, and cultivate a customer-centric approach to IT services.

As organizations embark on this journey of achieving synergy between COBIT® 5 Foundation and ITSM, the ultimate goal is to create a dynamic and adaptive IT environment. This integration is not a static achievement but a continuous process of refinement and optimization. Success is measured not just by the integration itself but by the sustained ability to deliver value, align with strategic goals, and proactively respond to the challenges and opportunities inherent in the digital landscape. In essence, achieving synergy between COBIT® 5 Foundation and ITSM is a strategic imperative that positions organizations to thrive in an era where effective governance and agile service management are essential for sustained success.

Read More

The convergence of COBIT® 5 Foundation and IT Service Management (ITSM) represents a dynamic and synergistic approach to enhancing organizational governance and optimizing IT service delivery. COBIT® 5, developed by the Information Systems Audit and Control Association (ISACA), is a globally recognized framework that provides a comprehensive set of principles, practices, and analytical tools for the effective governance and management of enterprise IT. On the other hand, IT Service Management focuses on aligning IT services with the needs of the business, emphasizing the delivery of high-quality services that meet customer expectations.

In recent years, organizations have increasingly recognized the need to integrate and harmonize their governance and service management practices. The collaboration between COBIT® 5 Foundation and ITSM offers a strategic alignment that enables businesses to achieve a more seamless and efficient IT environment. This integration is particularly crucial in today's complex and rapidly evolving digital landscape, where organizations must navigate challenges such as cybersecurity threats, regulatory compliance, and the ever-growing demand for innovative IT services.

The foundational principles of COBIT® 5, which include a focus on stakeholder value, risk management, and continual improvement, align seamlessly with the core objectives of IT Service Management. By combining these frameworks, organizations can establish a robust foundation for achieving not only compliance and risk mitigation but also the delivery of high-quality, customer-centric IT services. This synergy empowers businesses to streamline their processes, enhance decision-making, and foster a culture of collaboration across different functional areas.

Table of contents

-

Overview of COBIT® 5 Foundation

-

Essentials of IT Service Management (ITSM)

-

Governance and Risk Management Integration

-

Implementing the Synergy: Step-by-Step Guide

-

Measuring Success: Key Performance Indicators (KPIs)

-

Conclusion

Overview of COBIT® 5 Foundation

COBIT® 5 Foundation serves as a comprehensive framework developed by the Information Systems Audit and Control Association (ISACA) to guide organizations in achieving effective governance and management of information technology (IT). At its core, COBIT® (Control Objectives for Information and Related Technologies) emphasizes the importance of aligning IT processes with business objectives, ensuring that IT activities contribute directly to organizational success. The framework provides a set of principles, practices, and enablers that support enterprises in optimizing their IT capabilities while managing associated risks and ensuring the delivery of value to stakeholders.

The COBIT® 5 Foundation framework is structured around five key principles, each contributing to the achievement of effective IT governance. These principles include meeting stakeholder needs, covering the enterprise end-to-end, applying a single integrated framework, enabling a holistic approach, and separating governance from management. Together, these principles guide organizations in establishing a governance and management system that is both efficient and adaptable to the evolving landscape of IT and business.

COBIT® 5 Foundation offers a structured and comprehensive approach to IT governance, emphasizing stakeholder value, principled practices, and adaptability to the ever-changing IT landscape. By leveraging this framework, organizations can establish a robust foundation for effective governance, risk management, and the continuous improvement of their IT processes, ultimately contributing to enhanced overall business performance.

Essentials of IT Service Management (ITSM)

Essentials of IT Service Management (ITSM) form the foundational principles and practices that organizations adopt to ensure the effective delivery and support of IT services aligned with business objectives. At its core, ITSM revolves around meeting the needs of users, optimizing service quality, and enhancing overall organizational efficiency. The following components encapsulate the key essentials of ITSM:

Service Strategy constitutes a critical pillar of ITSM, involving the development of a comprehensive strategy aligned with business goals. This phase entails understanding customer needs, defining service offerings, and formulating a strategic roadmap for delivering IT services that contribute directly to the success of the organization.

In the Service Design phase, the focus shifts to creating service solutions that meet the requirements outlined in the service strategy. This encompasses designing processes, technologies, and other essential elements necessary for the efficient and effective delivery of high-quality services. Service design ensures that IT services are not only functional but also meet the broader objectives of the organization.

Service Transition is a crucial aspect of ITSM that involves planning and managing changes to services and service management processes. This phase ensures a smooth transition of new or modified services into the operational environment while minimizing disruptions. It encompasses activities such as change management, release and deployment management, and knowledge management.

Continual Service Improvement (CSI) serves as a guiding principle within ITSM, emphasizing the necessity of ongoing enhancement and optimization of services and processes. Through regular assessments, feedback mechanisms, and a commitment to learning from experiences, organizations practicing ITSM can identify areas for improvement and ensure a cycle of continuous enhancement in service delivery and operational efficiency. Together, these essentials form a holistic framework for organizations seeking to align their IT services with business goals, enhance customer satisfaction, and adapt to the dynamic landscape of technology and user expectations.

Governance and Risk Management Integration

In the realm of governance and risk management integration, organizations benefit from fostering a culture of risk awareness and accountability. This cultural shift encourages employees at all levels to recognize and report risks, fostering a collective responsibility for risk mitigation. By incorporating risk considerations into the organization's values and day-to-day operations, companies can create a more proactive and resilient stance toward potential challenges.

Integration also involves the development and implementation of robust risk management frameworks that seamlessly align with governance structures. This includes defining clear roles and responsibilities for risk management at various levels of the organization, establishing effective communication channels for sharing risk information, and integrating risk assessments into strategic planning processes. Such frameworks not only identify potential risks but also provide a structured approach to managing and monitoring these risks over time.

Continuous learning and improvement are inherent components of successful integration. Organizations should conduct periodic reviews and audits to evaluate the effectiveness of their integrated governance and risk management approach. Lessons learned from incidents and successes should be incorporated into future strategies, fostering a dynamic and adaptive governance structure that evolves in tandem with the changing risk landscape.

Governance and risk management integration is a multifaceted and ongoing process that requires a commitment to cultural change, technological innovation, and continuous improvement. By weaving risk considerations into the fabric of governance structures, organizations can enhance their resilience, make more informed decisions, and navigate an increasingly complex and uncertain business environment.

Implementing the Synergy: Step-by-Step Guide

Implementing the synergy between COBIT® 5 Foundation and IT Service Management (ITSM) is a strategic imperative that demands a methodical and well-coordinated approach. Commencing with a thorough assessment of the current state of IT governance and service management, organizations gain a foundational understanding of existing processes and their maturity. This assessment sets the stage for subsequent integration efforts, providing valuable insights into the organization's strengths and areas for improvement.

Precise definition of integration objectives and scope follows, as organizations articulate the desired outcomes and benefits to be derived from the amalgamation of COBIT® 5 Foundation and ITSM. A clear scope ensures that efforts remain focused on specific goals, preventing potential deviations and ensuring a streamlined implementation process. Stakeholder engagement becomes paramount, involving key representatives from IT and business units to gather diverse perspectives and ensure alignment with overarching organizational goals. This collaborative engagement fosters buy-in and support crucial for the successful integration.

Key performance indicators (KPIs) are established to measure the success of the integration, encompassing governance effectiveness, service delivery efficiency, and overall alignment with organizational objectives. Regular monitoring and assessment of these indicators offer valuable insights into the performance and impact of the integrated framework, guiding ongoing improvements.

Implementation efforts extend to training programs and change management initiatives, ensuring that teams are equipped with the necessary knowledge and skills to operate within the integrated framework. Pilot programs may be initiated in specific departments or business units to test the integrated framework in a controlled environment, allowing for the identification and resolution of potential challenges before full-scale deployment. Continuous monitoring and evaluation mechanisms are established to assess the ongoing effectiveness of the integrated COBIT® 5 Foundation and ITSM framework, incorporating feedback from users and stakeholders to drive necessary adjustments.

Cultivating a culture of continuous improvement is emphasized, encouraging teams to identify opportunities for enhancement and establishing mechanisms for regular reviews and refinements to the integrated framework. This adaptive approach ensures the sustained success of the integration, aligning IT services seamlessly with business objectives while fostering a dynamic and resilient organizational environment. In conclusion, this step-by-step guide serves as a comprehensive and structured approach to implementing the synergy between COBIT® 5 Foundation and IT Service Management, ultimately enhancing governance practices and optimizing the delivery of IT services.

Measuring Success: Key Performance Indicators (KPIs)

Measuring the success of the integration between COBIT® 5 Foundation and IT Service Management (ITSM) relies on a thoughtful selection of Key Performance Indicators (KPIs) that encompass various aspects critical to the alignment of governance and service delivery. One pivotal category of KPIs revolves around strategic alignment, evaluating how effectively the integrated framework contributes to organizational objectives. These indicators provide a holistic view of whether the governance practices and IT service delivery align with and support the broader strategic vision of the organization, emphasizing the symbiotic relationship between IT initiatives and overall business goals.

Governance effectiveness serves as another crucial dimension for assessing success. KPIs within this category focus on evaluating the efficiency and efficacy of governance processes. Metrics such as the speed of decision-making, compliance levels, and the responsiveness of governance structures offer insights into how well the organization is managing its IT resources and risks. These indicators serve as a barometer for the overall health and effectiveness of the governance component within the integrated framework.

User satisfaction and experience KPIs form a vital component, providing insights into the impact of the integrated framework on end-users. Metrics such as user satisfaction surveys, feedback on service quality, and user adoption rates offer a qualitative assessment of how well the integrated approach meets stakeholder expectations. These indicators are crucial for ensuring a positive and productive user experience, as the success of the integration is ultimately measured by the satisfaction and engagement of the end-users.

A continuous improvement mindset is integral to sustained success, and corresponding KPIs focus on the organization's ability to adapt and refine the integrated framework over time. Metrics related to the frequency and effectiveness of updates and refinements, as well as the organization's agility in responding to emerging challenges, highlight the dynamic nature of governance and service management integration. Success, in this context, is not merely a destination but an ongoing journey of refinement and adaptation to ensure that governance and service management practices evolve in tandem with organizational goals and the evolving IT landscape.

How to obtain COBIT® 5 Foundation certification?

We are an Education Technology company providing certification training courses to accelerate careers of working professionals worldwide. We impart training through instructor-led classroom workshops, instructor-led live virtual training sessions, and self-paced e-learning courses.

We have successfully conducted training sessions in 108 countries across the globe and enabled thousands of working professionals to enhance the scope of their careers.

Our enterprise training portfolio includes in-demand and globally recognized certification training courses in Project Management, Quality Management, Business Analysis, IT Service Management, Agile and Scrum, Cyber Security, Data Science, and Emerging Technologies. Download our Enterprise Training Catalog from https://www.icertglobal.com/corporate-training-for-enterprises.php

Popular Courses include:

- Project Management: PMP, CAPM, PMI RMP

- Quality Management: Six Sigma Black Belt, Lean Six Sigma Green Belt, Lean Management, Minitab, CMMI

- Business Analysis: CBAP, CCBA, ECBA

- Agile Training: PMI-ACP, CSM, CSPO

- Scrum Training: CSM

- DevOps

- Program Management: PgMP

- Cloud Technology: EXIN Cloud Computing

- Citrix Client Administration: Citrix Cloud Administration

Conclusion

In conclusion, the pursuit of synergy between COBIT® 5 Foundation and IT Service Management (ITSM) stands as a transformative endeavor, poised to elevate organizational effectiveness in IT governance and service delivery. This convergence represents a strategic alignment that, when carefully implemented, holds the promise of harmonizing IT processes, optimizing risk management, and enhancing the delivery of IT services in line with business objectives.

The step-by-step guide provided for achieving this synergy emphasizes the importance of a systematic and collaborative approach. From the initial assessment of the current state to the establishment of key performance indicators (KPIs) for ongoing measurement, the guide offers a structured pathway for organizations to navigate the integration process. Defining clear objectives, engaging stakeholders, and fostering a culture of continuous improvement emerge as pivotal elements, ensuring that the integration is purposeful, inclusive, and adaptable to the evolving landscape of IT and business dynamics.

The seamless alignment of COBIT® 5 Foundation and ITSM processes contributes not only to operational efficiency but also to a more resilient and responsive IT ecosystem. By integrating governance and service management, organizations are better equipped to meet the ever-changing needs of stakeholders, enhance risk mitigation strategies, and cultivate a customer-centric approach to IT services.

As organizations embark on this journey of achieving synergy between COBIT® 5 Foundation and ITSM, the ultimate goal is to create a dynamic and adaptive IT environment. This integration is not a static achievement but a continuous process of refinement and optimization. Success is measured not just by the integration itself but by the sustained ability to deliver value, align with strategic goals, and proactively respond to the challenges and opportunities inherent in the digital landscape. In essence, achieving synergy between COBIT® 5 Foundation and ITSM is a strategic imperative that positions organizations to thrive in an era where effective governance and agile service management are essential for sustained success.

Mastering Lightning Web Components: A Guide for Admins

"Mastering Lightning Web Components: A Guide for Salesforce Administrators and App Builders" is a comprehensive and authoritative resource designed to empower individuals within the Salesforce ecosystem to harness the full potential of Lightning Web Components (LWC). In the rapidly evolving landscape of Salesforce development, LWC has emerged as a powerful and modern framework for building dynamic and responsive user interfaces. This book is tailored specifically for Salesforce administrators and app builders, providing them with the knowledge and skills needed to elevate their capabilities and streamline the development process.

The introduction of Lightning Web Components has marked a paradigm shift in how applications are built on the Salesforce platform. With a focus on reusability, performance, and developer productivity, LWC enables users to create fast, scalable, and modular applications. "Mastering Lightning Web Components" serves as a roadmap for this technology, offering clear and practical guidance on the intricacies of LWC development.

Written by seasoned experts in the Salesforce ecosystem, this guide strikes a balance between technical depth and accessibility, making it an ideal companion for both newcomers and experienced professionals seeking to deepen their understanding of Lightning Web Components. The book not only covers the fundamentals of LWC but also delves into advanced topics, best practices, and real-world scenarios, providing readers with a holistic understanding of how to leverage LWC to meet the unique needs of their Salesforce projects.

Whether you are a Salesforce administrator looking to enhance your declarative skills or an app builder aiming to extend your development prowess, "Mastering Lightning Web Components" equips you with the knowledge and insights needed to thrive in the dynamic world of Salesforce application development. From building custom components to optimizing performance and ensuring seamless integration with existing workflows, this guide is your key to unlocking the full potential of Lightning Web Components within the Salesforce ecosystem.

Table of contents

- Foundations of Lightning Web Components (LWC)

- Declarative Development with LWC for Administrators

- Advanced LWC Development for App Builders

- Optimizing Performance in Lightning Web Components

- Integration and Extensibility in LWC

- Conclusion

Foundations of Lightning Web Components (LWC)

In the realm of Salesforce development, a solid understanding of the foundations of Lightning Web Components (LWC) is essential for any administrator or app builder seeking to harness the full potential of this modern framework. At its core, LWC introduces a component-based architecture built on web standards such as custom elements, Shadow DOM, and ES modules, which it uses to create dynamic and responsive user interfaces. This section of the guide covers the fundamental concepts that underpin LWC, giving readers an overview of the building blocks that define the framework.

At the heart of LWC lie three key elements: templates, JavaScript classes, and decorators. Templates define the structure of a component, blending HTML markup with bindings to dynamic data. JavaScript classes provide the logic behind the component, holding its state and handling events and data requests. Decorators tie the two together by attaching metadata that defines specific behaviors: `@api` exposes a public property, `@track` makes changes inside an object or array reactive, and `@wire` connects a property or function to a data source.
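
The split between template and class can be illustrated without the framework itself. The sketch below is a plain TypeScript model, not the LWC runtime; `renderTemplate`, `GreetingComponent`, and its `set` method are hypothetical names. It shows a template with `{placeholder}` bindings, a class that owns the state, and a re-render whenever a field changes, which is roughly what LWC does for you when a property used in the template is updated.

```typescript
// Framework-free model of the LWC split between a template (markup with
// {placeholders}) and a class (state and logic). This is NOT the LWC
// runtime; renderTemplate and GreetingComponent are hypothetical names.
type State = Record<string, string | number>;

// Replace each {key} placeholder with the matching state value, loosely
// mirroring how an LWC template binds {property} expressions.
function renderTemplate(template: string, state: State): string {
  return template.replace(/\{(\w+)\}/g, (_match: string, key: string) =>
    key in state ? String(state[key]) : "",
  );
}

class GreetingComponent {
  static template = "<p>Hello, {name}! You have {count} new cases.</p>";
  private state: State = { name: "Admin", count: 0 };
  output = "";

  constructor() {
    this.render(); // initial render
  }

  // Changing a field triggers a re-render, similar in spirit to how LWC
  // re-renders when a property used in the template changes.
  set(key: string, value: string | number): void {
    this.state[key] = value;
    this.render();
  }

  private render(): void {
    this.output = renderTemplate(GreetingComponent.template, this.state);
  }
}

const cmp = new GreetingComponent();
cmp.set("count", 3);
console.log(cmp.output); // <p>Hello, Admin! You have 3 new cases.</p>
```

In a real component the template lives in its own `.html` file and the runtime handles re-rendering; writing it out by hand here only makes the data flow visible.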

Understanding how LWC fits into the broader Salesforce Lightning framework is crucial for practitioners looking to navigate the Salesforce ecosystem effectively. This section explains the relationships between Lightning Web Components and other Lightning technologies, such as Aura components and Lightning Data Service, and how LWC fits into the broader Lightning Experience. By grasping these foundational concepts, readers are equipped to begin effective and efficient Lightning Web Component development.

Declarative Development with LWC for Administrators

In the rapidly evolving landscape of Salesforce, administrators play a pivotal role in shaping user experiences and streamlining processes. This section of the guide focuses on how administrators can leverage the power of Lightning Web Components (LWC) for declarative development, empowering them to create sophisticated user interfaces without delving into extensive code.

One key aspect explored is how LWC components are added to pages in Lightning App Builder and Community Builder (now Experience Builder). A custom component appears in a builder's palette only when the component's `.js-meta.xml` configuration file sets `isExposed` to true and lists the page targets it supports. From there, administrators can place custom Lightning Web Components on Lightning pages, a visual and intuitive way to enhance user interfaces. Through a step-by-step exploration, administrators learn how to use the declarative capabilities of these builders to tailor user experiences to specific business requirements.

Furthermore, the guide covers best practices for designing and configuring Lightning pages with LWC components. Administrators gain an understanding of the declarative tools at their disposal, such as page layouts, Dynamic Forms, and component properties (public `@api` properties that a developer exposes in the component's configuration file so administrators can set them in the builder). This knowledge enables them to customize user interfaces efficiently, ensuring a cohesive and user-friendly experience for their Salesforce users.

By the end of this section, administrators not only grasp the essentials of declarative development with LWC but also acquire the skills to implement powerful and customized solutions within the Salesforce platform. The combination of visual tools and Lightning Web Components empowers administrators to take their declarative development capabilities to new heights, providing a bridge between code-driven development and intuitive, user-friendly interfaces.

Advanced LWC Development for App Builders

As app builders strive for innovation and sophistication in their Salesforce applications, a mastery of advanced Lightning Web Components (LWC) development becomes imperative. This section of the guide immerses app builders in the intricacies of LWC, going beyond the basics to explore techniques that enhance scalability, maintainability, and overall development efficiency.

The journey into advanced LWC development begins with a focus on creating reusable components and modules. App builders discover how to design components that transcend individual use cases, fostering modularity and extensibility in their applications. By understanding the principles of component reusability, builders gain the tools to construct scalable and maintainable solutions that adapt to evolving business needs.

A significant emphasis is placed on navigating the complexities of data handling in LWC. This includes in-depth exploration of asynchronous operations, efficient client-side caching, and strategic server-side communication. App builders learn how to optimize data flow within their applications, ensuring responsiveness and minimizing latency for an optimal user experience.
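
A hand-rolled version of client-side caching makes the trade-off between freshness and server load visible. On the platform, the usual route is to mark an Apex method `@AuraEnabled(cacheable=true)` and call it through `@wire`, letting the framework cache results; the class below is instead a framework-free sketch with hypothetical names (`CachedFetcher`, `Fetcher`) and an assumed time-to-live policy. It keeps each result for `ttlMs` milliseconds and makes concurrent requests for the same key share one in-flight promise.

```typescript
// Hypothetical client-side cache for server calls. Results are kept for
// ttlMs milliseconds, and concurrent requests for the same key share one
// in-flight promise instead of triggering duplicate calls.
type Fetcher<T> = (key: string) => Promise<T>;

class CachedFetcher<T> {
  private cache = new Map<string, { value: T; expiresAt: number }>();
  private inFlight = new Map<string, Promise<T>>();
  private fetcher: Fetcher<T>;
  private ttlMs: number;
  private now: () => number;

  constructor(fetcher: Fetcher<T>, ttlMs: number, now: () => number = Date.now) {
    this.fetcher = fetcher;
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, handy for tests
  }

  get(key: string): Promise<T> {
    const hit = this.cache.get(key);
    if (hit && hit.expiresAt > this.now()) {
      return Promise.resolve(hit.value); // fresh cached value, no server call
    }
    const pending = this.inFlight.get(key);
    if (pending) {
      return pending; // same key already requested: reuse that call
    }
    const request = this.fetcher(key).then(
      (value) => {
        this.inFlight.delete(key);
        this.cache.set(key, { value, expiresAt: this.now() + this.ttlMs });
        return value;
      },
      (error: unknown) => {
        this.inFlight.delete(key); // let a later call retry
        throw error;
      },
    );
    this.inFlight.set(key, request);
    return request;
  }

  // Drop a cached entry, e.g. after the user edits the record.
  invalidate(key: string): void {
    this.cache.delete(key);
  }
}

// Usage: two simultaneous reads of the same record cost one server call.
let calls = 0;
const loadAccountName: Fetcher<string> = async (id) => {
  calls += 1; // stands in for a real server round trip
  return `Account ${id}`;
};

const accounts = new CachedFetcher(loadAccountName, 60000);
Promise.all([accounts.get("001"), accounts.get("001")]).then(([first, second]) => {
  console.log(first === second, calls); // true 1
});
```

A shorter `ttlMs` favors fresher data at the cost of more server calls, while `invalidate` covers the case where the user has just changed the record.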

The guide also delves into advanced topics such as event-driven architectures, enabling app builders to design applications that respond dynamically to user interactions and external events. Through real-world examples and best practices, builders gain insights into leveraging custom events, understanding the publish-subscribe pattern, and orchestrating seamless communication between Lightning Web Components.
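
The publish-subscribe pattern itself fits in a few lines. In LWC, a child typically notifies its parent by dispatching a `CustomEvent`, and Lightning Message Service connects components that do not share a DOM hierarchy; the `MessageBus` class below is a hypothetical, framework-free sketch of the underlying pattern rather than either of those APIs. Publishers and subscribers agree only on a channel name and payload type, never on each other.

```typescript
// Hypothetical publish-subscribe bus: senders and receivers share only a
// channel name and a payload type, not references to each other.
type Handler<T> = (payload: T) => void;

class MessageBus<T> {
  private channels = new Map<string, Set<Handler<T>>>();

  // Register a handler and return a function that removes it again.
  subscribe(channel: string, handler: Handler<T>): () => void {
    const handlers = this.channels.get(channel) ?? new Set<Handler<T>>();
    this.channels.set(channel, handlers);
    handlers.add(handler);
    return () => {
      handlers.delete(handler);
    };
  }

  // Deliver the payload to every current subscriber; returns how many ran.
  publish(channel: string, payload: T): number {
    const handlers = this.channels.get(channel);
    if (!handlers) return 0;
    const snapshot = Array.from(handlers); // copy so handlers may unsubscribe safely
    snapshot.forEach((handler) => handler(payload));
    return snapshot.length;
  }
}

// Usage: a list component announces a selection; a detail panel reacts.
const bus = new MessageBus<{ recordId: string }>();
const shown: string[] = [];
const unsubscribe = bus.subscribe("recordSelected", (msg) => {
  shown.push(msg.recordId);
});
bus.publish("recordSelected", { recordId: "001A" });
unsubscribe();
bus.publish("recordSelected", { recordId: "001B" }); // no subscribers left
console.log(shown.join(",")); // 001A
```

Returning an unsubscribe function keeps cleanup in the subscriber's hands; in a component, that call would belong in a teardown hook such as `disconnectedCallback`.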

App builders emerge with a heightened proficiency in advanced LWC development. Armed with the knowledge to create modular, efficient, and responsive applications, they are well-equipped to tackle the complexities of modern Salesforce development, delivering solutions that not only meet but exceed the expectations of users and stakeholders.

Optimizing Performance in Lightning Web Components

Performance optimization is a critical facet of Lightning Web Components (LWC) development, ensuring that Salesforce applications deliver a seamless and responsive user experience. This section of the guide delves into the strategies and techniques employed to maximize the efficiency of LWC applications, covering aspects from rendering speed to minimizing server calls.

The exploration begins with a focus on efficient rendering techniques, where developers learn best practices for structuring components to improve page load times. This includes understanding LWC lifecycle hooks such as `connectedCallback` and `renderedCallback`, keeping template structures lean, and lazy loading non-critical content so that critical content appears first. By mastering these rendering optimizations, developers can significantly improve the perceived performance of their applications.
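
Lazy loading comes down to deferring expensive work until it is first needed and never repeating it. The `lazy` helper below is a hypothetical, framework-free sketch: wrap any async loader (for example, one that loads a third-party script, which on the platform is usually done with `loadScript` from `lightning/platformResourceLoader`) and the returned function starts the work on first use, shares the result afterwards, and allows a retry if loading fails. This mirrors the common guard against re-running setup in `renderedCallback`, which fires after every render.

```typescript
// Hypothetical lazy loader: the wrapped work starts on the first call only,
// later calls share the same promise, and a failure clears it for a retry.
function lazy<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (!pending) {
      pending = loader().catch((error: unknown) => {
        pending = undefined; // allow the next call to try again
        throw error;
      });
    }
    return pending;
  };
}

// Usage: nothing is loaded until the chart section is actually shown.
let downloads = 0;
const loadChartLibrary = lazy(async () => {
  downloads += 1; // stands in for downloading a large script
  return { name: "charts" };
});

loadChartLibrary()
  .then(() => loadChartLibrary())
  .then((lib) => console.log(lib.name, downloads)); // charts 1
```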

A significant portion of the section is dedicated to minimizing Apex calls and optimizing server-side communication. Developers learn how to design LWC components that communicate efficiently with the server, reducing the payload and minimizing latency. Techniques such as caching and asynchronous operations are explored in detail, allowing developers to strike a balance between data freshness and performance.
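
One way to cut the number of server round trips is to batch them. The `Batcher` class below is a hypothetical sketch, not a Salesforce API: it collects lookups made in the same tick and sends them as a single bulk request, such as one call to an Apex method that accepts a list of record Ids. The bulk loader's signature and the microtask-based scheduling are assumptions chosen for illustration. Duplicate keys within a batch are requested only once.

```typescript
// Hypothetical request batcher: lookups issued in the same tick are sent as
// one bulk request instead of one server round trip per record.
type BulkLoader<V> = (keys: string[]) => Promise<Map<string, V>>;

interface Pending<V> {
  key: string;
  resolve: (value: V | undefined) => void;
  reject: (reason: unknown) => void;
}

class Batcher<V> {
  private queue: Pending<V>[] = [];
  private loader: BulkLoader<V>;

  constructor(loader: BulkLoader<V>) {
    this.loader = loader;
  }

  // Queue one lookup; resolves to undefined if the server has no result for it.
  load(key: string): Promise<V | undefined> {
    return new Promise<V | undefined>((resolve, reject) => {
      this.queue.push({ key, resolve, reject });
      if (this.queue.length === 1) {
        // First lookup of this tick: flush once the current code finishes.
        Promise.resolve().then(() => this.flush());
      }
    });
  }

  private flush(): void {
    const batch = this.queue;
    this.queue = [];
    const keys = Array.from(new Set(batch.map((item) => item.key))); // de-duplicate
    this.loader(keys).then(
      (results) => batch.forEach((item) => item.resolve(results.get(item.key))),
      (error) => batch.forEach((item) => item.reject(error)),
    );
  }
}

// Usage: three lookups, two distinct Ids, one simulated server call.
const serverCalls: string[][] = [];
const fetchNames: BulkLoader<string> = async (ids) => {
  serverCalls.push(ids); // stands in for one Apex call taking a list of Ids
  return new Map(ids.map((id): [string, string] => [id, `Record ${id}`]));
};

const names = new Batcher(fetchNames);
Promise.all([names.load("001"), names.load("002"), names.load("001")]).then((values) => {
  console.log(values.join(" | "), "calls:", serverCalls.length);
  // Record 001 | Record 002 | Record 001 calls: 1
});
```

The cost of batching is a tiny delay before the first request is sent, since the flush waits only until the current synchronous code finishes.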

Developers come away with a robust set of tools and techniques to optimize the performance of their Lightning Web Components. Whether through rendering optimizations, efficient server communication, or client-side strategies, this knowledge empowers developers to deliver lightning-fast applications that meet the high standards of modern user expectations in the Salesforce ecosystem.

Integration and Extensibility in LWC

In the ever-evolving landscape of Salesforce development, the ability to seamlessly integrate Lightning Web Components (LWC) with various Salesforce technologies and extend their functionality is crucial for creating versatile and interconnected applications. This section of the guide provides a comprehensive exploration of the strategies and techniques that developers can employ to achieve robust integration and enhance the extensibility of their LWC solutions.

The integration journey begins with the role of Apex controllers in connecting LWC components with the Salesforce server. Developers learn to use server-side logic for data retrieval, manipulation, and other operations, fostering a cohesive and efficient data flow within their applications. Additionally, the guide explores the nuances of using LWC components inside existing Aura components (a Lightning web component can be nested in an Aura component, but not the reverse), offering a roadmap for creating a unified user experience across diverse Salesforce functionality.

Extensibility, a key aspect of effective application development, is addressed through a deep dive into the use of custom events in LWC. Developers learn how to implement event-driven architectures, enabling seamless communication and collaboration between components. This section also covers advanced topics such as dynamic component creation and composition, allowing developers to design flexible and adaptable solutions that can be easily extended to meet evolving business requirements.
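
Dynamic creation and composition usually rest on a registry: components are registered under a name, and the one to instantiate is picked at runtime from data. LWC has its own mechanism for dynamic components, so the plain TypeScript below only models the idea; `register`, `create`, and the `RenderableComponent` interface are hypothetical. Adding a new component type means registering one more factory, without touching the code that renders the layout.

```typescript
// Hypothetical component registry: factories are registered under a name,
// and the component to create is chosen at runtime from data.
interface RenderableComponent {
  render(): string;
}

type ComponentFactory = (props: Record<string, string>) => RenderableComponent;

const registry = new Map<string, ComponentFactory>();

function register(type: string, factory: ComponentFactory): void {
  registry.set(type, factory);
}

function create(type: string, props: Record<string, string>): RenderableComponent {
  const factory = registry.get(type);
  if (!factory) {
    throw new Error(`No component registered as "${type}"`);
  }
  return factory(props);
}

// Two illustrative component types.
register("badge", (props) => ({ render: () => `[${props.label}]` }));
register("banner", (props) => ({ render: () => `*** ${props.label} ***` }));

// The layout is plain data (it could come from configuration), so new
// entries need no change to the rendering code below.
const layout = [
  { type: "banner", props: { label: "Renewal due" } },
  { type: "badge", props: { label: "Gold" } },
];
const rendered = layout.map((entry) => create(entry.type, entry.props).render());
console.log(rendered.join("\n"));
// *** Renewal due ***
// [Gold]
```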

This section equips developers with a holistic understanding of integration and extensibility in LWC, fostering the creation of applications that seamlessly connect within the Salesforce ecosystem while providing the flexibility to adapt and scale to the unique demands of diverse business landscapes. By mastering these integration and extensibility strategies, developers unlock the full potential of Lightning Web Components in building interconnected, dynamic, and future-proof Salesforce solutions.

Conclusion

In the journey of "Mastering Lightning Web Components: A Guide for Salesforce Administrators and App Builders," we have traversed the intricate landscape of LWC development, covering foundational concepts, declarative development, advanced techniques, performance optimization, and seamless integration. As we conclude this guide, it is evident that Lightning Web Components stand at the forefront of modern Salesforce development, offering a powerful and flexible framework for crafting dynamic and responsive applications.

Throughout the guide, administrators have gained insights into declarative development, learning how to wield the visual tools of Lightning App Builder and Community Builder to enhance user interfaces. App builders have delved into advanced LWC development, acquiring the skills to create modular, scalable, and innovative applications that transcend the basics. Developers have explored performance optimization strategies, ensuring their applications meet the high standards of responsiveness expected in the digital era. Integration and extensibility have been demystified, empowering developers to seamlessly connect LWC components within the Salesforce ecosystem and extend their functionality to meet diverse business needs.

As the Salesforce ecosystem continues to evolve, embracing Lightning Web Components as a cornerstone of development opens doors to innovation and agility. The guide's comprehensive coverage equips individuals to not only meet but exceed the expectations of users, stakeholders, and the dynamic Salesforce platform itself. Whether crafting intuitive user interfaces, optimizing for performance, or seamlessly integrating with external systems, the skills acquired in this guide are a testament to the empowerment that comes with mastering Lightning Web Components in the ever-expanding Salesforce universe.

5G and IoT: A Game-Changing Combo for Seamless Connectivity

The advent of 5G technology has marked a transformative era in the realm of connectivity, promising unprecedented speed, low latency, and enhanced network capabilities. This fifth-generation wireless technology goes beyond merely improving our browsing experiences; it forms the backbone of a digital revolution that extends its influence to various industries and technologies. One of the most promising synergies emerging from the 5G landscape is its integration with the Internet of Things (IoT), creating a powerful alliance that has the potential to redefine how devices communicate, share data, and contribute to a seamlessly connected world.

As we delve into the intersection of 5G and IoT, it becomes evident that their combined capabilities unlock a new dimension of possibilities. The Internet of Things, characterized by interconnected devices exchanging information and performing tasks autonomously, gains a significant boost with the high-speed, low-latency nature of 5G networks. This synergy is poised to propel the growth of smart cities, autonomous vehicles, industrial automation, and countless other applications that demand rapid and reliable data transmission.

Moreover, the amalgamation of 5G and IoT is not confined to elevating the speed of data transfer. It brings forth a paradigm shift in how devices interact and collaborate, fostering an environment where real-time communication becomes the norm rather than the exception. This real-time connectivity has far-reaching implications, from enabling critical applications in healthcare to enhancing the efficiency of supply chain management. The seamless integration of 5G and IoT creates a robust foundation for innovation, paving the way for novel solutions and services that were once constrained by the limitations of previous-generation networks.

In this exploration of the powerful combination of 5G and IoT, it is essential to recognize the potential challenges and considerations that come with such technological advancements. From security concerns to the need for standardized protocols, navigating the intricacies of this evolving landscape requires a holistic understanding of the implications and a commitment to addressing the associated complexities. As we navigate this transformative journey, the fusion of 5G and IoT stands as a beacon guiding us toward a future where connectivity transcends its current boundaries, opening doors to unparalleled possibilities in the digital age.

Table of contents

- Technical Foundations of 5G and IoT Integration

- Enhancing Industrial Processes with 5G and IoT

- Smart Cities and Urban Connectivity

- Security Challenges and Solutions

- Consumer Electronics and 5G

- Edge Computing in the 5G-IoT Ecosystem

- Conclusion

Technical Foundations of 5G and IoT Integration

The integration of 5G and the Internet of Things (IoT) is underpinned by a robust set of technical foundations that collectively redefine the landscape of connectivity. At the core of this integration lies the high-speed data transfer capabilities of 5G networks. With significantly increased data rates compared to its predecessors, 5G facilitates the rapid exchange of information between IoT devices, enabling them to communicate seamlessly and efficiently. This enhanced data transfer speed is a critical factor in supporting the diverse range of applications and services that rely on real-time data processing.
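To make the data-rate point concrete: ignoring latency, protocol overhead, and radio conditions, the time needed to push a payload through a link is its size in bits divided by the link rate. The sketch below is a simple illustration under those assumptions; the 10 MB payload and the 50 Mbit/s and 1 Gbit/s rates are illustrative values chosen for comparison, not measured 4G or 5G speeds.

```python
def transfer_time_ms(payload_bytes: int, rate_mbps: float) -> float:
    """Time in milliseconds to serialize a payload onto a link.

    rate_mbps is in megabits per second (1 Mbit = 1,000,000 bits). Latency,
    protocol overhead and radio conditions are deliberately ignored.
    """
    bits = payload_bytes * 8
    return bits * 1000 / (rate_mbps * 1_000_000)


if __name__ == "__main__":
    payload = 10_000_000  # a 10 MB batch of sensor data (illustrative)
    # Both rates are assumptions for illustration, not measured network speeds.
    for label, rate in [("50 Mbit/s link", 50), ("1 Gbit/s link", 1000)]:
        print(f"{label}: {transfer_time_ms(payload, rate):.0f} ms")
```

With these assumed numbers the same batch takes 1600 ms on the slower link and 80 ms on the faster one, which is the kind of gap that makes frequent, high-volume device reporting practical.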

Low latency, another key element of 5G's technical design, plays a pivotal role in the integration with IoT. The IMT-2020 requirements that define 5G target user-plane latency as low as 1 ms for ultra-reliable low-latency use cases, which sharply reduces communication delays between devices, a crucial requirement for applications demanding real-time interactions. This is particularly relevant in scenarios such as autonomous vehicles, where split-second decision-making is imperative for safety and efficiency. The low-latency aspect of 5G is thus instrumental in unlocking the full potential of IoT applications that necessitate swift and reliable communication.
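A quick way to see why latency matters for vehicles is to compute how far a car travels while it waits for a network response. The sketch below assumes a speed of 100 km/h and compares an illustrative 50 ms delay with a 1 ms delay; it counts network waiting time only, not sensing or on-board processing.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Metres a vehicle covers while it waits latency_ms for a network response."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


if __name__ == "__main__":
    # 100 km/h and both latency values are illustrative assumptions.
    for latency in (50, 1):
        print(f"{latency} ms at 100 km/h: {distance_during_latency_m(100, latency):.3f} m")
```

Under these assumptions the car moves about 1.4 m during a 50 ms wait but under 3 cm during a 1 ms wait.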

The technical foundations of 5G and IoT integration rest upon three pillars: high-speed data transfer, low latency, and the adaptability afforded by network slicing, which lets an operator partition a single physical 5G network into multiple virtual networks, each tuned to the latency, bandwidth, and reliability needs of a particular class of devices. This synergy not only addresses the connectivity needs of current IoT applications but also lays the groundwork for the emergence of innovative and transformative technologies that will shape the future of interconnected devices and services.
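Network slicing divides one physical 5G network into several virtual networks with different performance guarantees. The toy model below illustrates the matching idea only: each hypothetical slice advertises a latency bound and a guaranteed rate, and each device is placed on the least demanding slice that still satisfies it. The slice names, numbers, and the `pick_slice` helper are all assumptions for illustration; real slice selection happens inside the 5G core network and is not exposed as an API like this.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Slice:
    """A hypothetical virtual network carved out of one physical 5G network."""
    name: str
    max_latency_ms: float  # worst-case latency this slice is engineered for
    min_rate_mbps: float   # throughput this slice guarantees per device


@dataclass(frozen=True)
class Requirement:
    """What a hypothetical IoT application needs from the network."""
    device: str
    latency_ms: float  # highest latency the application can tolerate
    rate_mbps: float   # lowest throughput the application can work with


def pick_slice(req: Requirement, slices: List[Slice]) -> Optional[Slice]:
    """Return the least demanding slice that still meets the requirement.

    Among slices that satisfy both constraints, prefer the loosest latency
    bound (then the lowest guaranteed rate), so scarce low-latency capacity
    stays free for devices that actually need it. Returns None if no slice fits.
    """
    candidates = [
        s for s in slices
        if s.max_latency_ms <= req.latency_ms and s.min_rate_mbps >= req.rate_mbps
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda s: (s.max_latency_ms, -s.min_rate_mbps))


# Illustrative slices loosely modeled on massive-IoT, broadband and
# ultra-reliable low-latency service categories; the numbers are made up.
SLICES = [
    Slice("massive-iot", max_latency_ms=100, min_rate_mbps=0.1),
    Slice("broadband", max_latency_ms=20, min_rate_mbps=50),
    Slice("low-latency", max_latency_ms=1, min_rate_mbps=1),
]

if __name__ == "__main__":
    for req in [
        Requirement("soil-moisture-sensor", latency_ms=1000, rate_mbps=0.01),
        Requirement("4k-inspection-camera", latency_ms=50, rate_mbps=25),
        Requirement("robot-arm-controller", latency_ms=5, rate_mbps=0.5),
    ]:
        chosen = pick_slice(req, SLICES)
        print(req.device, "->", chosen.name if chosen else "no suitable slice")
```

In this sketch the soil sensor lands on the massive-IoT slice, the camera on the broadband slice, and the robot controller on the low-latency slice, which mirrors the idea of one network serving very different devices.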

Enhancing Industrial Processes with 5G and IoT

The integration of 5G and the Internet of Things (IoT) holds profound implications for the industrial landscape, ushering in a new era marked by heightened efficiency, precision, and automation. One of the primary ways this synergy enhances industrial processes is through the concept of smart factories. With 5G's high-speed data transfer and low latency, IoT-enabled devices within manufacturing plants can communicate in real time, optimizing production processes and reducing downtime. From predictive maintenance of machinery to the coordination of robotic systems, the integration of 5G and IoT empowers industries to operate with unprecedented levels of agility and responsiveness.

Predictive maintenance, enabled by the continuous monitoring and analysis of equipment through IoT sensors, stands as a transformative application in industrial settings. The timely detection of potential issues allows for proactive maintenance, minimizing unplanned downtime and extending the lifespan of machinery. The real-time insights provided by 5G-connected IoT devices contribute to a paradigm shift from reactive to proactive maintenance strategies, fundamentally altering how industries manage their assets.
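A minimal sketch of the kind of check a monitoring pipeline might run on streamed sensor data: flag a reading that deviates from the recent rolling window by more than a few standard deviations. The window size, the threshold of 3, and the vibration values are illustrative assumptions; production predictive-maintenance systems typically use richer models than a rolling z-score.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, List, Tuple


def flag_anomalies(
    readings: Iterable[float], window: int = 5, threshold: float = 3.0
) -> List[Tuple[int, float]]:
    """Return (index, value) pairs that deviate sharply from recent history.

    Each reading is compared with the mean and population standard deviation
    of the previous `window` readings; it is flagged when its z-score exceeds
    `threshold` (or, if the history is perfectly flat, when it differs at all).
    Flagged readings still enter the history, which keeps the sketch simple
    but can briefly mask a second spike.
    """
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            past = list(history)
            mu, sigma = mean(past), pstdev(past)
            deviation = abs(value - mu)
            if (sigma == 0 and deviation > 0) or (sigma > 0 and deviation / sigma > threshold):
                flagged.append((i, value))
        history.append(value)
    return flagged


if __name__ == "__main__":
    # Illustrative vibration readings (mm/s) from a hypothetical motor sensor.
    vibration = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 2.2, 6.5, 2.1]
    print(flag_anomalies(vibration))  # [(7, 6.5)]
```

Only the 6.5 mm/s spike is flagged; in a real deployment that flag would trigger an inspection before the fault turns into unplanned downtime.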

The marriage of 5G and IoT is a catalyst for the transformation of industrial processes. The seamless connectivity, high data speeds, and low latency provided by 5G empower IoT devices to revolutionize manufacturing and operational practices. As industries continue to embrace these technologies, the prospect of smart factories and agile, data-driven decision-making emerges as a cornerstone in the evolution of modern industrial processes.

Smart Cities and Urban Connectivity

The fusion of 5G and the Internet of Things (IoT) is reshaping the urban landscape, giving rise to the concept of smart cities characterized by interconnected and intelligent infrastructure. This transformative synergy addresses the growing challenges faced by urban centers and propels cities into a future where efficiency, sustainability, and quality of life are paramount. At the heart of this evolution is the enhancement of urban connectivity through the deployment of 5G networks and the integration of IoT devices.

In the realm of smart cities, 5G's high-speed data transfer capabilities and low latency redefine the way urban systems operate. From traffic management and public safety to energy distribution and waste management, the integration of IoT sensors and devices leverages 5G connectivity to enable real-time data collection and analysis. This real-time responsiveness facilitates adaptive urban planning, allowing city authorities to make informed decisions promptly and address emerging challenges swiftly.
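As a small illustration of real-time data feeding an urban system, the sketch below splits one traffic-signal cycle among the approaches to a junction in proportion to live queue counts reported by roadside sensors. This proportional rule is a deliberately simplified assumption, not a standard traffic-engineering method; the junction, counts, and timings are hypothetical, and amber and all-red clearance intervals are ignored.

```python
from typing import Dict


def split_green_time(
    queue_counts: Dict[str, int], cycle_s: float, min_green_s: float
) -> Dict[str, float]:
    """Share one signal cycle among approaches in proportion to queued vehicles.

    Every approach first receives min_green_s; the rest of the cycle is divided
    according to the live queue counts (evenly if every queue is empty).
    Amber and all-red clearance intervals are ignored for simplicity.
    """
    if not queue_counts:
        raise ValueError("no approaches given")
    reserved = min_green_s * len(queue_counts)
    if reserved > cycle_s:
        raise ValueError("cycle too short for the minimum green times")
    spare = cycle_s - reserved
    total = sum(queue_counts.values())
    if total == 0:
        return {a: min_green_s + spare / len(queue_counts) for a in queue_counts}
    return {a: min_green_s + spare * n / total for a, n in queue_counts.items()}


if __name__ == "__main__":
    # Hypothetical vehicle counts reported by roadside sensors at one junction.
    counts = {"north": 30, "south": 10, "east": 5, "west": 5}
    print(split_green_time(counts, cycle_s=90, min_green_s=10))
```

Here the busy northern approach gets 40 s of green, the southern 20 s, and the quieter east and west approaches 15 s each; recomputing every cycle lets the timings follow traffic as it changes.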

The collaboration between 5G and IoT is at the forefront of revolutionizing urban connectivity, giving rise to smart cities that are efficient, sustainable, and responsive to the needs of their residents. As this integration continues to evolve, the vision of interconnected urban environments holds the promise of enhancing the quality of life, promoting sustainability, and addressing the complex challenges faced by modern cities.

Security Challenges and Solutions

The integration of 5G and the Internet of Things (IoT) brings forth a myriad of opportunities, but it also introduces a complex array of security challenges that demand careful consideration and innovative solutions. As the number of interconnected devices increases exponentially, so does the attack surface, making the entire ecosystem susceptible to various cyber threats. One prominent challenge is the vulnerability of IoT devices, often characterized by limited computational capabilities and inadequate security measures. These devices, ranging from smart home appliances to industrial sensors, can become entry points for malicious actors if not adequately protected.

The nature of 5G networks introduces additional security concerns, particularly in the context of their virtualized and software-driven architecture. The reliance on software-defined processes and virtualized network functions creates potential points of entry for cyber threats. Moreover, the increased complexity of 5G networks amplifies the difficulty of monitoring and securing the vast number of connections and devices, making it essential to fortify the network against potential breaches.

While the integration of 5G and IoT brings unprecedented connectivity, addressing security challenges is paramount to ensuring the reliability and integrity of these advanced networks. By adopting a holistic and collaborative approach that encompasses encryption, authentication, secure development practices, and ongoing vigilance, stakeholders can build a resilient foundation for the secure coexistence of 5G and IoT technologies.
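Authentication is one of the measures named above, and it can be lightweight enough for constrained devices. The sketch below attaches an HMAC-SHA256 tag to each sensor reading using Python's standard `hmac` module, so a receiver holding the same key can detect tampered or forged messages. The key, field names, and helper functions are hypothetical, and key provisioning is assumed to be solved elsewhere. HMAC provides integrity and authenticity, not confidentiality, so encryption (for example TLS or DTLS) is still needed; the `seq` field only helps against replays if the receiver actually checks it.

```python
import hashlib
import hmac
import json


def sign_reading(key: bytes, reading: dict) -> dict:
    """Serialize a reading deterministically and attach an HMAC-SHA256 tag."""
    payload = json.dumps(reading, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_reading(key: bytes, message: dict) -> bool:
    """Recompute the tag and compare in constant time to resist timing attacks."""
    expected = hmac.new(key, message["payload"].encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


if __name__ == "__main__":
    key = b"device-123-shared-secret"  # hypothetical pre-provisioned key
    msg = sign_reading(key, {"device": "sensor-7", "seq": 42, "temp_c": 21.5})
    print(verify_reading(key, msg))  # True
    tampered = dict(msg, payload=msg["payload"].replace("21.5", "99.9"))
    print(verify_reading(key, tampered))  # False
```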

Consumer Electronics and 5G

The marriage of 5G technology with the Internet of Things (IoT) is reshaping the landscape of consumer electronics, ushering in a new era of connectivity and innovation. 5G's high-speed data transfer capabilities and low latency are revolutionizing the way consumers interact with their electronic devices, from smart homes to wearables and beyond. In the realm of consumer electronics, the integration of 5G brings forth a paradigm shift, offering enhanced experiences, increased device interactivity, and a broader scope of applications.

One of the notable impacts of 5G on consumer electronics is evident in the realm of smart homes. With the proliferation of IoT devices within households, ranging from smart thermostats and security cameras to connected appliances, 5G provides the bandwidth and responsiveness required for these devices to communicate seamlessly. Home automation becomes more sophisticated, allowing users to control and monitor various aspects of their homes remotely. The integration of 5G enables near-instantaneous responses, creating a more responsive and interactive smart home environment.

Entertainment experiences are also elevated through 5G connectivity in consumer electronics. Streaming high-definition content and running virtual reality (VR) and augmented reality (AR) applications become smoother and more responsive, making entertainment more immersive and engaging. The integration of 5G enables faster downloads, reduced lag, and an overall improvement in the quality of multimedia experiences.

The fusion of 5G and consumer electronics marks a transformative moment in the way individuals interact with their devices. The high-speed, low-latency capabilities of 5G contribute to the evolution of smart homes, wearables, and entertainment systems, offering users enhanced connectivity, responsiveness, and a plethora of innovative applications. As consumer electronics continue to evolve within this interconnected ecosystem, the synergy between 5G and IoT is poised to redefine the possibilities of daily technological interactions.

Edge Computing in the 5G-IoT Ecosystem

Edge computing plays a pivotal role in the seamless integration of 5G and the Internet of Things (IoT), ushering in a paradigm shift in how data is processed and utilized within this dynamic ecosystem. At its core, edge computing involves the decentralization of computing resources, bringing data processing closer to the source of data generation. In the context of the 5G-IoT ecosystem, this approach becomes increasingly critical as it addresses the need for real-time data analysis, reduced latency, and efficient bandwidth utilization.
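One way edge computing improves bandwidth utilization is by forwarding a compact summary per time window instead of every raw sample. The sketch below assumes a hypothetical pump sensor sampling 10 times per second and an edge node that sends only the count, minimum, maximum, and mean for each one-minute window; the device name and record fields are illustrative, not a particular platform's format.

```python
import json
from statistics import mean
from typing import Dict, List, Union


def summarize_window(device_id: str, samples: List[float]) -> Dict[str, Union[str, int, float]]:
    """Collapse one window of raw samples into a single summary record."""
    if not samples:
        raise ValueError("cannot summarize an empty window")
    return {
        "device": device_id,
        "count": len(samples),
        "min": min(samples),
        "max": max(samples),
        "mean": round(mean(samples), 3),
    }


if __name__ == "__main__":
    # One minute of hypothetical readings at 10 samples per second.
    samples = [20.0 + 0.5 * (i % 4) for i in range(600)]
    raw = json.dumps([{"device": "pump-3", "value": v} for v in samples])
    summary = json.dumps(summarize_window("pump-3", samples))
    print(f"raw: {len(raw)} bytes, summary: {len(summary)} bytes")
```

The trade-off is that the central system no longer sees individual samples, so an edge node would usually combine summaries like this with local alerting on unusual values.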

One of the key advantages of edge computing in the 5G-IoT landscape is its ability to alleviate latency concerns. By processing data close to where it is generated, edge computing avoids much of the round trip between IoT devices and distant centralized data centers. This is particularly crucial for applications that demand near-instantaneous responses, such as autonomous vehicles, smart grids, and industrial automation. The low-latency benefits of edge computing contribute to enhanced system responsiveness and overall efficiency.
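Some simple arithmetic shows why distance matters. Light in optical fiber travels roughly 200 km per millisecond, so propagation alone puts a floor under any round trip. The sketch below compares an edge node assumed to be 10 km away with a cloud region assumed to be 1,500 km away, adding an illustrative 2 ms of processing to each; radio access, queueing, and routing detours are ignored, so real figures would be higher.

```python
FIBER_KM_PER_MS = 200.0  # light in optical fiber travels roughly 200 km per millisecond


def round_trip_ms(distance_km: float, processing_ms: float) -> float:
    """Fiber propagation delay there and back, plus server processing time.

    Radio access, queueing and routing detours are ignored, so real
    round trips will be longer than this lower bound.
    """
    return 2 * distance_km / FIBER_KM_PER_MS + processing_ms


if __name__ == "__main__":
    # Both distances and the 2 ms processing time are illustrative assumptions.
    print(f"edge node 10 km away:       {round_trip_ms(10, 2):.1f} ms")
    print(f"cloud region 1,500 km away: {round_trip_ms(1500, 2):.1f} ms")
```

Under these assumptions the edge round trip is about 2.1 ms against about 17 ms for the distant region, and no amount of radio improvement can remove the 15 ms spent in the fiber.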

The security implications of edge computing in the 5G-IoT ecosystem are noteworthy as well. By processing sensitive data locally, at the edge, organizations can reduce the risks associated with transmitting it across networks. This localized approach to data processing can strengthen privacy and security, addressing growing concerns about the protection of IoT-generated data.

How to obtain DevOps certification?

We are an Education Technology company providing certification training courses to accelerate careers of working professionals worldwide. We impart training through instructor-led classroom workshops, instructor-led live virtual training sessions, and self-paced e-learning courses.

We have successfully conducted training sessions in 108 countries across the globe and enabled thousands of working professionals to enhance the scope of their careers.

Our enterprise training portfolio includes in-demand and globally recognized certification training courses in Project Management, Quality Management, Business Analysis, IT Service Management, Agile and Scrum, Cyber Security, Data Science, and Emerging Technologies. Download our Enterprise Training Catalog from https://www.icertglobal.com/corporate-training-for-enterprises.php

Popular Courses include:

-

Project Management: PMP, CAPM, PMI RMP

-

Quality Management: Six Sigma Black Belt, Lean Six Sigma Green Belt, Lean Management, Minitab, CMMI

-

Business Analysis: CBAP, CCBA, ECBA

-

Agile Training: PMI-ACP, CSM, CSPO