
10 Unstoppable Reasons to Opt for Big Data

Big Data is everywhere, and it is essential to capture and store the data being generated so that we do not miss anything significant. But the volume of data matters less than what we do with it, which is why Big Data Analytics has become so important in technology. It helps businesses make better decisions and gives them a competitive advantage. The benefits extend to individuals in the analytics field as well: people who understand Big Data and Hadoop have a wide range of job opportunities. In online lending, for instance, Big Data helps lenders make faster and better decisions, which improves the experience of loan applicants.

Why Is Big Data Analytics a Wonderful Career Choice?

If you still doubt that Big Data Analytics is a sought-after skill, here are 10 reasons to help you understand why.

1. Analytics Workers: As Jeanne Harris, a senior executive at the Accenture Institute for High Performance, says, "data is useless without the skill to analyze it." Today there are more Big Data and Analytics jobs than ever, and IT professionals are willing to invest time and money in learning these skills because they see them as a sound career choice for the future.

The demand for data professionals is only just beginning. Srikanth Velamakanni, CEO and co-founder of Bangalore-based Fractal Analytics, forecasts that within the next few years the analytics market will grow from one-tenth to at least one-third of the size of the worldwide IT market.

Technology professionals with analytics experience are in demand because companies want to take advantage of Big Data. Analytics job listings on platforms such as Indeed and Dice have increased sharply over the past year, and other job platforms report similar growth. The reason is that more companies are adopting analytics and need staff with the relevant knowledge.

A survey by QuinStreet Inc. found that Big Data Analytics is becoming highly important for most firms in America: they are either already using it or plan to within the next two years. If you want to learn more about Big Data and how it is applied, you can look at online Data Engineering Courses.

2. Huge Job Opportunities and a Skills Gap to Close:

Demand for analytics professionals is rising, but there are not enough people with the right training to meet it. This shortage is global rather than confined to one region. Although Big Data Analytics is a lucrative field, many jobs remain unfilled because too few professionals have the right education. The McKinsey Global Institute projected that by 2018 the United States could face a shortage of up to 190,000 people with deep analytical skills, as well as 1.5 million managers and analysts who can understand Big Data and make decisions based on it.

If you want to learn more about Data Science, you can register for iCert Global's live Data Science Certification Training, which includes 24/7 support and lifetime access.

3. Salary Details:

The high demand for Data Analytics experts is pushing salaries up for skilled staff, making Big Data a viable career for those with the right skills. The trend is global: countries such as Australia and the U.K. are seeing strong salary growth.

The Institute of Analytics Professionals of Australia (IAPA) 2015 Skills and Salary Survey Report shows that data analysts earn an average salary of $130,000 per year, up 4% from the previous year. Over the past few years, the average data analyst salary has been around 184% of the average full-time employee salary in Australia. The growth of the profession is also reflected in IAPA's membership, which has passed 5,000 in Australia since the institute was founded in 2006.

According to Randstad, annual salary increases for analytics professionals in India are, on average, 50% higher than for other IT professionals. The Great Lakes Institute of Management's Indian Analytics Industry Salary Trend Report found that salaries for analytics professionals in India rose by 21% in 2015 compared with 2014, and that 14% of all analytics professionals earn more than Rs. 15 lakh per annum.

Big Data Analytics salaries in the U.K. are also rising quickly. A search on Itjobswatch.co.uk in early 2016 showed an average advertised salary of £62,500 for Big Data Analytics jobs, compared with £55,000 for the same jobs in early 2015: an increase of about 13.6% year on year.
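The year-on-year figure above is a simple percentage change. As a quick check, here is a minimal Python sketch that uses only the two salary figures quoted in the text; the function name `pct_change` is our own illustration.

```python
def pct_change(old: float, new: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100

# Average advertised U.K. Big Data Analytics salary, early 2015 vs. early 2016 (figures from the text)
salary_2015 = 55_000
salary_2016 = 62_500

growth = pct_change(salary_2015, salary_2016)
print(f"Year-on-year increase: {growth:.2f}%")  # prints "Year-on-year increase: 13.64%"
```

The exact result is 13.636...%, which rounds to about 13.6%.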

4. Big Data Analytics: Extremely Critical for Most Organizations

The 'Peer Research – Big Data Analytics' report indicates that Big Data Analytics is extremely important to most organizations, which see it as a driver of improvement and success.

According to the survey, approximately 45% of respondents believe Big Data Analytics will help companies gain better business insights, 38% want to use analytics to uncover sales and market opportunities, and more than 60% are using Big Data Analytics to strengthen their social media marketing. QuinStreet's findings also underline the importance of analytics, with 77% of respondents calling Big Data Analytics extremely significant.

A Deloitte survey titled Technology in the Mid-Market: Perspectives and Priorities found that most business leaders recognize the value of analytics: 65.2% of respondents already use some form of analytics to grow their businesses. The following image depicts their strong confidence in Big Data Analytics. To learn more about Big Data and its applications, see the Azure Data Engineering Course in India.

5. More Organizations Are Using Big Data Analytics:

New technologies are making it easier to perform sophisticated analysis on large and varied data sets. A survey by The Data Warehousing Institute (TDWI) found that more than one-third of respondents already use advanced analytics on Big Data for purposes such as Business Intelligence, Predictive Analytics, and Data Mining.

Because Big Data Analytics helps organizations outperform their peers, more and more companies are adopting the right tools at the right time. Most respondents to the 'Peer Research – Big Data Analytics' survey already have a plan to implement these tools, and the rest are working to develop one.

Apache Hadoop is the most popular framework. It is available both as free, open-source software and in paid commercial distributions, and companies choose whichever suits them. More than half of the respondents have started using, or plan to use, a version of Hadoop. About a quarter of respondents have chosen the free, open-source distribution, twice as many as chose a paid one.

6. Analytics: A Key Component of Decision Making

There is broad agreement that analytics is critical for most organizations. In the 'Analytics Advantage' survey, 96% of respondents said analytics will become more important over the next three years, because much data remains untapped and most organizations currently perform only basic analytics. Around 49% of respondents strongly agreed that analytics helps them make better decisions, and another 16% said it helps them make better key strategic plans.

7. The Emergence of Unstructured and Semi-Structured Data Analytics

The 'Peer Research – Big Data Analytics' survey indicates that businesses are rapidly expanding their analysis of unstructured and semi-structured data. 84% of participants reported that their businesses already process and analyze unstructured data such as weblogs, social media, emails, photos, and videos. The remaining participants said their businesses plan to start using these data sources within the next 12 to 18 months.

8. Big Data Analytics is Used Everywhere!

Demand for Big Data Analytics keeps growing because it is being applied in a wide range of fields. The following image shows the job opportunities in various fields where Big Data is applied.

9. Strong Market Projections for Big Data Analytics

The Nimbus Ninety survey named Big Data Analytics the most disruptive technology, meaning it is expected to have a huge impact within the next three years. Other market forecasts support this view:

• The IIA says Big Data Analytics solutions will help improve security by using machine learning, text mining, and other techniques to predict, detect, and prevent threats.

• The report The Future of Big Data Analytics – Global Market and Technologies Forecast – 2015-2020 projects that the global market will grow by 14.4% annually from 2015 through 2020.

• Within that market, the Apps and Analytics Technology segment is projected to grow at 28.2% annually, Cloud Technology at 16.1%, Computing Technology at 7.1%, and NoSQL Technology at 18.9% over the same period.
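To see what these annual rates imply over the whole forecast period, the sketch below compounds each rate over five years (2015 to 2020). It assumes growth compounds once per year, and because the forecast's base market size is not given here, it reports only growth multiples; the helper name `growth_multiple` is hypothetical.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Total growth factor after compounding annual_rate once per year for the given number of years."""
    return (1 + annual_rate) ** years

# Annual growth rates quoted in the forecast, compounded over 2015-2020 (5 years)
rates = {
    "Global Big Data Analytics market": 0.144,
    "Apps and Analytics Technology": 0.282,
    "Cloud Technology": 0.161,
    "Computing Technology": 0.071,
    "NoSQL Technology": 0.189,
}

for segment, rate in rates.items():
    print(f"{segment}: x{growth_multiple(rate, 5):.2f} by 2020")
```

Under this assumption, 14.4% a year works out to roughly 1.96 times the 2015 market size by 2020, while the 28.2% segment would grow about 3.46-fold.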

10. A Wide Range of Job Titles and Analytics Types:

From a career point of view, you have many options, both in the role you take and in the industry you work in. Because analytics is applied across so many industries, there are many different job roles to choose from, including:

• Big Data Analytics Business Consultant

• Big Data Analytics Architect

• Big Data Engineer

• Big Data Solution Architect

• Big Data Analyst

• Analytics Associate

• Business Intelligence and Analytics Consultant

• Metrics and Analytics Specialist

Big Data Analytics careers are diverse, and you can specialize in any of three types of data analytics, depending on the Big Data environment:

• Prescriptive Analytics

• Predictive Analytics

• Descriptive Analytics

Many organizations, including IBM, Microsoft, and Oracle, apply Big Data Analytics to their business needs, which creates numerous job opportunities at these companies.

Although analytics tools can be sophisticated, they do not eliminate the need for human judgment. Businesses need certified analytics professionals to interpret data, weigh business requirements, and deliver actionable insights, which is why such professionals are in high demand as companies seek to benefit from Big Data. If you want to become a specialist, you can enroll in a course such as iCert Global's Data Architect course, which covers Hadoop, MapReduce, Pig, Hive, and more. A professional with analytical skills can make sense of Big Data and become a productive employee, benefiting both their own career and the business.

How to obtain Cloud Computing certification?

We are an Education Technology company providing certification training courses to accelerate careers of working professionals worldwide. We impart training through instructor-led classroom workshops, instructor-led live virtual training sessions, and self-paced e-learning courses.

We have successfully conducted training sessions in 108 countries across the globe and enabled thousands of working professionals to enhance the scope of their careers.

Our enterprise training portfolio includes in-demand and globally recognized certification training courses in Project Management, Quality Management, Business Analysis, IT Service Management, Agile and Scrum, Cyber Security, Data Science, and Emerging Technologies. Download our Enterprise Training Catalog from https://www.icertglobal.com/corporate-training-for-enterprises.php and https://www.icertglobal.com/index.php

Popular Courses include:

- Project Management: PMP, CAPM, PMI RMP

- Quality Management: Six Sigma Black Belt, Lean Six Sigma Green Belt, Lean Management, Minitab, CMMI

- Business Analysis: CBAP, CCBA, ECBA

- Agile Training: PMI-ACP, CSM, CSPO

- Scrum Training: CSM

- DevOps

- Program Management: PgMP

- Cloud Technology: EXIN Cloud Computing

- Citrix Client Administration: Citrix Cloud Administration


Conclusion:

Big Data is becoming a major part of many industries, creating plenty of job opportunities and offering strong salaries for people with the right skills. As businesses rely more on data to make important decisions, demand is growing for professionals who can analyze and interpret that data. By learning about Big Data and earning a certification, for example through iCert Global’s Data Architect course, you can open up many career paths in tech and business. So if you enjoy working with data and solving problems, Big Data is a great field to explore for your future career!

Contact Us For More Information:

Visit: www.icertglobal.com Email: info@icertglobal.com


Best Data Engineering Projects for Hands-On Learning

Data engineering projects can be complex and require careful planning and collaboration. To get the best outcome, you need precise objectives and a clear understanding of how the components work together.

Many tools help data engineers streamline their work and keep things running smoothly. Even with these tools, however, making sure everything works correctly still takes a lot of time.

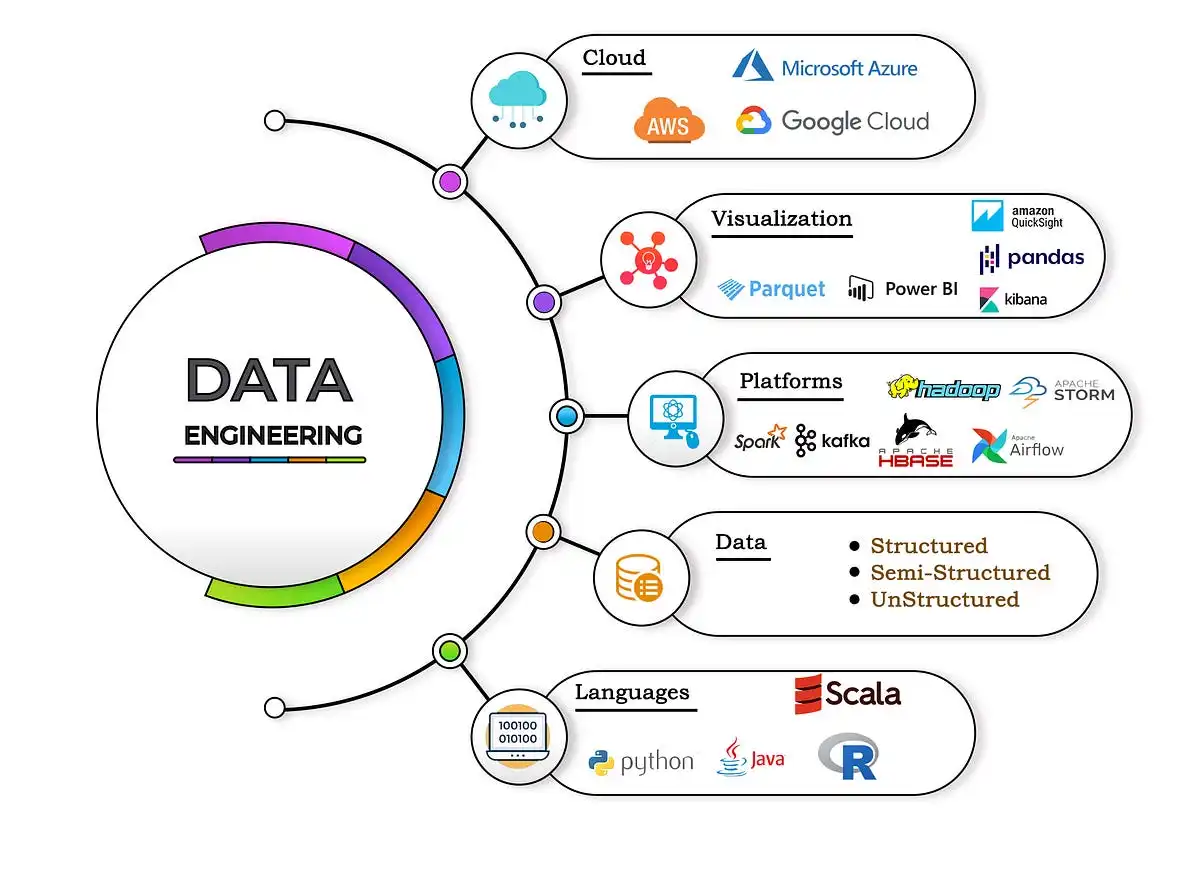

What Is Data Engineering?

Data engineering is the practice of structuring and preparing data so that other systems can use it easily. It usually involves creating or modifying databases and making sure data is ready to use whenever it is needed, regardless of how it was collected or stored.

Data engineers also examine data to discover patterns and apply these findings to build new tools and systems. In this way, they help companies turn raw data into valuable information, such as reports.

Top 10 Data Engineering Projects

Projects help beginners learn data engineering: they let you apply new skills and build a portfolio that impresses employers. Below are 10 data engineering projects for beginners, each with a brief overview, goals, the skills you will learn, and the tools you can use.

1. Data Collection and Storage System

Project Overview: Develop a system to collect data from websites and APIs. Clean the data and store it in a database.

Goals:

- Learn how to collect data from different sources.

- Understand how to clean and prepare data.

- Store data in a structured way using a database.

Skills You’ll Learn: API usage, web scraping, data cleaning, SQL.

Tools & Technologies: Python (Requests, BeautifulSoup), SQL databases (MySQL, PostgreSQL), Pandas.
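As a starting point, the collect-clean-store flow can be sketched with the Python standard library. This is a minimal sketch under stated assumptions: the JSON payload is hypothetical and stands in for an API response (in practice you might fetch it with Requests or scrape HTML with BeautifulSoup), and the built-in `sqlite3` module stands in for MySQL or PostgreSQL. The table and field names are illustrative.

```python
import json
import sqlite3

# Hypothetical JSON payload standing in for an API response
RAW_PAYLOAD = """
[
  {"id": 1, "name": "  Alice ", "city": "london"},
  {"id": 2, "name": "Bob", "city": null},
  {"id": 1, "name": "Alice", "city": "London"},
  {"id": 3, "name": "Chen", "city": " paris "}
]
"""

def clean(records):
    """Trim text, title-case cities, drop rows missing a city, and keep the first remaining row per id."""
    seen = set()
    cleaned = []
    for rec in records:
        if rec.get("city") is None or rec["id"] in seen:
            continue
        seen.add(rec["id"])
        cleaned.append((rec["id"], rec["name"].strip(), rec["city"].strip().title()))
    return cleaned

def store(rows, conn):
    """Create the target table if needed and insert the cleaned rows."""
    conn.execute("CREATE TABLE IF NOT EXISTS people (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
    conn.executemany("INSERT INTO people (id, name, city) VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # SQLite stands in for MySQL/PostgreSQL here
store(clean(json.loads(RAW_PAYLOAD)), conn)
print(conn.execute("SELECT * FROM people ORDER BY id").fetchall())
```

Keeping cleaning separate from storage makes each step easy to test on its own and to swap out, for example replacing SQLite with a PostgreSQL connection later.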

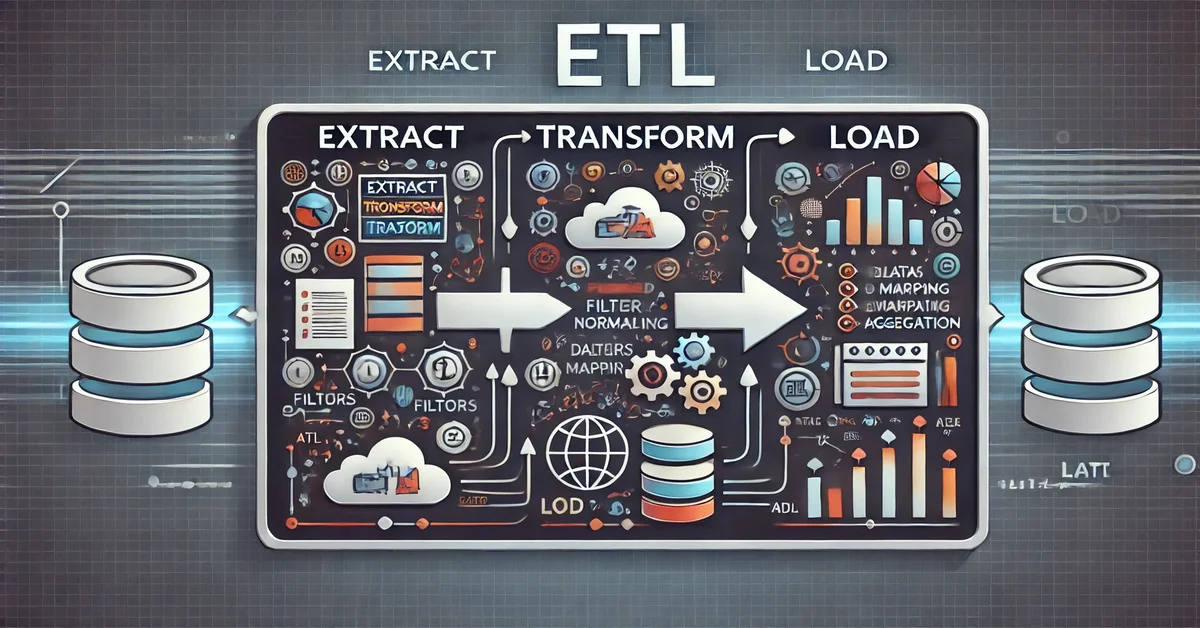

2. ETL Pipeline

Project Overview: Build an ETL (Extract, Transform, Load) pipeline. This pipeline will take data from a source, process it, and then load it into a database.

Goals:

- Understand ETL processes and workflows.

- Learn how to transform and organize data.

- Automate the process of moving data.

Skills You’ll Learn: Data modeling, batch processing, automation.

Tools & Technologies: Python, SQL, Apache Airflow.
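The three ETL stages map naturally onto three functions. The sketch below is a minimal, standard-library version under assumed inputs: the CSV source, column names, and cleaning rules are hypothetical, and SQLite stands in for the target database. In the project itself, Apache Airflow would typically schedule and orchestrate these steps as tasks; this sketch simply calls them in order and does not use Airflow.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline might read files, APIs, or another database
SOURCE_CSV = """order_id,amount,currency,order_date
101,20.50,usd,2024-01-05
102,,usd,2024-01-05
103,15.00,USD,2024-01-06
"""

def extract(text):
    """Extract: parse CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows without an amount, cast types, and normalize currency codes."""
    out = []
    for row in rows:
        if not row["amount"]:
            continue
        out.append((int(row["order_id"]), float(row["amount"]),
                    row["currency"].upper(), row["order_date"]))
    return out

def load(rows, conn):
    """Load: write transformed rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT, order_date TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()

def run_pipeline(text, conn):
    """Run extract, transform, and load in sequence."""
    load(transform(extract(text)), conn)

conn = sqlite3.connect(":memory:")
run_pipeline(SOURCE_CSV, conn)
print(conn.execute("SELECT order_id, amount, currency FROM orders ORDER BY order_id").fetchall())
```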

3. Real-Time Data Processing System

Project Overview: Develop a system to handle live data from social media and IoT devices.

Goals:

- Learn the basics of real-time data processing.

- Work with streaming data.

- Perform simple analysis on live data.

Skills You’ll Learn: Stream processing, real-time analytics, event-driven programming.

Tools & Technologies: Apache Kafka, Apache Spark Streaming.
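A core idea in stream processing is windowing: grouping an unbounded stream into time buckets and emitting a result as each bucket closes. The sketch below shows a tumbling (fixed, non-overlapping) window in plain Python. It assumes events arrive in timestamp order and uses a hard-coded list in place of messages consumed from Apache Kafka; stream processors such as Spark Streaming offer built-in window operations and fault tolerance, which this sketch does not attempt. The function name and event types are hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator, Tuple

Event = Tuple[int, str]  # (timestamp in seconds, event type)

def tumbling_window_counts(events: Iterable[Event], window_seconds: int) -> Iterator[Tuple[int, Counter]]:
    """Group an ordered event stream into fixed, non-overlapping windows and emit counts per window."""
    current_window = None
    counts = Counter()
    for ts, kind in events:
        window_start = ts - ts % window_seconds
        if current_window is not None and window_start != current_window:
            yield current_window, counts  # window closed: emit its result
            counts = Counter()
        current_window = window_start
        counts[kind] += 1
    if current_window is not None:
        yield current_window, counts  # flush the last open window

# Simulated stream standing in for messages consumed from a topic
stream = [(0, "like"), (3, "share"), (9, "like"), (10, "like"), (14, "comment"), (21, "like")]
for start, counts in tumbling_window_counts(stream, window_seconds=10):
    print(start, dict(counts))
```

Because it is a generator, the function emits each window's counts as soon as the next window begins, which mirrors how a streaming job reports results incrementally rather than after reading all the data.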

4. Data Warehouse Solution

Project Overview: Create a data warehouse that consolidates data from various sources, making reporting and analysis easier.

Goals:

- Learn how data warehouses work.

- Design data structures for organizing and analyzing data.

- Work with popular data warehouse tools.

Skills You’ll Learn: Data warehousing, OLAP (Online Analytical Processing), data modeling.

Tools & Technologies: Amazon Redshift, Google BigQuery, Snowflake.
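Data warehouses commonly organize data in a star schema: a central fact table of measurable events linked to dimension tables that describe them. The sketch below builds a tiny star schema and runs an OLAP-style roll-up query. It uses SQLite purely as a local stand-in for Amazon Redshift, Google BigQuery, or Snowflake; the table names and sample rows are hypothetical, and the SQL is generic rather than tuned for any of those platforms.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # local stand-in for a cloud data warehouse
conn.executescript("""
-- Dimension tables describe the "what" and "when" of each fact
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
-- The fact table holds measurable events keyed by dimension ids
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    revenue REAL
);
INSERT INTO dim_product VALUES (1, 'Books'), (2, 'Games');
INSERT INTO dim_date VALUES (1, 2024, 1), (2, 2024, 2);
INSERT INTO fact_sales VALUES (1, 1, 30.0), (2, 1, 50.0), (1, 2, 20.0), (2, 2, 70.0), (1, 2, 10.0);
""")

# OLAP-style roll-up: total revenue by category and month
query = """
SELECT p.category, d.year, d.month, SUM(f.revenue) AS revenue
FROM fact_sales f
JOIN dim_product p ON f.product_id = p.product_id
JOIN dim_date d ON f.date_id = d.date_id
GROUP BY p.category, d.year, d.month
ORDER BY p.category, d.year, d.month
"""
for row in conn.execute(query):
    print(row)
```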

5. Data Quality Monitoring System

Project Overview: Create a system to identify and report data problems. This includes missing values, duplicate records, and inconsistencies.

Goals:

- Understand why data quality is important.

- Learn how to track and fix data problems.

- Create reports to monitor data quality.

Skills You’ll Learn: Data quality assessment, reporting, automation.

Tools & Technologies: Python, SQL, Apache Airflow.
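The three problems named in the overview (missing values, duplicate records, and inconsistencies) can each be checked with a few lines of code. Below is a minimal Python sketch; the field names, key column, and allowed-value set are hypothetical. In a fuller project, a scheduler such as Apache Airflow could run checks like these after each load and route the report to a dashboard or alert, which is not shown here.

```python
from collections import Counter

def quality_report(rows, key, required, allowed_values):
    """Count missing required fields, duplicate keys, and values outside an allowed set."""
    missing = Counter()
    for row in rows:
        for field in required:
            if row.get(field) in (None, ""):
                missing[field] += 1
    key_counts = Counter(row[key] for row in rows)
    duplicates = sorted(k for k, n in key_counts.items() if n > 1)
    invalid = [
        (row[key], field, row[field])
        for row in rows
        for field, allowed in allowed_values.items()
        if row.get(field) not in (None, "") and row[field] not in allowed
    ]
    return {"missing": dict(missing), "duplicate_keys": duplicates, "invalid_values": invalid}

# Hypothetical customer records with deliberate problems
rows = [
    {"id": 1, "email": "a@example.com", "country": "US"},
    {"id": 2, "email": "", "country": "UK"},
    {"id": 2, "email": "b@example.com", "country": "uk"},
    {"id": 3, "email": None, "country": "DE"},
]
report = quality_report(rows, key="id", required=["email", "country"],
                        allowed_values={"country": {"US", "UK", "DE"}})
print(report)
```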

6. Log Analysis Tool

Project Overview: Build a tool to analyze log files from websites or apps. This tool will help identify patterns in user behavior and system performance.

Goals:

- Learn to read and analyze log data.

- Identify trends and patterns.

- Show results using data visualization.

Skills You’ll Learn: Log analysis, pattern recognition, data visualization.

Tools & Technologies: Elasticsearch, Logstash, Kibana (ELK stack).
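Before logs can be analyzed, each line must be parsed into fields. In the ELK stack, Logstash typically handles parsing, Elasticsearch stores and indexes the results, and Kibana visualizes them. The sketch below does the parsing and aggregation in plain Python instead, assuming a simplified Common Log Format; the regular expression and sample lines are illustrative, and real log formats vary.

```python
import re
from collections import Counter

# Regex for a simplified Common Log Format line (an assumption; real log formats vary)
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\d+|-)'
)

def analyze(lines):
    """Parse log lines and summarize status codes, the two busiest paths, and unparseable lines."""
    statuses, paths = Counter(), Counter()
    skipped = 0
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            skipped += 1
            continue
        statuses[match["status"]] += 1
        paths[match["path"]] += 1
    return statuses, paths.most_common(2), skipped

sample = [
    '10.0.0.1 - - [10/Oct/2024:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '10.0.0.2 - - [10/Oct/2024:13:55:40 +0000] "GET /login HTTP/1.1" 500 512',
    '10.0.0.1 - - [10/Oct/2024:13:56:01 +0000] "GET /index.html HTTP/1.1" 200 2326',
    'malformed line',
]
statuses, top_paths, skipped = analyze(sample)
print(statuses, top_paths, skipped)
```

Counting unparseable lines rather than silently dropping them is a cheap way to notice when a log format changes.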

7. Recommendation System

Project Overview: Create a system that recommends items to users based on their past choices and the preferences of similar users.

Goals:

- Understand how recommendation algorithms work.

- Use filtering techniques to suggest relevant content.

- Measure how effective your recommendations are.

Skills You’ll Learn: Machine learning, algorithm implementation, evaluation metrics.

Tools & Technologies: Python (Pandas, Scikit-learn), Apache Spark MLlib.
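One common approach is user-based collaborative filtering: find users with similar tastes and predict ratings for items the target user has not seen. The sketch below implements it in plain Python with cosine similarity over co-rated items; the ratings data and function names are hypothetical. At scale, Apache Spark MLlib's ALS-based collaborative filtering is one alternative, and effectiveness is usually measured on held-out ratings, which this sketch omits.

```python
from math import sqrt

# Hypothetical user -> {item: rating} data
ratings = {
    "ana":  {"book_a": 5, "book_b": 3, "book_c": 4},
    "ben":  {"book_a": 4, "book_b": 3, "book_d": 5},
    "cara": {"book_b": 2, "book_c": 5, "book_d": 1},
}

def cosine(u, v):
    """Cosine similarity between two users over the items both have rated."""
    common = set(u) & set(v)
    if not common:
        return 0.0
    dot = sum(u[i] * v[i] for i in common)
    norm_u = sqrt(sum(u[i] ** 2 for i in common))
    norm_v = sqrt(sum(v[i] ** 2 for i in common))
    return dot / (norm_u * norm_v)

def recommend(user, ratings, k=2):
    """Predict ratings for unseen items as similarity-weighted averages; return the top k."""
    scores, weights = {}, {}
    for other, their in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], their)
        for item, rating in their.items():
            if item in ratings[user]:
                continue  # only recommend items the user has not rated
            scores[item] = scores.get(item, 0.0) + sim * rating
            weights[item] = weights.get(item, 0.0) + sim
    predicted = {item: scores[item] / weights[item] for item in scores if weights[item] > 0}
    return sorted(predicted.items(), key=lambda kv: kv[1], reverse=True)[:k]

print(recommend("ana", ratings))
```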

8. Sentiment Analysis on Social Media Data

Project Overview: Develop a tool that analyzes social media posts and classifies them as positive, negative, or neutral.

Goals:

- Work with text-based data.

- Learn how sentiment analysis works.

- Display the results visually.

Skills You’ll Learn: Natural Language Processing (NLP), sentiment analysis, data visualization.

Tools & Technologies: Python (NLTK, TextBlob), Jupyter Notebooks.
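Libraries such as NLTK (for example, its VADER analyzer) and TextBlob provide ready-made sentiment scoring. To show the underlying idea, the sketch below implements a tiny lexicon-based classifier in plain Python: it counts positive and negative words and flips a word's polarity when it follows a negator such as "not". The word lists are hypothetical and far too small for real use.

```python
import re

# Tiny hypothetical lexicon for illustration; real projects use larger lexicons or trained models
POSITIVE = {"love", "great", "happy", "excellent", "good"}
NEGATIVE = {"hate", "bad", "terrible", "sad", "awful"}
NEGATORS = {"not", "never", "no"}

def classify(post: str) -> str:
    """Label a post positive, negative, or neutral by summing word polarities, flipping after a negator."""
    tokens = re.findall(r"[a-z']+", post.lower())
    score = 0
    for i, tok in enumerate(tokens):
        polarity = int(tok in POSITIVE) - int(tok in NEGATIVE)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity  # "not good" counts as negative
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

posts = ["I love this phone, great battery!", "Not good. Terrible support.", "Arrived on Tuesday."]
for post in posts:
    print(classify(post), "|", post)
```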

9. IoT Data Analysis

Project Overview: Analyze data from smart devices (like home sensors) to find usage trends, detect unusual activity, or predict maintenance needs.

Goals:

- Handle data from IoT devices.

- Work with time-series data.

- Detect issues and predict trends.

Skills You’ll Learn: Time-series analysis, anomaly detection, predictive modeling.

Tools & Technologies: Python (Pandas, NumPy), TensorFlow, Apache Kafka.
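A simple baseline for spotting unusual sensor readings is a rolling z-score: compare each new reading with the mean and standard deviation of the readings just before it. This is a deliberately simple statistical technique swapped in for illustration, not the TensorFlow-based modeling the project lists; the window size, threshold, and sample temperatures are assumptions. In a streaming setup, the readings could arrive from an Apache Kafka topic instead of a list.

```python
import statistics


def detect_anomalies(readings, window=5, threshold=3.0):
    """Flag readings far from the mean of the preceding `window` readings.

    Returns a list of (index, value) pairs for the flagged readings.
    """
    anomalies = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mean = statistics.mean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            # Perfectly flat history: any change at all counts as unusual.
            is_anomaly = readings[i] != mean
        else:
            is_anomaly = abs(readings[i] - mean) / stdev > threshold
        if is_anomaly:
            anomalies.append((i, readings[i]))
    return anomalies


if __name__ == "__main__":
    # Hypothetical room temperatures in degrees Celsius, one per minute.
    temps = [21.0, 21.2, 20.9, 21.1, 21.0, 21.3, 35.0, 21.1, 21.0, 20.8]
    print(detect_anomalies(temps))  # [(6, 35.0)]
```

Because each reading is compared only with earlier readings, the check can run as data arrives, which suits time-series data from IoT devices.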

10. Climate Data Analysis Platform

Project Overview: Create a system to gather, process, and display climate data so that trends and unusual patterns are easy to spot.

Goals:

- Work with large climate datasets.

- Learn to visualize environmental data.

- Present complex data in an easy-to-understand way.

Skills You’ll Learn: Data processing, visualization, environmental analysis.

Tools & Technologies: Python (Matplotlib, Seaborn), R, D3.js.
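Spotting a long-term trend in climate data often starts with two steps: aggregate noisy readings into yearly averages, then fit a straight line to see the average change per year. The sketch below does both with ordinary least squares in plain Python; the records are made-up illustrative values, not real climate measurements, and the function names are hypothetical. The resulting yearly series is what you would then plot with Matplotlib or Seaborn.

```python
from collections import defaultdict


def yearly_means(records):
    """Average (year, value) records per year, returned sorted by year."""
    sums, counts = defaultdict(float), defaultdict(int)
    for year, value in records:
        sums[year] += value
        counts[year] += 1
    return [(year, sums[year] / counts[year]) for year in sorted(sums)]


def linear_trend(points):
    """Ordinary least-squares slope of value vs. year (units per year)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var = sum((x - mean_x) ** 2 for x, _ in points)
    return cov / var


if __name__ == "__main__":
    # Made-up (year, temperature in degrees Celsius) readings for illustration only.
    readings = [(2020, 14.0), (2020, 16.0), (2021, 15.5),
                (2021, 15.5), (2022, 16.0), (2022, 16.0)]
    means = yearly_means(readings)
    print(means)                # [(2020, 15.0), (2021, 15.5), (2022, 16.0)]
    print(linear_trend(means))  # 0.5
```

Averaging per year before fitting keeps years with many readings from dominating the trend line.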

How to obtain Quality Management certification?

We are an Education Technology company providing certification training courses to accelerate the careers of working professionals worldwide. We impart training through instructor-led classroom workshops, instructor-led live virtual training sessions, and self-paced e-learning courses.

We have successfully conducted training sessions in 108 countries across the globe and enabled thousands of working professionals to enhance the scope of their careers.

Our enterprise training portfolio includes in-demand and globally recognized certification training courses in Project Management, Quality Management, Business Analysis, IT Service Management, Agile and Scrum, Cyber Security, Data Science, and Emerging Technologies. Download our Enterprise Training Catalog from https://www.icertglobal.com/corporate-training-for-enterprises.php and https://www.icertglobal.com/index.php

Popular Courses include:

- Project Management: PMP, CAPM, PMI RMP

- Quality Management: Six Sigma Black Belt, Lean Six Sigma Green Belt, Lean Management, Minitab, CMMI

- Business Analysis: CBAP, CCBA, ECBA

- Agile Training: PMI-ACP, CSM, CSPO

- Scrum Training: CSM

- DevOps

- Program Management: PgMP

- Cloud Technology: Exin Cloud Computing

- Citrix Client Administration: Citrix Cloud Administration


Conclusion

Want to grow professionally in data engineering? The Professional Certificate Program in Data Engineering from iCert Global and Purdue University enables you to become proficient in big data, cloud computing, and data pipelines.

Develop skills in Apache Spark, Hadoop, AWS, and Python through hands-on projects, live case studies, and training by experts. This certification builds your skills and increases your credibility as a software professional, data engineer, or data analyst, helping you become top talent in the industry.

Contact Us For More Information:

Visit: www.icertglobal.com Email: info@icertglobal.com


Exploring Data Processing: Key Types and Examples

Every time you use the internet to learn about something, make an online payment, order food, or do anything else, data is created. Social media, online shopping, and streaming videos have all contributed to a huge increase in the amount of data we generate. To make sense of all this data, we use something called data processing. Let’s explore what data processing is and how it works.

What Is Data Processing?

Raw data, or data in its unorganized form, isn’t very helpful to anyone. Data processing is the process of turning this raw data into useful information. This is done in a series of steps by a team of people, like data scientists and engineers, who work together in a company. First, the raw data is collected. Then, it’s filtered, sorted, analyzed, and stored before being shown in an easy-to-understand format.

Data processing is very important for businesses because it helps them make better decisions and stay ahead of the competition. When the data is turned into charts, graphs, or reports, people in the company can easily understand and use it.

Now that we know what data processing is, let’s look at how the data processing cycle works.

Step 1: Collection

The first step in the data processing cycle is collecting raw data. The data you gather directly affects the final results, so it should come from reliable and accurate sources if the results are to be correct and useful. Raw data can include things like financial figures, website information, company profit or loss, and user activity.

Step 2: Preparation

Next comes data preparation, also known as data cleaning. In this step, the raw data is sorted and checked for errors, duplicates, missing details, or incorrect information, and anything unnecessary is removed. The goal is to get the data into the best possible shape for the next steps, so that only good-quality data is used and nothing distorts the final results.

Step 3: Input

Once the data is ready, it has to be turned into a format that computers can understand. This is called the input step. The data can be entered into the computer using a keyboard, scanner, or other tools that send the data to the system.

Step 4: Data Processing

This step is where the actual work happens. The input data is processed using different methods, such as machine learning or artificial intelligence (AI) algorithms, to turn it into useful information. The process can look a little different depending on where the data comes from (like databases or connected devices) and what it will be used for.

Step 5: Output

After processing, the data is shown to the user in an easy-to-understand form, like graphs, tables, videos, documents, or even sound. This output can be saved and used later in another round of data processing if needed.

Step 6: Storage

The final step is storing the data. In this step, the processed data is saved in a place where it can be quickly accessed later. This storage also makes it easy to use the data again in the next data processing cycle.
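The six steps can be strung together as a tiny pipeline. The sketch below is a hypothetical illustration, not a prescribed implementation: the CSV content, function names, and cleaning rules are assumptions (for instance, it treats identical rows as duplicates, which may not hold for real purchase data), and an in-memory SQLite database stands in for whatever storage a real system would use.

```python
import csv
import io
import sqlite3

# Step 1 (collection): hypothetical raw CSV, e.g. exported from a web form.
RAW_CSV = """customer,amount
alice,120.50
bob,
alice,120.50
carol,80
dave,abc
"""


def collect(raw_text):
    """Step 1: read raw rows as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def prepare(rows):
    """Step 2: drop rows with missing or non-numeric amounts, and exact duplicates."""
    seen, clean = set(), []
    for row in rows:
        key = (row["customer"], row["amount"])
        if not row["amount"] or key in seen:
            continue
        try:
            float(row["amount"])
        except ValueError:
            continue  # e.g. "abc" is not a valid amount
        seen.add(key)
        clean.append(row)
    return clean


def to_input(rows):
    """Step 3: convert text fields into machine-friendly types."""
    return [(row["customer"], float(row["amount"])) for row in rows]


def process(records):
    """Step 4: aggregate total spend per customer."""
    totals = {}
    for customer, amount in records:
        totals[customer] = totals.get(customer, 0.0) + amount
    return totals


def output(totals):
    """Step 5: present results in a human-readable form."""
    return [f"{name}: {total:.2f}" for name, total in sorted(totals.items())]


def store(totals, conn):
    """Step 6: save results so a later cycle can reuse them."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS totals (customer TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO totals VALUES (?, ?)", totals.items())
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    totals = process(to_input(prepare(collect(RAW_CSV))))
    print("\n".join(output(totals)))  # alice: 120.50, then carol: 80.00
    store(totals, conn)
```

Keeping each step in its own function mirrors the cycle: any step can be swapped out (for example, a different storage backend) without touching the others.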

Now that we understand data processing and its steps, let's take a look at the different types of data processing.

Data processing is the way we take raw data (like numbers, facts, or information) and turn it into something useful, like a report or an answer. It helps us organize, sort, and understand the data better.

Understanding Data Processing and Its Different Types

Types of Data Processing:

- Manual Data Processing:
  - This is when people process data entirely by hand, like writing things down on paper or doing calculations in their heads.
  - Example: Doing math homework without a computer or calculator.

- Mechanical Data Processing:
  - This uses simple machines, like early calculators or typewriters, to help process data.
  - Example: Using a basic adding machine to do math.

- Electronic Data Processing:
  - This is when computers and software are used to process data quickly and accurately.
  - Example: Using a computer to calculate grades in a school.

- Real-time Data Processing:
  - Data is processed immediately, as it is generated.
  - Example: Watching live sports scores online.

- Batch Data Processing:
  - Data is collected over a period of time and then processed all at once, instead of as it arrives.
  - Example: Calculating everyone's school grades at the end of the semester.

- Distributed Data Processing:
  - This is when data is processed by multiple computers working together.
  - Example: Using cloud services where data is stored and processed on many different computers.

- Online Transaction Processing (OLTP):
  - Each transaction is processed as soon as it is entered into a system, like when you buy something online.
  - Example: Making an online purchase where your payment is processed right away.
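The difference between batch and real-time processing is mostly about when results become available. In this minimal sketch with hypothetical order amounts and function names, the batch function returns one result after all the data has been collected, while the streaming generator produces an updated result after every event.

```python
from typing import Iterable, Iterator, List


def batch_total(orders: List[float]) -> float:
    """Batch processing: wait until all orders are collected, then process them at once."""
    return sum(orders)


def streaming_totals(orders: Iterable[float]) -> Iterator[float]:
    """Real-time processing: update the running total as each order arrives."""
    total = 0.0
    for amount in orders:
        total += amount
        yield total  # a result is available immediately after every event


if __name__ == "__main__":
    orders = [12.5, 30.0, 7.5]
    print(batch_total(orders))             # 50.0
    print(list(streaming_totals(orders)))  # [12.5, 42.5, 50.0]
```

Both approaches reach the same final answer; the streaming version simply makes intermediate answers available sooner, at the cost of processing each event individually.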

What is Data Processing: Methods of Data Processing

There are three main ways to process data: manual, mechanical, and electronic.

Manual Data Processing

Manual data processing is done completely by hand. People collect, filter, sort, and calculate the data without using any machines or software. It’s a low-cost method that doesn’t need special tools, but it has some downsides: it is error-prone, slow, and labor-intensive.

Mechanical Data Processing

In mechanical data processing, simple machines and devices such as calculators, typewriters, or printing presses are used to help process the data. This method produces fewer errors than manual processing but can still be slow and cumbersome when there’s a lot of data.

Electronic Data Processing

This is the most modern way to process data, using computers and software programs. Instructions are given to the software to process the data and create results. Although it’s the most expensive method, it’s also the fastest and most accurate, making it the best option for large amounts of data.

Examples of Data Processing

Data processing happens all around us every day, even if we don’t notice it. Here are a few real-life examples:

- Stock trading software turns millions of pieces of stock data into a simple graph.

- An online store looks at what you’ve searched for before to recommend similar products.

- A digital marketing company uses information about people’s locations to create ads for certain areas.

- A self-driving car uses data from sensors to spot pedestrians and other cars on the road.

Moving From Data Processing to Analytics

One of the biggest changes in today’s business world is the rise of big data. Although managing all this data can be tough, the benefits are huge. To stay competitive, companies need to have a good data processing plan.

After data is processed, the next step is analytics. Analytics is when you find patterns in the data and understand what they mean. While data processing changes the data into a usable format, analytics helps us make sense of it.

Whatever processes data scientists use, the sheer volume of data and the need to understand it mean we need better ways to store and access all that information. This leads us to the next part!


Conclusion

The future of data processing can be summed up in one short phrase: cloud computing.

While the six steps of data processing stay the same, cloud technology has greatly improved how we process data. It gives data analysts and scientists fast, scalable, and cost-effective ways to handle data. So, the same technology that helped create big data and the challenges of handling it also provides the solution: the cloud can scale to handle the large volumes of data that big data involves.



A Deep Dive into the Product Owner’s Key Responsibilities

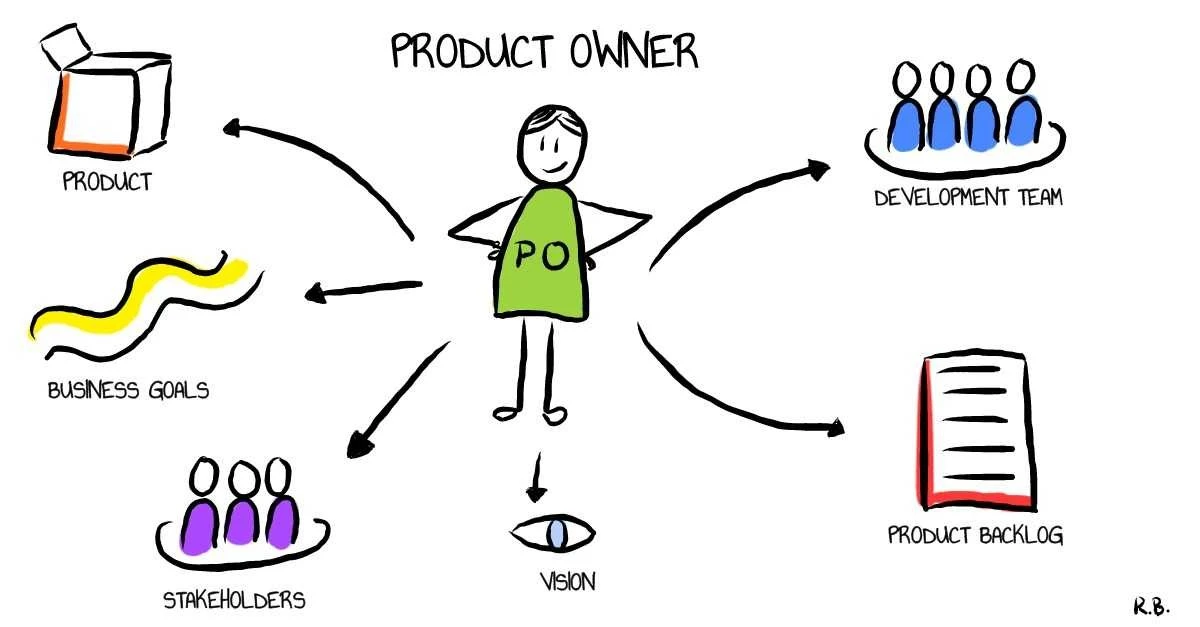

In Scrum, the Product Owner is responsible for making sure a project succeeds. They manage the "product backlog," a list of the tasks needed to improve or finish the product, and use it to ensure the product delivers the greatest possible value to customers. Scrum is an Agile framework that helps teams communicate and collaborate better.

The Product Owner is a key member of the Scrum team. Their main job is to outline what the product should be and create the product backlog. They are the go-to person for the development team, sharing which features the product needs based on customer requests. The Product Owner makes sure the development team knows what is most important to work on and answers any questions the team has about what belongs in the product. Ultimately, the Product Owner makes sure the product being developed gives great value to its users.

What Does a Product Owner Do?

A Product Owner decides what a product should be and how it works. They base these decisions on the needs of customers and other key people, known as stakeholders, and use research data to determine which features are most essential.

The Product Owner creates a list called the "product backlog," which includes all the required features and tasks for the product. They prioritize the items on this list and keep it updated as customer feedback and business needs change. The Product Owner works closely with developers, designers, and marketers to make sure the product is delivered on time, meets customer needs, and stays within budget.

Product Owner Roles

The main job of a Product Owner is to make sure that product development creates the most value for the company. This means working closely with the development team to make sure the product meets the right specifications and is finished on time. The Product Owner manages the product backlog, the list of tasks the team needs to complete.

Here's what they do with the backlog:

• Ensure the backlog is well-defined and every item is written clearly.

• Prioritize tasks so that the highest-priority ones are done first.

• Ensure the work meets the customer's expectations and goals.

• Give the development team constant feedback.

• Ensure that all team members know what needs to be done.

Product Owner Skills

Some of the most important skills a Product Owner must possess are:

• Domain Knowledge: The Product Owner needs to know the field and how users will use the product.

• Leadership and Communication: They must be able to communicate effectively with all stakeholders and guide the team toward the product objectives.

• Optimizing Value: The Product Owner must make sure the product delivers the most value to customers as quickly as possible.

• Reading Customer Needs: They must interpret what customers are looking for and ensure that the development team is aware of these needs.

Product Owner's Responsibilities

• Product Backlog: The Product Owner creates and maintains the product backlog. The list must be prioritized by importance and urgency, and it is updated frequently as the product evolves.

• Stages of Development: The Product Owner stays involved throughout product development. They update the team on any changes in customer needs or product goals, and they join meetings to review progress and look for ways to improve.

• Serving as a Single Point of Contact: The Product Owner is the single point of contact for any inquiries regarding the product, ensuring everyone is aligned.

• Customer Goals Communication: The Product Owner must communicate the customer's requirements clearly to all stakeholders in the project.

• Preempting Customer Needs: They should anticipate what customers will need next, even before customers ask for it, by studying market dynamics and the customer journey.

• Progress Evaluation: The Product Owner reviews every step of the product development process and provides feedback on how it can be improved.

Product Owner Skills

To be a successful Product Owner, you need a combination of skills that will help you manage a product from the concept phase all the way through its release to customers.

Some of the key skills you should possess as a Product Owner include:

• Product Management

The Product Owner must be able to determine which features and requirements are most vital for the product. They must also understand what customers need and spot market opportunities for new product concepts.

• Agile Development

A Product Owner must understand how Agile development works, including practices such as Scrum, Kanban, and Lean. Knowing these practices enables the Product Owner to prioritize the product backlog (the to-do list), schedule reviews, and collaborate with the development team.

Product Owner Stances

A Product Owner plays many key roles to help ensure a successful product. Six significant stances (or roles) a Product Owner can take are as follows:

1. Visionary

• The Product Owner develops and communicates a clear product vision that aligns with the company's objectives.

• They generate new product ideas and make sure everyone understands and believes in them.

• They balance short- and long-term objectives, deciding what will benefit the product and the organization down the road.

2. Collaborator

• The Product Owner teams up with developers, designers, and marketers to make sure the product is built right and delivered on schedule.

• They make sure the team communicates effectively, fostering trust and collaboration among members.

• They involve everyone in sharing ideas and giving feedback, which improves the product.

3. Customer Representative

• The Product Owner represents the customer, making sure the customer's needs and expectations are part of the product plan.

• They use their understanding of customer needs and wants to guide the product.

• They seek feedback from customers and other stakeholders to continually improve the product.

4. Decision Maker

• The Product Owner makes key decisions about the product plan, what to build next, and how to use resources.

• They use data and feedback to make informed choices and to track how the product is performing.

• They mediate conflicting requirements from customers, the team, and other parties.

5. Experimenter

• The Product Owner encourages the team to try out and test ideas to find what works best.

• They use data and feedback to guide decisions and improve the product.

• They help the team test concepts and learn from the results.

• They update the product plan based on findings from experiments and user tests.

6. Influencer

• The Product Owner builds strong relationships with stakeholders and the development team, which helps win support for the product vision.

• They articulate the product vision in a way that inspires and excites others.

• They negotiate and collaborate with various groups to find solutions that work for everyone and support the product's goals.

Difference Between a Scrum Master and a Product Owner

The biggest difference between a Scrum Master and a Product Owner is how they work with the team and the stakeholders (the people who have an interest in the project).

• A Scrum Master is an expert in Agile approaches, ways of working that let teams make progress in small, incremental steps. The Scrum Master makes sure the team follows these practices and communicates effectively.

• A Product Owner decides which features the product must have. They are responsible for making sure the product meets customer requirements and stays aligned with business objectives.

How a Product Owner Interacts with the Scrum Team

A Product Owner works with the Scrum team in several ways to help it deliver a successful product:

1. Helping define and refine the product backlog:

The Product Owner works with the team to determine what should be accomplished and in what order.

2. Giving feedback during Sprint reviews:

The Product Owner checks the team's work during reviews. They also suggest improvements for the next steps.

3. Answering questions in Sprint Planning and daily meetings:

The Product Owner is available to answer questions and give the team accurate information.

4. Ensuring that the team understands the product vision and goals:

The Product Owner shares the bigger picture and keeps the team informed about what the product should achieve.

Why Does a Scrum Team Require a Product Owner?

A Product Owner has a vital role in leading the Scrum team:

1. Defining and prioritizing the product backlog:

The Product Owner determines what the team should do first and what's most valuable for the product.

2. Ensuring the team works on the most valuable features:

The Product Owner makes sure the team works on features that customers will value and that will help the business succeed.

3. Serving as the contact point for stakeholders:

The Product Owner works with stakeholders (such as customers or business managers) and makes sure their requirements are reflected in the product.

4. Deciding and leading the team:

The Product Owner decides what needs to be done and makes sure the team stays on track toward its objectives.

5. Maintaining the product roadmap:

The Product Owner refines the product plan so that it keeps pace with evolving customer requirements and shifts in the market.

How Is a Product Owner Different from a Scrum Master or Project Manager?

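Strictly speaking, Scrum defines three accountabilities: the Product Owner, the Scrum Master, and the Developers. The Project Manager is a traditional role outside the Scrum framework, but comparing all three helps clarify where each one's responsibilities lie: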

• Product Owner:

The Product Owner is responsible for specifying what the product requires, prioritizing which features are most crucial, and ensuring the product is aligned with customer requirements and business objectives.

• Scrum Master:

The Scrum Master makes sure the team applies the Scrum process correctly. They help remove impediments and promote collaboration so the team produces quality work.

• Project Manager:

A Project Manager oversees the whole project, including budget, timeline, and risks. They make sure the project is completed on schedule and within budget.

How to obtain Big Data certification?

We are an Education Technology company providing certification training courses to accelerate the careers of working professionals worldwide. We deliver training through instructor-led classroom workshops, instructor-led live virtual training sessions, and self-paced e-learning courses.

We have successfully conducted training sessions in 108 countries across the globe and enabled thousands of working professionals to enhance the scope of their careers.

Our enterprise training portfolio includes in-demand and globally recognized certification training courses in Project Management, Quality Management, Business Analysis, IT Service Management, Agile and Scrum, Cyber Security, Data Science, and Emerging Technologies. Download our Enterprise Training Catalog from https://www.icertglobal.com/corporate-training-for-enterprises.php and https://www.icertglobal.com/index.php

Popular Courses include:

- Project Management: PMP, CAPM, PMI RMP

- Quality Management: Six Sigma Black Belt, Lean Six Sigma Green Belt, Lean Management, Minitab, CMMI

- Business Analysis: CBAP, CCBA, ECBA

- Agile Training: PMI-ACP, CSM, CSPO

- Scrum Training: CSM

- DevOps

- Program Management: PgMP

- Cloud Technology: Exin Cloud Computing

- Citrix Client Administration: Citrix Cloud Administration


Conclusion

Become a Product Owner Today! To become a great Product Owner, you must understand the business and the industry, grasp customer needs, and be able to translate them into product requirements. A Product Owner plays a crucial role in delivering quality products that satisfy customer requirements and add value to the company. If you want to build your skills, iCert Global offers Certified ScrumMaster® (CSM) and Certified Scrum Product Owner® (CSPO) courses that can help you get certified and advance your career!

Contact Us For More Information:

Visit: www.icertglobal.com Email:


In Scrum, the Product Owner is responsible for making sure a project succeeds. They manage the "product backlog," a list of the tasks needed to improve or finish the product, so that the product delivers the greatest possible value to customers. Scrum is an Agile framework that helps teams communicate and collaborate better.

The Product Owner is a key member of the Scrum team. Their main job is to define what the product should be and to create the product backlog. They are the go-to person for the development team, explaining which features the product needs based on customer requests. The Product Owner makes sure the development team knows what is most important to work on and answers any questions the team has about what belongs in the product. In short, the Product Owner makes sure the product being developed delivers real value to its users.

What Does a Product Owner Do?

A Product Owner decides what a product should be and how it works. They base these decisions on the needs of customers and other key people, known as stakeholders, and use research data to determine which features are most essential.

Product Owner's Responsibilities