Quick Enquiry Form

Categories

- Agile and Scrum (187)

- BigData (21)

- Business Analysis (87)

- Cirtix Client Administration (51)

- Cisco (59)

- Cloud Technology (71)

- Cyber Security (41)

- Data Science and Business Intelligence (35)

- Developement Courses (46)

- DevOps (15)

- Digital Marketing (50)

- Emerging Technology (172)

- IT Service Management (70)

- Microsoft (52)

- Other (393)

- Project Management (471)

- Quality Management (127)

- salesforce (62)

Latest posts

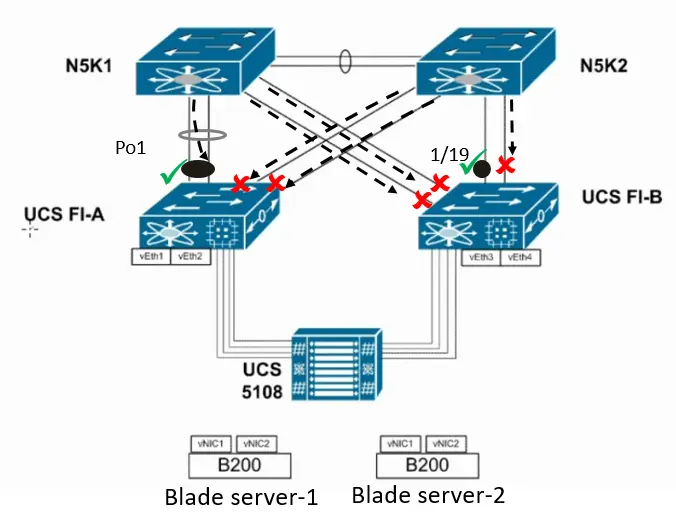

Mastering Cisco UCS Management The..

CCNP Ultimate Path to Networking..

Understanding CISS Key Concepts and..

Free Resources

Subscribe to Newsletter

The Benefits of Implementing RPA in Your Business

In today's fast-paced and highly competitive business landscape, organizations are constantly seeking innovative ways to enhance their operations, reduce costs, and drive growth. Robotic Process Automation (RPA) has emerged as a transformative solution, offering a plethora of benefits that can revolutionize the way businesses operate.

RPA is more than just a buzzword; it's a game-changer. It leverages the capabilities of software robots to automate repetitive, rule-based tasks that were once performed by humans. From data entry and invoicing to customer support and HR processes, RPA is redefining the modern business landscape in a profound way.

In this blog post, we'll explore the incredible advantages of implementing RPA in your business. We'll delve into the ways it can boost productivity, cut operational costs, and empower your employees to focus on more strategic, value-added tasks. We'll also discuss how RPA enables your organization to adapt to ever-changing workloads and market demands while ensuring compliance and enhancing customer satisfaction.

Join us on this journey to uncover the true potential of RPA and learn how it can be a key driver of success in your business. Let's embark on this automation adventure together.

Table of contents

- Increased Productivity: How RPA Streamlines Repetitive Tasks
- Cost Savings: The Financial Benefits of RPA Implementation
- Error Reduction: Enhancing Accuracy Through Automation
- 24/7 Operations: The Advantage of RPA in Workflow Continuity
- Scalability: Adapting Your Business to Changing Workloads with RPA
- Employee Satisfaction: Empowering Workers with RPA
- Data Insights: Leveraging RPA for Improved Decision-Making
- Customer Experience Improvement: RPA's Impact on Service Quality
- Compliance and Audit Readiness: Meeting Regulatory Requirements with RPA
- Competitive Advantage: Staying Ahead in Your Industry with RPA
- Conclusion

Increased Productivity: How RPA Streamlines Repetitive Tasks

In the relentless pursuit of operational excellence, businesses are increasingly turning to Robotic Process Automation (RPA) to revolutionize the way they handle repetitive and time-consuming tasks. Imagine a world where your workforce is liberated from mundane, repetitive chores, and your business can redirect its human talent towards creative and strategic endeavors. That's the promise of RPA, and in this article, we'll explore how it supercharges productivity by streamlining those monotonous tasks.

Automating the Mundane: RPA is the unsung hero of the business world. It excels at handling rule-based, repetitive tasks with precision and consistency. From data entry and report generation to invoice processing and email sorting, RPA tirelessly executes these activities, freeing up your human workforce for more meaningful, challenging, and engaging work.

24/7 Operations: Unlike human employees, RPA bots don't need breaks, sleep, or holidays. They work around the clock, ensuring that critical processes continue without interruption. This 24/7 availability not only accelerates task completion but also enhances customer service and response times.

Error Elimination: Human errors can be costly in terms of both time and money. For well-defined, rule-based tasks, RPA can sharply reduce the risk of errors. Bots follow predefined rules meticulously, resulting in accurate and consistent outcomes. This not only saves resources but also enhances the quality of your operations.

Scalability: As your business grows, so does the volume of repetitive tasks. RPA scales effortlessly, accommodating increased workloads without the need for lengthy recruitment and training processes. It's a flexible solution that grows with your business, allowing you to adapt to changing demands seamlessly.

Rapid Task Execution: With RPA, tasks that would take hours or days to complete manually can be executed in seconds or minutes. This expedited task execution enables your business to respond quickly to customer needs, market shifts, and other time-sensitive factors.

Enhanced Employee Satisfaction: Imagine your employees no longer bogged down by mind-numbing, repetitive work. RPA liberates your workforce from the most tedious aspects of their jobs, leading to increased job satisfaction and the ability to focus on tasks that require human creativity, decision-making, and critical thinking.

Cost Savings: The Financial Benefits of RPA Implementation

In an era where cost efficiency is paramount for businesses of all sizes, Robotic Process Automation (RPA) stands out as a formidable ally. It's not just about automation for the sake of it; it's about achieving substantial financial benefits through intelligent, rule-based processes. In this article, we'll explore how RPA can translate into real cost savings for your organization.

Reduced Labor Costs: The most immediate and noticeable cost savings with RPA come from a decreased reliance on human labor for repetitive tasks. RPA bots work tirelessly, without breaks or holidays, and do not require salaries, benefits, or overtime pay. This not only reduces labor costs but also eliminates the need for temporary staff during peak workloads.

Error Minimization: Human errors can be costly, leading to rework, customer dissatisfaction, and sometimes even regulatory fines. RPA dramatically reduces the risk of errors, ensuring that tasks are executed with a high degree of accuracy. This, in turn, mitigates the costs associated with correcting mistakes.

Improved Efficiency: RPA streamlines processes, making them more efficient and faster. Tasks that once took hours or days to complete manually can be accomplished in a fraction of the time with RPA. This increased efficiency allows your workforce to focus on higher-value tasks and revenue-generating activities.

Scalability at Low Incremental Cost: As your business grows, you may need to handle more transactions or process more data. Scaling up with human employees can be expensive, involving recruitment, training, and office space costs. RPA, on the other hand, scales with comparatively little added cost: adding bots mainly involves extra licenses and computing capacity rather than new hires.

Reduced Operational Costs: RPA can optimize various operational costs. It can help in inventory management, supply chain optimization, and other processes, reducing costs associated with excess inventory, carrying costs, and logistics.

Energy and Resource Savings: Automation doesn't just save labor costs; it can also lead to reduced energy consumption. RPA bots run on servers and data centers, which can be more energy-efficient than maintaining large office spaces with numerous employees.

The financial benefits of RPA implementation are substantial. It's not just about cutting costs; it's about doing so while improving operational efficiency, reducing errors, and allowing your employees to focus on strategic, value-adding tasks. RPA isn't an expense; it's an investment that pays off by delivering significant cost savings and contributing to the overall financial health of your organization. It's time to embrace RPA as a key driver of fiscal prudence and financial success.
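To make the "investment that pays off" idea concrete, here is a minimal Python sketch of a payback estimate. The class name, field names, and every number in it are illustrative assumptions, not figures from a real deployment; substitute your own measurements before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass
class AutomationCase:
    """Inputs for a simple savings estimate (all values are placeholders)."""
    hours_saved_per_month: float  # manual hours the bot takes over
    hourly_labor_cost: float      # fully loaded cost of one manual hour
    monthly_run_cost: float       # licenses, hosting, maintenance
    one_time_build_cost: float    # design, development, testing


def monthly_net_savings(case: AutomationCase) -> float:
    """Labor cost avoided each month minus the cost of running the bot."""
    return case.hours_saved_per_month * case.hourly_labor_cost - case.monthly_run_cost


def payback_months(case: AutomationCase) -> float:
    """Months until cumulative net savings cover the build cost."""
    net = monthly_net_savings(case)
    if net <= 0:
        return float("inf")  # the automation never pays for itself
    return case.one_time_build_cost / net


if __name__ == "__main__":
    # Hypothetical inputs, chosen only to show the arithmetic.
    example = AutomationCase(
        hours_saved_per_month=200,
        hourly_labor_cost=30.0,
        monthly_run_cost=1500.0,
        one_time_build_cost=18000.0,
    )
    print(monthly_net_savings(example))  # 4500.0
    print(payback_months(example))       # 4.0
```

Running costs are included deliberately: an estimate that counts only the labor saved will overstate the benefit.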

Error Reduction: Enhancing Accuracy Through Automation

In the world of business, accuracy is not a mere aspiration; it's a prerequisite for success. Mistakes can be costly, resulting in financial losses, customer dissatisfaction, and even reputational damage. Fortunately, automation, specifically Robotic Process Automation (RPA), has emerged as a powerful tool for enhancing accuracy by minimizing errors. In this article, we'll explore how RPA's precision transforms the operational landscape of businesses.

Perfect Consistency: RPA bots are meticulously programmed to follow predefined rules and instructions. They execute tasks with unwavering consistency, ensuring that the same standard is upheld for every transaction, every time. This perfect consistency is a stark contrast to human work, which can be influenced by factors like fatigue, distractions, or oversight.

Reduction in Human Error: Human errors, no matter how diligent the employees, are an inherent part of manual processes. RPA mitigates this risk by automating rule-based tasks. Whether it's data entry, order processing, or calculations, RPA eliminates the potential for common errors such as typos, miscalculations, and missed steps.

Elimination of Repetitive Mistakes: Over time, repetitive tasks can become mundane, leading to inattentiveness and a higher likelihood of errors. RPA doesn't suffer from such lapses in attention. It tirelessly performs tasks with precision, without being influenced by factors that can lead to mistakes.

Error Monitoring and Reporting: RPA systems are equipped with error monitoring and reporting capabilities. If an issue arises, the system can detect it quickly and often correct it automatically. In cases where human intervention is required, the error is flagged for attention, reducing the chances of unnoticed errors that can compound over time.
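The detect, auto-correct, then flag pattern described above can be sketched in a few lines of Python. This is a simplified illustration and not the API of any particular RPA product; `parse_amount` and `strip_currency` are hypothetical stand-ins for a real task and its correction rule.

```python
from typing import Callable


def run_with_monitoring(items, task: Callable, fix: Callable):
    """Run `task` on each item; on failure try `fix` once, else flag for review."""
    done, flagged = [], []
    for item in items:
        try:
            done.append(task(item))
        except ValueError as first_error:
            try:
                # Automatic correction attempt before involving a person.
                done.append(task(fix(item)))
            except ValueError:
                # Still failing: record the item and the reason for a human.
                flagged.append((item, str(first_error)))
    return done, flagged


def parse_amount(text: str) -> float:
    """Hypothetical task: convert an invoice amount string to a number."""
    return float(text)


def strip_currency(text: str) -> str:
    """Hypothetical fix: drop common formatting before retrying."""
    return text.replace("$", "").replace(",", "").strip()


if __name__ == "__main__":
    done, flagged = run_with_monitoring(
        ["12.50", "$1,200", "n/a"], parse_amount, strip_currency
    )
    print(done)                        # [12.5, 1200.0]
    print([item for item, _ in flagged])  # ['n/a']
```

Nothing is silently dropped: every input ends up either in the completed list or in the queue for human attention.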

24/7 Operations: The Advantage of RPA in Workflow Continuity

In a globalized and interconnected world, business operations are expected to run seamlessly around the clock. However, the human workforce has its limitations, including the need for rest and downtime. This is where Robotic Process Automation (RPA) steps in, offering a substantial advantage in workflow continuity through its ability to operate 24/7. In this article, we will delve into how RPA empowers businesses to break free from the constraints of traditional working hours.

Non-Stop Productivity: One of the most compelling advantages of RPA is its capacity for non-stop, 24/7 operations. RPA bots are not bound by the constraints of the human workday, allowing tasks to be executed continuously, even during evenings, weekends, and holidays. This round-the-clock productivity enhances the efficiency of critical business processes.

Reduced Response Times: With 24/7 RPA, customer inquiries, orders, and requests can be addressed instantly. This reduction in response times not only enhances customer satisfaction but can also give your business a competitive edge in industries where responsiveness is crucial.

No Overtime or Shift Work: Employing human workers for continuous operations typically involves overtime pay, shift differentials, and the associated costs of additional personnel management. RPA eliminates these costs while maintaining consistent, uninterrupted operations.

High Availability: RPA systems are designed for high availability. They can be configured to run on redundant servers or in the cloud, ensuring that operations continue even in the event of technical failures. This minimizes downtime and ensures uninterrupted workflow.

Enhanced Operational Efficiency: 24/7 RPA doesn't just mean working more hours; it means working more efficiently. Tasks are executed consistently and without the fluctuations in performance that can occur during late shifts or overnight hours.

The advantage of RPA in enabling 24/7 operations is a transformative element in the modern business landscape. It ensures that critical processes continue seamlessly, improves responsiveness, and reduces the costs associated with shift work and downtime. RPA's ability to work tirelessly and without interruption is a crucial factor in maintaining workflow continuity and meeting the demands of a 24/7 global economy.

Scalability: Adapting Your Business to Changing Workloads with RPA

In the dynamic and ever-evolving world of business, the ability to adapt to changing workloads is paramount for success. Robotic Process Automation (RPA) emerges as a pivotal solution, offering businesses the flexibility to scale their operations efficiently. In this article, we'll explore how RPA empowers organizations to seamlessly adjust to fluctuating demands, ensuring agility and sustained growth.

Handling Workload Peaks: Workload fluctuations are a common challenge for businesses. Seasonal spikes, promotions, or unforeseen events can cause a sudden surge in operational demands. RPA's scalability enables organizations to effortlessly address these peaks without the need for extensive human resource adjustments.

Speed and Accuracy: RPA bots can handle tasks with exceptional speed and accuracy. This not only ensures that tasks are completed on time during high-demand periods but also minimizes the risk of errors, contributing to a smoother scaling process.

Continuous Operation: RPA operates 24/7, providing continuous support for scaling efforts. Whether your business operates in multiple time zones or faces constant demand, RPA ensures that the scalability process can be ongoing and uninterrupted.

Improved Resource Allocation: The scalability offered by RPA allows human employees to focus on tasks that require creativity, decision-making, and critical thinking. This improved resource allocation not only enhances the quality of work but also promotes employee job satisfaction.

Rapid Deployment: Deploying additional RPA bots or reconfiguring existing ones can be achieved quickly. This agility is particularly valuable when responding to unexpected changes in workload, such as market fluctuations or emerging business opportunities.

Scalability Planning: RPA's analytics and data-driven insights can assist in proactive scalability planning. By analyzing historical data, businesses can anticipate workload fluctuations and adjust their RPA deployments accordingly.

The scalability that RPA offers is a strategic asset for businesses looking to adapt to changing workloads and seize growth opportunities. Whether you're aiming to respond to seasonal variations, sudden market shifts, or simply improve the efficiency of your daily operations, RPA provides a scalable solution that optimizes your resources and ensures that your business can remain agile and competitive in an ever-changing business landscape.
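As a rough illustration of the scalability planning described above, the following Python sketch sizes a bot pool from historical daily volumes. The function names, the 20 percent headroom, and the sample volumes are assumptions made for the example; real capacity planning would also account for scheduling, failures, and licensing limits.

```python
import math


def bots_needed(items_per_day: int, minutes_per_item: float,
                bot_hours_per_day: float = 24.0) -> int:
    """Smallest number of bots whose combined daily capacity covers the workload."""
    capacity_per_bot = bot_hours_per_day * 60 / minutes_per_item
    return math.ceil(items_per_day / capacity_per_bot)


def plan_from_history(daily_volumes, minutes_per_item: float,
                      headroom_pct: int = 20) -> int:
    """Size for the busiest day on record plus a safety margin."""
    peak = max(daily_volumes)
    target = math.ceil(peak * (100 + headroom_pct) / 100)
    return bots_needed(target, minutes_per_item)


if __name__ == "__main__":
    # Hypothetical history with one seasonal spike.
    history = [800, 950, 2400, 1100]
    print(bots_needed(800, minutes_per_item=2))        # 2
    print(plan_from_history(history, minutes_per_item=2))  # 4
```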

Employee Satisfaction: Empowering Workers with RPA

In the quest for business success, employee satisfaction is a critical factor that should never be underestimated. Satisfied and empowered employees are more productive, creative, and loyal to their organizations. Robotic Process Automation (RPA) plays a vital role in achieving these goals by relieving employees of mundane, repetitive tasks and giving them the opportunity to focus on higher-value, more fulfilling work. In this article, we'll explore how RPA empowers workers, leading to greater job satisfaction and overall success.

Skill Development: Employees empowered by RPA have the opportunity to develop new skills. As they transition to more complex, strategic roles, they can acquire valuable competencies that benefit both their personal growth and the organization.

Increased Job Satisfaction: By eliminating the least satisfying aspects of a job, RPA contributes to higher job satisfaction. Employees who find their work engaging and fulfilling are more likely to be committed to their roles and remain with the company for the long term.

Work-Life Balance: RPA's ability to handle tasks 24/7 ensures that employees are not burdened with late-night or weekend work to meet deadlines. This supports a healthier work-life balance, reducing stress and enhancing overall well-being.

Employee Empowerment: Employees often feel more empowered and valued when their employers invest in technologies like RPA to improve the work environment. This empowerment can boost morale and motivation.

Fostering Innovation: Employees freed from routine tasks can focus on more innovative and creative work. This not only benefits the company by driving innovation but also leads to a more fulfilling work experience for employees.

Consistency and Quality: RPA's ability to perform tasks with a high degree of accuracy ensures that employees can rely on consistent and error-free results in their work. This consistency reduces frustration and the need for rework.

Employee Feedback: RPA can be used to gather and process employee feedback more efficiently. By automating the data collection and analysis, it allows management to respond more effectively to concerns and suggestions, further boosting employee satisfaction.

RPA is a powerful tool for empowering employees and enhancing their job satisfaction. By automating repetitive tasks, it frees up time for more meaningful and challenging work, allows for skill development, and fosters a positive work environment. This not only benefits individual employees but also contributes to the overall success and competitiveness of the organization. It's a win-win scenario where both employees and the business thrive.

Data Insights: Leveraging RPA for Improved Decision-Making

In today's data-driven business landscape, making informed decisions is paramount for success. Fortunately, Robotic Process Automation (RPA) goes beyond task automation – it's a powerful tool for unlocking valuable data insights that can transform the way organizations make decisions. In this article, we'll explore how RPA can be harnessed to collect, process, and leverage data for more informed and strategic decision-making.

Data Collection and Aggregation: RPA can collect and aggregate data from various sources, including databases, spreadsheets, and online platforms. By automating the data collection process, it ensures data integrity and reduces the risk of human errors.

Real-Time Data Processing: RPA can process data in real-time, providing up-to-the-minute information that's crucial for making timely decisions. This real-time processing is especially valuable in fast-paced industries and competitive markets.

Data Cleansing and Quality Assurance: RPA can be programmed to cleanse and validate data, ensuring that it's accurate and reliable. This step is essential for high-quality decision-making, as inaccurate data can lead to poor judgments.

Predictive Analytics: RPA can be combined with predictive analytics models to forecast trends, identify potential issues, and recommend actions. This empowers decision-makers to proactively address challenges and seize opportunities.

Customized Reports and Dashboards: RPA can generate customized reports and dashboards that present data in a clear and actionable format. Decision-makers can quickly access the information they need, facilitating faster and more informed choices.

Exception Handling: RPA can identify exceptions or anomalies in data. When exceptions occur, RPA can alert decision-makers, enabling them to investigate and respond promptly to issues.

Compliance and Audit Trail: RPA ensures that tasks are executed according to predefined rules, creating a robust audit trail. This audit trail is invaluable for compliance with regulatory requirements and is a valuable resource for decision-making during audits.

RPA is not just about automating tasks; it's a key enabler of data-driven decision-making. By collecting, processing, and providing valuable data insights, RPA equips organizations to make informed, strategic decisions that can drive growth, efficiency, and competitive advantage. The ability to harness the power of data is a transformative asset in the modern business world, and RPA plays a central role in unlocking its potential.
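The cleansing, validation, and exception-handling steps in this section might look like the following Python sketch. The record fields, the validation rules, and the deliberately simple email pattern are all assumptions chosen for illustration.

```python
import re

# Intentionally loose pattern; real email validation is more involved.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def cleanse(record: dict) -> dict:
    """Trim whitespace and normalize casing so equal values compare equal."""
    return {
        "name": record.get("name", "").strip().title(),
        "email": record.get("email", "").strip().lower(),
        "amount": record.get("amount", "").strip(),
    }


def validate(record: dict) -> list:
    """Return a list of problems; an empty list means the record is usable."""
    problems = []
    if not record["name"]:
        problems.append("missing name")
    if not EMAIL_PATTERN.match(record["email"]):
        problems.append("invalid email")
    try:
        if float(record["amount"]) < 0:
            problems.append("negative amount")
    except ValueError:
        problems.append("amount is not a number")
    return problems


def split_records(raw_records):
    """Cleanse every record, then separate usable ones from exceptions."""
    clean, exceptions = [], []
    for raw in raw_records:
        record = cleanse(raw)
        problems = validate(record)
        if problems:
            exceptions.append((record, problems))
        else:
            clean.append(record)
    return clean, exceptions
```

Records in the exceptions list carry the reasons they failed, so a decision-maker can investigate them instead of having bad data flow into reports unnoticed.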

Customer Experience Improvement: RPA's Impact on Service Quality

In an era where customer satisfaction can make or break a business, delivering an exceptional customer experience is a top priority. Robotic Process Automation (RPA) has emerged as a powerful tool for improving service quality and ensuring a seamless, satisfying customer journey. In this article, we'll explore how RPA positively impacts the customer experience and contributes to the success of businesses.

Faster Response Times: RPA can respond to customer inquiries and requests in real-time. This immediate response ensures that customers don't have to wait, enhancing their perception of your service quality.

Error Reduction: RPA minimizes errors in tasks such as order processing and data entry. Fewer mistakes mean fewer issues for customers to deal with, resulting in a smoother, more reliable experience.

24/7 Availability: RPA operates around the clock, ensuring that customers can interact with your business at any time. This high availability caters to diverse schedules and time zones, providing a more customer-centric experience.

Enhanced Data Security: RPA's robust security measures protect customer data and sensitive information. By safeguarding their data, you build trust and confidence, further improving the customer experience.

Proactive Issue Resolution: RPA can monitor systems for issues and exceptions in real-time. When issues arise, RPA can alert human staff to take corrective action promptly, minimizing customer disruption.

RPA is a game-changer in improving the customer experience. By reducing response times, minimizing errors, ensuring availability, and enhancing data security, RPA contributes to a higher level of customer satisfaction. Businesses that leverage RPA for service quality enhancements not only retain loyal customers but also gain a competitive edge in an environment where customer experience is a defining factor in success. RPA isn't just about efficiency; it's about enhancing the human touch in customer service and ensuring that customers receive the best service possible.

Compliance and Audit Readiness: Meeting Regulatory Requirements with RPA

In today's highly regulated business environment, compliance with industry-specific and government-mandated regulations is a non-negotiable aspect of operation. The failure to meet regulatory requirements can lead to significant legal consequences, fines, and damage to a company's reputation. Robotic Process Automation (RPA) offers a powerful solution for ensuring compliance and audit readiness. In this article, we'll explore how RPA can help businesses meet regulatory requirements and prepare for audits effectively.

Rule-Based Consistency: RPA excels at executing tasks following predefined rules and standards. This inherent consistency ensures that business processes are executed in a compliant manner every time.

Automated Data Logging: RPA can automatically record and log all actions taken during its processes. This comprehensive data logging provides a transparent audit trail, ensuring that regulators and auditors can easily review and verify compliance.

Real-Time Monitoring: RPA systems can monitor processes in real-time, identifying and rectifying deviations from compliance standards as they occur. This proactive approach minimizes the risk of non-compliance issues going unnoticed.

Data Security and Privacy: RPA is designed to protect sensitive data. By automating data handling and storage, it reduces the risk of data breaches and ensures that personally identifiable information (PII) and other sensitive data are handled in a compliant manner.

Regulatory Reporting: RPA can automate the collection and preparation of reports required for regulatory compliance. This not only reduces the time and effort required for reporting but also minimizes the risk of errors in these critical documents.

Controlled Access: RPA allows for controlled access to sensitive systems and data. By limiting access to authorized personnel and automating role-based permissions, it enhances security and compliance with access control regulations.

Reducing Human Error: Many compliance issues arise from human error. RPA minimizes these errors, which can lead to non-compliance, fines, and other penalties.

RPA is a vital tool for meeting regulatory requirements and achieving audit readiness. It not only protects businesses from legal consequences and fines but also allows them to focus on their core operations, knowing that they are operating within the boundaries of the law. RPA doesn't just streamline processes; it safeguards businesses and enhances their ability to navigate complex regulatory landscapes with confidence.
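The automated logging described under "Automated Data Logging" can be approximated with a small Python decorator that records every action, its inputs, and its outcome. This is a sketch under stated assumptions: the in-memory list stands in for append-only storage, and `approve_invoice` with its approval limit is a hypothetical business rule.

```python
import json
from datetime import datetime, timezone
from functools import wraps

AUDIT_LOG = []  # in-memory stand-in for append-only audit storage


def audited(action_name):
    """Decorator that records each call, including failures, in AUDIT_LOG."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            entry = {
                "action": action_name,
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "inputs": {"args": list(args), "kwargs": kwargs},
            }
            try:
                result = func(*args, **kwargs)
                entry["status"] = "ok"
                return result
            except Exception as exc:
                entry["status"] = "failed"
                entry["error"] = str(exc)
                raise
            finally:
                # Runs on success and on failure, so no action goes unlogged.
                AUDIT_LOG.append(entry)
        return wrapper
    return decorator


@audited("approve_invoice")
def approve_invoice(invoice_id: str, amount: float, limit: float = 10_000.0) -> str:
    """Hypothetical rule: amounts above the limit are rejected."""
    if amount > limit:
        raise ValueError(f"{invoice_id} exceeds approval limit")
    return f"{invoice_id} approved"


def export_log() -> str:
    """Serialize the trail as JSON Lines, one entry per line."""
    return "\n".join(json.dumps(entry, default=str) for entry in AUDIT_LOG)
```

Because failed attempts are logged alongside successful ones, an auditor reviewing the exported trail sees what the bot tried to do as well as what it completed.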

Competitive Advantage: Staying Ahead in Your Industry with RPA

In the fast-paced and ever-evolving world of business, staying ahead of the competition is a constant challenge. To gain a competitive edge, organizations must be agile, efficient, and innovative. Robotic Process Automation (RPA) offers a significant advantage by providing the tools to streamline operations, reduce costs, and make data-driven decisions. In this article, we'll explore how RPA can be a catalyst for achieving a competitive advantage in your industry.

Operational Efficiency: RPA optimizes business processes by automating repetitive and rule-based tasks. This increased efficiency enables your organization to deliver products and services more quickly and cost-effectively, giving you an edge in the market.

Cost Reduction: By automating tasks and minimizing errors, RPA reduces operational costs. This allows your business to allocate resources strategically, invest in growth, and potentially offer competitive pricing to customers.

Customer Satisfaction: RPA enhances the customer experience by ensuring faster response times, personalized interactions, and error-free service. Satisfied customers are more likely to remain loyal and recommend your business to others.

Real-Time Data Insights: RPA collects and processes data in real-time, providing valuable insights into market trends, customer behavior, and operational performance. These insights can be leveraged to make informed decisions and stay ahead of market shifts.

Innovation and Creativity: By automating routine tasks, RPA liberates your workforce from the mundane, allowing them to focus on creative, strategic, and value-added activities. This fosters a culture of innovation and provides a competitive advantage in product and service development.

Strategic Decision-Making: With RPA, your organization can make data-driven decisions more efficiently. This strategic approach to decision-making allows you to anticipate market trends, spot opportunities, and outmaneuver competitors.

RPA is a game-changer for businesses seeking to gain a competitive advantage in their industries. By increasing operational efficiency, reducing costs, enhancing customer satisfaction, and providing real-time insights, RPA empowers organizations to stay ahead in rapidly changing markets. It's not just about streamlining processes; it's about positioning your business to lead, innovate, and thrive in a highly competitive world. RPA is the key to not just keeping pace but setting the pace in your industry.

Conclusion

In conclusion, Robotic Process Automation (RPA) is a transformative force in the modern business landscape, offering a multitude of benefits that can revolutionize the way organizations operate. Throughout this post, we've explored the diverse advantages of RPA and how it can be harnessed to enhance different aspects of business operations.

RPA's ability to operate 24/7 ensures workflow continuity, allowing businesses to adapt to fluctuating workloads, meet global demands, and maintain a competitive edge. It offers employees the opportunity to focus on more meaningful tasks, fostering job satisfaction, skill development, and innovation.

RPA leverages data insights to facilitate data-driven decision-making, enabling businesses to respond to market changes and opportunities with agility and precision. It improves the customer experience through faster response times, reduced errors, and personalized interactions, leading to higher customer satisfaction and loyalty.

In a world where efficiency, agility, and customer satisfaction are paramount, RPA is more than a tool for automation; it's a strategic asset that empowers businesses to thrive and excel. Embracing the automation revolution is not just a choice; it's a necessity for organizations that aim to remain competitive, innovative, and successful in the ever-evolving business landscape.


Improved Efficiency: RPA streamlines processes, making them more efficient and faster. Tasks that once took hours or days to complete manually can be accomplished in a fraction of the time with RPA. This increased efficiency allows your workforce to focus on higher-value tasks and revenue-generating activities.

Scalability Without Proportional Costs: As your business grows, you may need to handle more transactions or process more data. Scaling up with human employees can be expensive, involving recruitment, training, and office space costs. RPA, on the other hand, scales with far less overhead: adding bots avoids most of those expenses, although software licenses and infrastructure still carry a cost.

Reduced Operational Costs: RPA can optimize various operational costs. It can help in inventory management, supply chain optimization, and other processes, reducing costs associated with excess inventory, carrying costs, and logistics.

Energy and Resource Savings: Automation doesn't just save labor costs; it can also lead to reduced energy consumption. RPA bots run on servers and data centers, which can be more energy-efficient than maintaining large office spaces with numerous employees.

The financial benefits of RPA implementation are substantial. It's not just about cutting costs; it's about doing so while improving operational efficiency, reducing errors, and allowing your employees to focus on strategic, value-adding tasks. RPA isn't an expense; it's an investment that pays off by delivering significant cost savings and contributing to the overall financial health of your organization. It's time to embrace RPA as a key driver of fiscal prudence and financial success.

Error Reduction: Enhancing Accuracy Through Automation

In the world of business, accuracy is not a mere aspiration; it's a prerequisite for success. Mistakes can be costly, resulting in financial losses, customer dissatisfaction, and even reputational damage. Fortunately, automation, specifically Robotic Process Automation (RPA), has emerged as a powerful tool for enhancing accuracy by minimizing errors. In this article, we'll explore how RPA's precision transforms the operational landscape of businesses.

Perfect Consistency: RPA bots are meticulously programmed to follow predefined rules and instructions. They execute tasks with unwavering consistency, ensuring that the same standard is upheld for every transaction, every time. This perfect consistency is a stark contrast to human work, which can be influenced by factors like fatigue, distractions, or oversight.

Reduction in Human Error: Human errors, no matter how diligent the employees, are an inherent part of manual processes. RPA mitigates this risk by automating rule-based tasks. Whether it's data entry, order processing, or calculations, RPA eliminates the potential for common errors such as typos, miscalculations, and missed steps.

Elimination of Repetitive Mistakes: Over time, repetitive tasks can become mundane, leading to inattentiveness and a higher likelihood of errors. RPA doesn't suffer from such lapses in attention. It tirelessly performs tasks with precision, without being influenced by factors that can lead to mistakes.

Error Monitoring and Reporting: RPA systems are equipped with error monitoring and reporting capabilities. If an issue arises, the system can detect it quickly and often correct it automatically. In cases where human intervention is required, the error is flagged for attention, reducing the chances of unnoticed errors that can compound over time.
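To make the monitoring idea concrete, here is a minimal Python sketch of a bot that cleans up what it can and flags the rest for a person. The `BotRun` structure, the `parse_amount` rule, and the sample invoice amounts are all hypothetical and chosen only for illustration; real RPA platforms provide their own exception-handling features.

```python
from dataclasses import dataclass, field


@dataclass
class BotRun:
    """Outcome of one batch run (hypothetical structure for this sketch)."""
    completed: list = field(default_factory=list)
    flagged: list = field(default_factory=list)  # items that need human review


def parse_amount(raw: str) -> float:
    """Rule-based step: turn an amount such as '1,250.00' into a number."""
    # Stray spaces and thousands separators are corrected automatically.
    return float(raw.replace(",", "").strip())


def process_batch(raw_amounts: list[str]) -> BotRun:
    run = BotRun()
    for raw in raw_amounts:
        try:
            run.completed.append(parse_amount(raw))
        except ValueError as exc:
            # The bot cannot fix this value itself, so it records the item and
            # the reason for a person to review instead of silently skipping it.
            run.flagged.append((raw, str(exc)))
    return run


# Made-up sample data: three usable amounts and one that needs attention.
result = process_batch(["1,250.00", " 80 ", "N/A", "12.5"])
print("Processed:", result.completed)
print("Needs review:", [item for item, _reason in result.flagged])
```

The point of the design is that nothing disappears quietly: every input ends up either in the completed list or in the review queue.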

24/7 Operations: The Advantage of RPA in Workflow Continuity

In a globalized and interconnected world, business operations are expected to run seamlessly around the clock. However, the human workforce has its limitations, including the need for rest and downtime. This is where Robotic Process Automation (RPA) steps in, offering a substantial advantage in workflow continuity through its ability to operate 24/7. In this article, we will delve into how RPA empowers businesses to break free from the constraints of traditional working hours.

Non-Stop Productivity: One of the most compelling advantages of RPA is its capacity for non-stop, 24/7 operations. RPA bots are not bound by the constraints of the human workday, allowing tasks to be executed continuously, even during evenings, weekends, and holidays. This round-the-clock productivity enhances the efficiency of critical business processes.

Reduced Response Times: With 24/7 RPA, customer inquiries, orders, and requests can be addressed instantly. This reduction in response times not only enhances customer satisfaction but can also give your business a competitive edge in industries where responsiveness is crucial.

No Overtime or Shift Work: Employing human workers for continuous operations typically involves overtime pay, shift differentials, and the associated costs of additional personnel management. RPA eliminates these costs while maintaining consistent, uninterrupted operations.

High Availability: RPA systems are designed for high availability. They can be configured to run on redundant servers or in the cloud, ensuring that operations continue even in the event of technical failures. This minimizes downtime and ensures uninterrupted workflow.

Enhanced Operational Efficiency: 24/7 RPA doesn't just mean working more hours; it means working more efficiently. Tasks are executed consistently and without the fluctuations in performance that can occur during late shifts or overnight hours.

The advantage of RPA in enabling 24/7 operations is a transformative element in the modern business landscape. It ensures that critical processes continue seamlessly, improves responsiveness, and reduces the costs associated with shift work and downtime. RPA's ability to work tirelessly and without interruption is a crucial factor in maintaining workflow continuity and meeting the demands of a 24/7 global economy.

Scalability: Adapting Your Business to Changing Workloads with RPA

In the dynamic and ever-evolving world of business, the ability to adapt to changing workloads is paramount for success. Robotic Process Automation (RPA) emerges as a pivotal solution, offering businesses the flexibility to scale their operations efficiently. In this article, we'll explore how RPA empowers organizations to seamlessly adjust to fluctuating demands, ensuring agility and sustained growth.

Handling Workload Peaks: Workload fluctuations are a common challenge for businesses. Seasonal spikes, promotions, or unforeseen events can cause a sudden surge in operational demands. RPA's scalability enables organizations to effortlessly address these peaks without the need for extensive human resource adjustments.

Speed and Accuracy: RPA bots can handle tasks with exceptional speed and accuracy. This not only ensures that tasks are completed on time during high-demand periods but also minimizes the risk of errors, contributing to a smoother scaling process.

Continuous Operation: RPA operates 24/7, providing continuous support for scaling efforts. Whether your business operates in multiple time zones or faces constant demand, RPA ensures that the scalability process can be ongoing and uninterrupted.

Improved Resource Allocation: The scalability offered by RPA allows human employees to focus on tasks that require creativity, decision-making, and critical thinking. This improved resource allocation not only enhances the quality of work but also promotes employee job satisfaction.

Rapid Deployment: Deploying additional RPA bots or reconfiguring existing ones can be achieved quickly. This agility is particularly valuable when responding to unexpected changes in workload, such as market fluctuations or emerging business opportunities.

Scalability Planning: RPA's analytics and data-driven insights can assist in proactive scalability planning. By analyzing historical data, businesses can anticipate workload fluctuations and adjust their RPA deployments accordingly.

The scalability that RPA offers is a strategic asset for businesses looking to adapt to changing workloads and seize growth opportunities. Whether you're aiming to respond to seasonal variations, sudden market shifts, or simply improve the efficiency of your daily operations, RPA provides a scalable solution that optimizes your resources and ensures that your business can remain agile and competitive in an ever-changing business landscape.
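As a rough illustration of scalability planning, the Python sketch below estimates how many bots a given daily volume would need. The function name, the 80% utilization figure, the 1.5 minutes per item, and the monthly volumes are assumptions invented for this example, not figures from any RPA product.

```python
from math import ceil


def bots_needed(items_per_day, minutes_per_item, bot_hours_per_day=24.0, utilization=0.8):
    """Estimate how many bots cover a daily volume (all parameters are assumptions)."""
    # Usable working minutes per bot per day, allowing for maintenance and idle time.
    capacity_minutes = bot_hours_per_day * 60 * utilization
    return ceil(items_per_day * minutes_per_item / capacity_minutes)


# Hypothetical historical peak volumes (items per day) by month.
history = {"Oct": 4000, "Nov": 9000, "Dec": 7000}

plan = {month: bots_needed(volume, minutes_per_item=1.5) for month, volume in history.items()}
print(plan)
```

With these made-up numbers the estimate rises from 6 bots in October to 12 in November, which is the kind of seasonal adjustment that historical data can help anticipate.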

Employee Satisfaction: Empowering Workers with RPA

In the quest for business success, employee satisfaction is a critical factor that should never be underestimated. Satisfied and empowered employees are more productive, creative, and loyal to their organizations. Robotic Process Automation (RPA) plays a vital role in achieving these goals by relieving employees of mundane, repetitive tasks and giving them the opportunity to focus on higher-value, more fulfilling work. In this article, we'll explore how RPA empowers workers, leading to greater job satisfaction and overall success.

Skill Development: Employees empowered by RPA have the opportunity to develop new skills. As they transition to more complex, strategic roles, they can acquire valuable competencies that benefit both their personal growth and the organization.

Increased Job Satisfaction: By eliminating the least satisfying aspects of a job, RPA contributes to higher job satisfaction. Employees who find their work engaging and fulfilling are more likely to be committed to their roles and remain with the company for the long term.

Work-Life Balance: RPA's ability to handle tasks 24/7 ensures that employees are not burdened with late-night or weekend work to meet deadlines. This supports a healthier work-life balance, reducing stress and enhancing overall well-being.

Employee Empowerment: Employees often feel more empowered and valued when their employers invest in technologies like RPA to improve the work environment. This empowerment can boost morale and motivation.

Fostering Innovation: Employees freed from routine tasks can focus on more innovative and creative work. This not only benefits the company by driving innovation but also leads to a more fulfilling work experience for employees.

Consistency and Quality: RPA's ability to perform tasks with a high degree of accuracy ensures that employees can rely on consistent and error-free results in their work. This consistency reduces frustration and the need for rework.

Employee Feedback: RPA can be used to gather and process employee feedback more efficiently. By automating the data collection and analysis, it allows management to respond more effectively to concerns and suggestions, further boosting employee satisfaction.

RPA is a powerful tool for empowering employees and enhancing their job satisfaction. By automating repetitive tasks, it frees up time for more meaningful and challenging work, allows for skill development, and fosters a positive work environment. This not only benefits individual employees but also contributes to the overall success and competitiveness of the organization. It's a win-win scenario where both employees and the business thrive.

Data Insights: Leveraging RPA for Improved Decision-Making

In today's data-driven business landscape, making informed decisions is paramount for success. Fortunately, Robotic Process Automation (RPA) goes beyond task automation – it's a powerful tool for unlocking valuable data insights that can transform the way organizations make decisions. In this article, we'll explore how RPA can be harnessed to collect, process, and leverage data for more informed and strategic decision-making.

Data Collection and Aggregation: RPA can collect and aggregate data from various sources, including databases, spreadsheets, and online platforms. By automating the data collection process, it ensures data integrity and reduces the risk of human errors.

Real-Time Data Processing: RPA can process data in real time, providing up-to-the-minute information that's crucial for making timely decisions. This real-time processing is especially valuable in fast-paced industries and competitive markets.

Data Cleansing and Quality Assurance: RPA can be programmed to cleanse and validate data, ensuring that it's accurate and reliable. This step is essential for high-quality decision-making, as inaccurate data can lead to poor judgments.

Predictive Analytics: RPA can be combined with predictive analytics models to forecast trends, identify potential issues, and recommend actions. This empowers decision-makers to proactively address challenges and seize opportunities.

Customized Reports and Dashboards: RPA can generate customized reports and dashboards that present data in a clear and actionable format. Decision-makers can quickly access the information they need, facilitating faster and more informed choices.

Exception Handling: RPA can identify exceptions or anomalies in data. When exceptions occur, RPA can alert decision-makers, enabling them to investigate and respond promptly to issues.

Compliance and Audit Trail: RPA ensures that tasks are executed according to predefined rules, creating a robust audit trail. This audit trail is invaluable for compliance with regulatory requirements and is a valuable resource for decision-making during audits.

RPA is not just about automating tasks; it's a key enabler of data-driven decision-making. By collecting, processing, and providing valuable data insights, RPA equips organizations to make informed, strategic decisions that can drive growth, efficiency, and competitive advantage. The ability to harness the power of data is a transformative asset in the modern business world, and RPA plays a central role in unlocking its potential.
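The data cleansing and exception handling steps described above can be sketched in a few lines of Python. The field names, the duplicate rule, and the "five times the median" threshold are assumptions made for this illustration; a real deployment would apply its own business rules.

```python
from statistics import median


def cleanse(records: list[dict]) -> list[dict]:
    """Normalize names, drop unusable rows, and remove exact duplicates."""
    seen = set()
    clean = []
    for rec in records:
        name = str(rec.get("customer", "")).strip().title()
        try:
            amount = float(rec.get("amount"))
        except (TypeError, ValueError):
            continue  # missing or non-numeric amount: row cannot be used
        key = (name, amount)
        if not name or key in seen:
            continue  # assumption: same name and amount means a duplicate entry
        seen.add(key)
        clean.append({"customer": name, "amount": amount})
    return clean


def flag_anomalies(records: list[dict], factor: float = 5.0) -> list[dict]:
    """Return rows whose amount exceeds `factor` times the median amount."""
    typical = median(r["amount"] for r in records)
    return [r for r in records if r["amount"] > factor * typical]


# Made-up sample data pulled from two hypothetical sources.
raw = [
    {"customer": " alice smith ", "amount": "120.50"},
    {"customer": "Alice Smith", "amount": 120.5},
    {"customer": "bob jones", "amount": None},
    {"customer": "Carol Diaz", "amount": "95"},
    {"customer": "Dan Wu", "amount": "110"},
    {"customer": "Eve Park", "amount": "9800"},
]

clean = cleanse(raw)
anomalies = flag_anomalies(clean)
print(len(clean), "clean rows")
print("Flagged for review:", [r["customer"] for r in anomalies])
```

Flagged rows are not deleted; they are surfaced so a decision-maker can investigate, which matches the alerting role described under Exception Handling.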

Customer Experience Improvement: RPA's Impact on Service Quality

In an era where customer satisfaction can make or break a business, delivering an exceptional customer experience is a top priority. Robotic Process Automation (RPA) has emerged as a powerful tool for improving service quality and ensuring a seamless, satisfying customer journey. In this article, we'll explore how RPA positively impacts the customer experience and contributes to the success of businesses.

Faster Response Times: RPA can respond to customer inquiries and requests in real time. This immediate response ensures that customers don't have to wait, enhancing their perception of your service quality.

Error Reduction: RPA minimizes errors in tasks such as order processing and data entry. Fewer mistakes mean fewer issues for customers to deal with, resulting in a smoother, more reliable experience.

24/7 Availability: RPA operates around the clock, ensuring that customers can interact with your business at any time. This high availability caters to diverse schedules and time zones, providing a more customer-centric experience.

Enhanced Data Security: RPA's robust security measures protect customer data and sensitive information. By safeguarding their data, you build trust and confidence, further improving the customer experience.

Proactive Issue Resolution: RPA can monitor systems for issues and exceptions in real time. When issues arise, RPA can alert human staff to take corrective action promptly, minimizing customer disruption.

RPA is a game-changer in improving the customer experience. By reducing response times, minimizing errors, ensuring availability, and enhancing data security, RPA contributes to a higher level of customer satisfaction. Businesses that leverage RPA for service quality enhancements not only retain loyal customers but also gain a competitive edge in an environment where customer experience is a defining factor in success. RPA isn't just about efficiency; it's about enhancing the human touch in customer service and ensuring that customers receive the best service possible.

Compliance and Audit Readiness: Meeting Regulatory Requirements with RPA

In today's highly regulated business environment, compliance with industry-specific and government-mandated regulations is a non-negotiable aspect of operation. The failure to meet regulatory requirements can lead to significant legal consequences, fines, and damage to a company's reputation. Robotic Process Automation (RPA) offers a powerful solution for ensuring compliance and audit readiness. In this article, we'll explore how RPA can help businesses meet regulatory requirements and prepare for audits effectively.

Rule-Based Consistency: RPA excels at executing tasks following predefined rules and standards. This inherent consistency ensures that business processes are executed in a compliant manner every time.

Automated Data Logging: RPA can automatically record and log all actions taken during its processes. This comprehensive data logging provides a transparent audit trail, ensuring that regulators and auditors can easily review and verify compliance.

Real-Time Monitoring: RPA systems can monitor processes in real time, identifying and rectifying deviations from compliance standards as they occur. This proactive approach minimizes the risk of non-compliance issues going unnoticed.

Data Security and Privacy: RPA is designed to protect sensitive data. By automating data handling and storage, it reduces the risk of data breaches and ensures that personally identifiable information (PII) and other sensitive data are handled in a compliant manner.

Regulatory Reporting: RPA can automate the collection and preparation of reports required for regulatory compliance. This not only reduces the time and effort required for reporting but also minimizes the risk of errors in these critical documents.

Controlled Access: RPA allows for controlled access to sensitive systems and data. By limiting access to authorized personnel and automating role-based permissions, it enhances security and compliance with access control regulations.

Reducing Human Error: Many compliance issues arise from human error. RPA minimizes these errors, which can lead to non-compliance, fines, and other penalties.

RPA is a vital tool for meeting regulatory requirements and achieving audit readiness. It not only protects businesses from legal consequences and fines but also allows them to focus on their core operations, knowing that they are operating within the boundaries of the law. RPA doesn't just streamline processes; it safeguards businesses and enhances their ability to navigate complex regulatory landscapes with confidence.
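For readers curious what an automated audit trail might look like, here is a small Python sketch. It uses hash chaining, one common way to make a log tamper-evident; this is an illustrative choice rather than a description of how any particular RPA product records its actions, and the `AuditTrail` class, bot ID, and invoice numbers are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log in which each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, bot_id: str, action: str, detail: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "bot_id": bot_id,
            "action": action,
            "detail": detail,
            "prev_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Check that no entry was altered and that the chain is unbroken."""
        prev = ""
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.record("bot-01", "read_invoice", "INV-1001")
trail.record("bot-01", "post_payment", "INV-1001")
intact = trail.verify()

# Simulate someone editing an old entry after the fact.
trail.entries[0]["detail"] = "INV-9999"
tampered = trail.verify()

print("Log intact before edit:", intact)
print("Log intact after edit:", tampered)
```

Because each entry depends on the one before it, an auditor can confirm with a single check that the recorded history has not been changed.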

Competitive Advantage: Staying Ahead in Your Industry with RPA

In the fast-paced and ever-evolving world of business, staying ahead of the competition is a constant challenge. To gain a competitive edge, organizations must be agile, efficient, and innovative. Robotic Process Automation (RPA) offers a significant advantage by providing the tools to streamline operations, reduce costs, and make data-driven decisions. In this article, we'll explore how RPA can be a catalyst for achieving a competitive advantage in your industry.

Operational Efficiency: RPA optimizes business processes by automating repetitive and rule-based tasks. This increased efficiency enables your organization to deliver products and services more quickly and cost-effectively, giving you an edge in the market.

Cost Reduction: By automating tasks and minimizing errors, RPA reduces operational costs. This allows your business to allocate resources strategically, invest in growth, and potentially offer competitive pricing to customers.

Customer Satisfaction: RPA enhances the customer experience by ensuring faster response times, personalized interactions, and error-free service. Satisfied customers are more likely to remain loyal and recommend your business to others.

Real-Time Data Insights: RPA collects and processes data in real time, providing valuable insights into market trends, customer behavior, and operational performance. These insights can be leveraged to make informed decisions and stay ahead of market shifts.

Innovation and Creativity: By automating routine tasks, RPA liberates your workforce from the mundane, allowing them to focus on creative, strategic, and value-added activities. This fosters a culture of innovation and provides a competitive advantage in product and service development.

Strategic Decision-Making: With RPA, your organization can make data-driven decisions more efficiently. This strategic approach to decision-making allows you to anticipate market trends, spot opportunities, and outmaneuver competitors.

RPA is a game-changer for businesses seeking to gain a competitive advantage in their industries. By increasing operational efficiency, reducing costs, enhancing customer satisfaction, and providing real-time insights, RPA empowers organizations to stay ahead in rapidly changing markets. It's not just about streamlining processes; it's about positioning your business to lead, innovate, and thrive in a highly competitive world. RPA is the key to not just keeping pace but setting the pace in your industry.

Conclusion

In conclusion, Robotic Process Automation (RPA) is a transformative force in the modern business landscape, offering a multitude of benefits that can revolutionize the way organizations operate. Throughout this series of articles, we've explored the diverse advantages of RPA and how it can be harnessed to enhance different aspects of business operations.

RPA's ability to operate 24/7 ensures workflow continuity, allowing businesses to adapt to fluctuating workloads, meet global demands, and maintain a competitive edge. It offers employees the opportunity to focus on more meaningful tasks, fostering job satisfaction, skill development, and innovation.

RPA leverages data insights to facilitate data-driven decision-making, enabling businesses to respond to market changes and opportunities with agility and precision. It improves the customer experience through faster response times, reduced errors, and personalized interactions, leading to higher customer satisfaction and loyalty.

In a world where efficiency, agility, and customer satisfaction are paramount, RPA is more than a tool for automation; it's a strategic asset that empowers businesses to thrive and excel. Embracing the automation revolution is not just a choice; it's a necessity for organizations that aim to remain competitive, innovative, and successful in the ever-evolving business landscape.

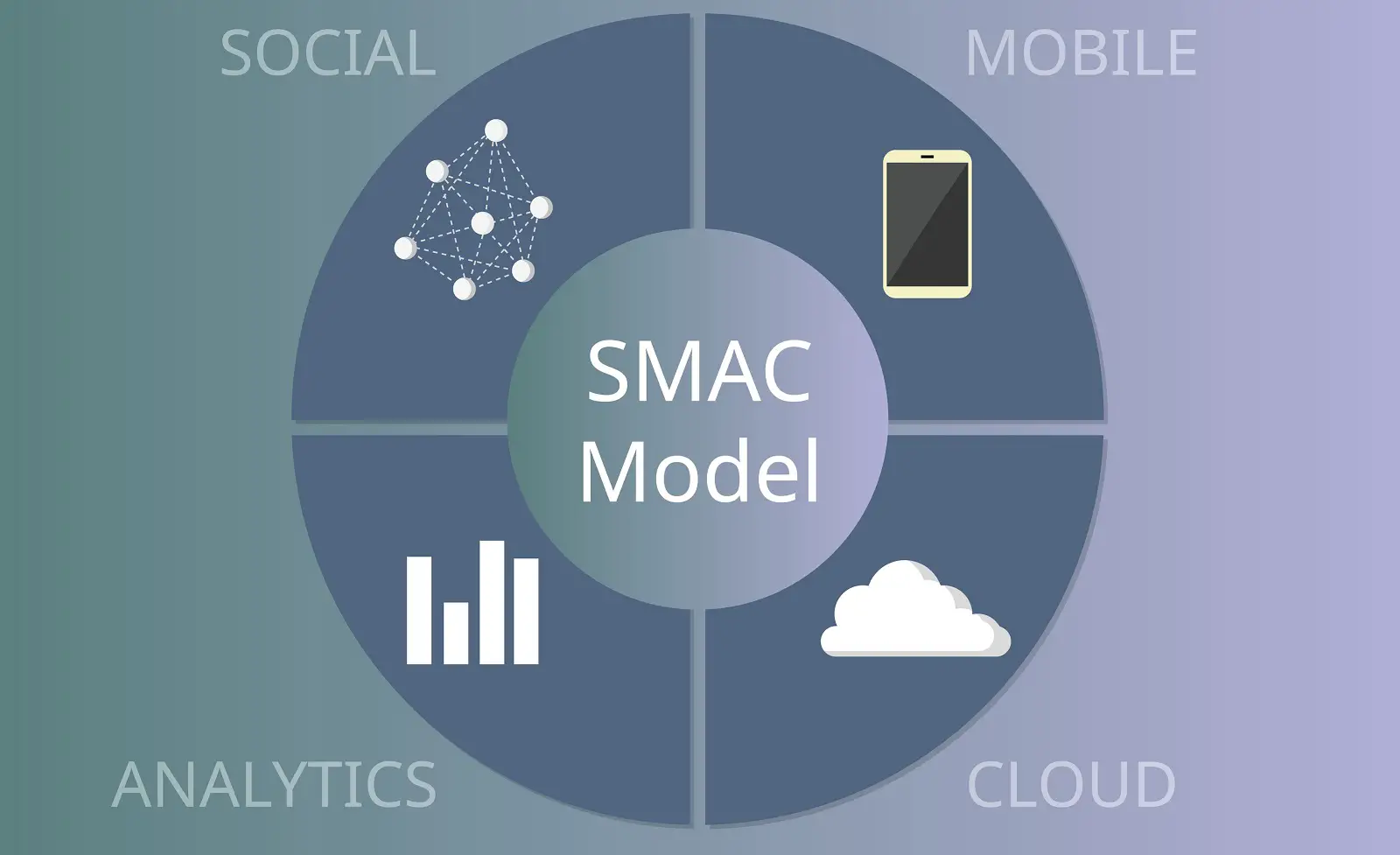

Understanding the Power of SMAC: Social, Mobile, Analytics, and Cloud

In the rapidly evolving landscape of modern technology, the convergence of Social, Mobile, Analytics, and Cloud (SMAC) has emerged as a powerful force that is reshaping industries, revolutionizing customer experiences, and driving innovation at an unprecedented pace. This dynamic quartet of technological trends, when combined effectively, can offer organizations a competitive edge, improved efficiency, and fresh opportunities for growth. Understanding the power of SMAC is not just a technological endeavor; it's a strategic imperative for businesses in the 21st century.

In this exploration of SMAC, we will delve deeper into each of these components, uncovering their individual significance and examining the powerful synergies that emerge when they are combined. We will also explore the impact of SMAC across various industries and sectors, from healthcare and finance to manufacturing and marketing, showcasing how this transformative technology is redefining the way businesses operate and the way we experience the world.

Join us on this journey as we unravel the intricate web of SMAC, and discover how this fusion of technology is not just a trend but a transformative force with the potential to shape the future of business and society.

Table of contents

- The Core Components of SMAC
- SMAC's Impact on Customer Engagement
- Data Analytics in SMAC
- Mobile-First Strategies in SMAC
- The Social Media Factor
- Cloud Computing's Role in SMAC
- SMAC in Healthcare
- The Security Challenges of SMAC
- SMAC in Financial Services
- Real-World SMAC Success Stories
- Conclusion

The Core Components of SMAC

The core components of SMAC (Social, Mobile, Analytics, and Cloud) are the fundamental building blocks of this powerful technology framework. Understanding each component, and how the four interact, is essential for grasping the full potential of SMAC. Let's take a closer look at each component:

Social (S): The "Social" component refers to the vast and interconnected world of social media. Social platforms such as Facebook, Twitter, Instagram, LinkedIn, and others have become integral parts of our personal and professional lives. They serve as channels for communication, collaboration, and information sharing. But beyond their social aspects, they are also a treasure trove of valuable data. Businesses can leverage social media to gain insights into customer preferences, sentiments, and behaviors. This data can inform marketing strategies, product development, and customer engagement.

Mobile (M): The "Mobile" component represents the proliferation of mobile devices, primarily smartphones and tablets. Mobile technology has transformed how people interact with digital content and services. With mobile devices, individuals have constant access to information, and businesses have the ability to engage with customers wherever they are. Mobile applications, or apps, have become central to delivering services, conducting transactions, and gathering real-time data. Mobile-friendly websites and apps are now essential for businesses to reach and connect with their audiences.

Analytics (A): "Analytics" is the data-driven heart of SMAC. It involves the collection, processing, and interpretation of data to gain insights and make informed decisions. Advanced analytics tools and techniques, including data mining, machine learning, and predictive analytics, help businesses identify trends, patterns, and correlations in their data. By harnessing analytics, organizations can make smarter decisions, optimize operations, personalize customer experiences, and even predict future outcomes. Big data analytics, in particular, enables the handling of vast amounts of data to extract meaningful information.

Cloud (C): The "Cloud" component represents cloud computing technology. Cloud computing offers a scalable and flexible infrastructure for storing and processing data and applications. It allows businesses to access resources remotely, reducing the need for on-site hardware and infrastructure maintenance. Cloud services provide a cost-effective solution for storing and managing data, running applications, and supporting various SMAC technologies. This scalability and accessibility are crucial for handling the vast amounts of data generated by social media, mobile devices, and analytics.

These core components of SMAC are interdependent, and their synergy enhances an organization's ability to engage with customers, extract valuable insights from data, and operate efficiently and effectively in the digital age. Understanding how these components work together is essential for organizations looking to harness the full power of SMAC for their benefit.

SMAC's Impact on Customer Engagement

SMAC (Social, Mobile, Analytics, and Cloud) technologies have had a profound impact on customer engagement, revolutionizing the way businesses interact with and serve their customers. The convergence of these four components has created new opportunities for businesses to better understand, connect with, and delight their customers. Here's an exploration of SMAC's impact on customer engagement:

Real-Time Communication: Mobile and social media enable real-time communication with customers. Businesses can engage with customers instantly, addressing questions or concerns promptly. This level of responsiveness fosters trust and a sense of being heard, which is crucial for positive customer experiences.

Omni-Channel Customer Service: Cloud technology plays a significant role in creating an omni-channel customer service experience. It allows businesses to integrate customer data across various touchpoints and provide a seamless experience. For example, a customer can start a conversation with a business on social media and then continue it via a mobile app, with the context of the conversation maintained.

Feedback and Surveys: Social media and mobile apps provide opportunities for businesses to collect customer feedback and conduct surveys. This real-time feedback loop allows companies to make quick improvements and adjustments to products or services.

Customer Communities: Social media can be used to create customer communities where users can discuss products, share tips, and support one another. These communities foster a sense of belonging and loyalty among customers.

SMAC technologies have transformed customer engagement by providing businesses with the tools to collect and analyze data, personalize experiences, and engage with customers across multiple channels in real time. This shift towards a more customer-centric approach is a critical element of successful modern business strategies, enabling companies to build stronger relationships with their customers and stay competitive in an increasingly digital marketplace.

Data Analytics in SMAC

Data analytics plays a central role in the SMAC (Social, Mobile, Analytics, and Cloud) framework, and it's a key component for harnessing the power of these technologies. Here's an exploration of the role and importance of data analytics in the SMAC ecosystem:

Data Collection: Data analytics in SMAC begins with the collection of vast amounts of data. Social media, mobile applications, and websites generate a wealth of information. Analytics tools collect and aggregate this data from various sources.

Data Storage: Cloud computing is essential for storing the large volumes of data generated by SMAC components. The cloud offers scalable, cost-effective storage solutions, ensuring that data is readily accessible and secure.

Data Processing: Analytics tools process the data to make it meaningful and actionable. This includes cleaning and transforming raw data into structured information. Cloud platforms facilitate this processing by providing the computing power required for complex data operations.

Real-Time Analytics: Real-time analytics, made possible by mobile and cloud technologies, allows businesses to analyze data as it's generated. This is particularly crucial for immediate decision-making and personalized customer experiences.

A/B Testing: Mobile apps and websites enable A/B testing, where businesses can experiment with different versions of products, services, or marketing content to see which performs better. Data analytics measures the effectiveness of these tests.
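
As a rough illustration of how analytics can measure an A/B test, the sketch below compares the conversion rates of two variants with a two-proportion z-test. The function name, the sample figures, and the choice of this particular test are assumptions made for the example; they are not a method prescribed by the SMAC framework.

```python
import math


def ab_test_z(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test comparing conversion rates of variants A and B.

    conv_* are conversion counts, n_* are visitor counts (hypothetical inputs).
    Returns the z statistic and the two-sided p-value.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the hypothesis that A and B perform equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Illustrative numbers only: 200 of 5000 visitors converted on A, 250 of 5000 on B.
    z, p = ab_test_z(200, 5000, 250, 5000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference between the two versions is unlikely to be due to chance alone; the threshold to act on (0.05 is a common convention) is a business decision.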

In summary, data analytics is at the heart of SMAC, providing businesses with the ability to collect, process, analyze, and make data-driven decisions. This data-driven approach is pivotal for personalizing customer experiences, optimizing operations, and staying competitive in the digital age. The integration of data analytics within SMAC technologies empowers organizations to unlock valuable insights and leverage them to enhance their products, services, and customer engagement strategies.

Mobile-First Strategies in SMAC

Mobile-First strategies in the context of SMAC (Social, Mobile, Analytics, and Cloud) are approaches that prioritize the mobile experience as the central focus of digital initiatives. With the increasing use of mobile devices, including smartphones and tablets, businesses are recognizing the need to adapt and optimize their strategies to cater to the mobile-savvy audience. Here's an exploration of the concept of Mobile-First strategies within the SMAC framework:

Mobile-Centric Design: Mobile-First strategies begin with designing digital platforms, such as websites and applications, with mobile users in mind. This means starting the design process with mobile devices as the primary target, ensuring that the user experience is seamless and efficient on smaller screens.

Mobile App Development: Creating mobile apps tailored to the needs and behaviors of mobile users is a significant aspect of Mobile-First strategies. Well-built apps can offer a more streamlined and engaging experience than mobile-responsive websites.

User Experience Optimization: Mobile-First strategies prioritize optimizing the user experience on mobile devices. This includes fast loading times, intuitive navigation, and user-friendly interfaces that cater to touch and swipe interactions.

Mobile SEO: Search engine optimization (SEO) techniques are adapted to cater to mobile search trends, as more people use mobile devices to access the internet. Mobile-First strategies involve optimizing websites and apps for mobile search.

Mobile Marketing: Mobile-First strategies extend to marketing efforts. Businesses create mobile-friendly marketing campaigns, such as SMS marketing, mobile advertising, and social media campaigns designed for mobile users.

Mobile Analytics: Mobile-First strategies rely on analytics to understand mobile user behavior. By analyzing data from mobile users, businesses can make informed decisions about how to improve their mobile offerings.

Location-Based Services: Mobile-First strategies take advantage of location-based services to offer users localized and context-aware content. For example, businesses can send mobile app users offers or recommendations based on their current location.

Mobile Security: The security of mobile apps and websites is a critical consideration in Mobile-First strategies. Protecting user data and ensuring secure mobile transactions are top priorities.
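
To make the location-based offers mentioned above more concrete, here is a minimal sketch that uses the haversine formula to find stores within a given radius of a user's position. The store records, field names, and the 1 km default radius are hypothetical and chosen only for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def stores_in_range(user_lat, user_lon, stores, radius_km=1.0):
    """Return the names of stores within radius_km of the user's location."""
    return [
        s["name"]
        for s in stores
        if haversine_km(user_lat, user_lon, s["lat"], s["lon"]) <= radius_km
    ]


if __name__ == "__main__":
    # Hypothetical store list for the example.
    stores = [
        {"name": "Downtown", "lat": 0.0, "lon": 0.005},
        {"name": "Airport", "lat": 0.0, "lon": 0.05},
    ]
    print(stores_in_range(0.0, 0.0, stores))
```

In practice an app would also need the user's consent to use location data, which ties this feature to the mobile security point above.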

Mobile-First strategies are a response to the increasing dominance of mobile devices in the digital landscape. They require businesses to prioritize mobile users in design, content, marketing, and technology decisions. These strategies complement and enhance the broader SMAC framework by recognizing the pivotal role of mobile technology in customer engagement and digital transformation.

The Social Media Factor

The "Social Media Factor" within the SMAC (Social, Mobile, Analytics, and Cloud) framework plays a pivotal role in how businesses engage with customers and gather valuable insights. Social media platforms have transformed the way companies interact with their audiences, build brand presence, and learn about customer behavior. Here's a closer look at the social media factor and its significance within the SMAC framework:

Customer Engagement: Social media platforms are powerful tools for engaging with customers in real time. Businesses can respond to customer inquiries, address concerns, and provide support promptly. This direct engagement fosters trust and loyalty.

User-Generated Content: Social media encourages users to generate content, such as reviews, photos, and testimonials. User-generated content serves as social proof, influencing the purchasing decisions of other consumers.

Influencer Marketing: Social media allows businesses to partner with influencers who have a substantial following. Influencers can promote products and services to their engaged audience, providing a more authentic and trusted recommendation.

Community Building: Brands can create online communities on social media where customers can connect, discuss, and share their experiences. These communities foster a sense of belonging and loyalty.

Crisis Management: Social media is often the first place where crises or issues are brought to light. Businesses can use these platforms to manage and address public relations challenges promptly and transparently.

Global Reach: Social media transcends geographical boundaries, enabling businesses to engage with a global audience. This is especially beneficial for businesses with international markets.

The "Social Media Factor" is a pivotal element within the SMAC framework, transforming how businesses interact with customers, build brand presence, and gather insights. It amplifies the reach and impact of businesses by harnessing the connectivity and engagement opportunities offered by social media platforms. Understanding and leveraging this factor is critical for businesses seeking to thrive in the digital age and harness the power of SMAC for customer engagement and brand success.

Cloud Computing's Role in SMAC

Cloud computing plays a critical role in the SMAC (Social, Mobile, Analytics, and Cloud) framework, as it provides the underlying infrastructure and technology foundation that enables the other components to function effectively. Here's an exploration of the role and importance of cloud computing in the SMAC ecosystem:

Scalability: Cloud computing offers on-demand scalability, allowing businesses to scale their resources up or down as needed. This is particularly important in the context of SMAC, as data volumes and user demands can fluctuate rapidly.

Flexibility: The cloud provides a flexible environment for deploying and managing SMAC applications and services. This flexibility is crucial for adapting to changing business needs and technological advancements.

Cost Efficiency: Cloud computing reduces the need for extensive upfront infrastructure investments. It allows businesses to pay only for the resources they use, lowering capital expenditures and, in many cases, operational costs.

Accessibility: The cloud enables remote access to data, applications, and services from virtually anywhere with an internet connection. This accessibility is vital for mobile users and remote teams, supporting the mobile and social components of SMAC.

Data Storage: Cloud storage services provide a secure and cost-effective way to store vast amounts of data generated by the social and analytics components of SMAC. This data can be easily accessed and processed as needed.

Data Analytics and Processing: Cloud platforms offer powerful computing capabilities that are essential for processing and analyzing large datasets. This is critical for extracting insights from the analytics component of SMAC.

Collaboration: The cloud facilitates collaboration among teams, enabling them to work on SMAC projects and data analysis from various locations. Collaboration tools and shared resources in the cloud promote efficient teamwork.

API Integration: Cloud platforms often support APIs (Application Programming Interfaces) that enable seamless integration with various third-party applications and services. This is valuable for connecting different SMAC components and tools.
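
The on-demand scalability described above can be sketched as a simple proportional scaling rule: the number of instances is adjusted so that the load per instance moves back toward a target. This is only an illustration of the idea, not any specific cloud provider's API; the function name, parameters, and limits are assumptions.

```python
import math


def desired_instances(current, observed_load, target_load, min_n=1, max_n=20):
    """Proportional scaling rule.

    current       -- number of instances running now
    observed_load -- average load per instance (for example, CPU percent)
    target_load   -- load per instance we would like to maintain
    min_n, max_n  -- hypothetical lower and upper bounds on the fleet size
    """
    raw = math.ceil(current * observed_load / target_load)
    # Clamp to the allowed range so the fleet never scales to zero or grows without limit.
    return max(min_n, min(max_n, raw))


if __name__ == "__main__":
    # Four instances at 90% load with a 60% target: scale out.
    print(desired_instances(4, 90, 60))
    # Four instances at 30% load with a 60% target: scale in.
    print(desired_instances(4, 30, 60))
```

Because resources follow demand in this way, the same rule also underpins the pay-for-what-you-use cost model noted in the list above.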

SMAC in Healthcare

SMAC (Social, Mobile, Analytics, and Cloud) technologies have had a profound impact on the healthcare industry, revolutionizing the way healthcare is delivered, managed, and experienced. These technologies are driving improvements in patient care, data analysis, accessibility, and overall efficiency. Here's an overview of how SMAC is applied in healthcare:

Mobile Health (mHealth): Mobile apps and devices have transformed healthcare delivery. Patients can use mobile apps to schedule appointments, access medical records, receive medication reminders, and monitor their health conditions. Wearable devices track vital signs and send data to healthcare providers in real time.

Telemedicine: Mobile and cloud technologies enable telemedicine, which allows patients to have virtual consultations with healthcare professionals. This has improved access to medical care, especially in remote or underserved areas.

Electronic Health Records (EHRs): Cloud computing is central to the storage and management of electronic health records. EHRs provide a secure, centralized, and easily accessible repository of patient data for healthcare providers. Analytics tools can mine this data for insights.

Health Data Analytics: Analytics tools help healthcare providers process and analyze vast amounts of health data. They can identify trends, track disease outbreaks, predict patient outcomes, and improve treatment decisions.

Medical Imaging and Analytics: Cloud technology enables the storage and sharing of medical images such as X-rays, MRIs, and CT scans. Analytics tools help in image analysis for faster and more accurate diagnoses.

Drug and Treatment Research: Analytics and cloud computing assist in drug discovery and clinical trials. Researchers can analyze patient data, share information securely, and accelerate the development of new drugs and treatments.

SMAC technologies have ushered in a new era of healthcare, promoting patient-centered care, improving data accessibility, enhancing diagnostic capabilities, and streamlining healthcare operations. They have the potential to improve patient outcomes, reduce costs, and make healthcare more accessible to individuals around the world. As technology continues to advance, the healthcare industry must adapt and innovate to harness the full potential of SMAC.

The Security Challenges of SMAC

The security challenges associated with SMAC (Social, Mobile, Analytics, and Cloud) technologies are a critical concern for businesses and organizations. The integration of these technologies introduces new vulnerabilities and risks that need to be addressed to protect sensitive data and ensure the integrity and privacy of digital interactions. Here are some of the key security challenges associated with SMAC:

Mobile Device Security:

Challenge: Mobile devices are easily lost or stolen, making them a significant security risk. The use of personal mobile devices for work (BYOD) can blur the lines between personal and professional data.

Solution: Implement mobile device management (MDM) solutions to enforce security policies, enable remote wipe of lost or stolen devices, and use containerization to separate work and personal data on devices.

Data Integration and Governance:

Challenge: Integrating data from various sources for analytics can lead to data quality and governance issues. Inaccurate or incomplete data can impact the accuracy of insights and decision-making.

Solution: Establish data governance policies, data quality checks, and data cleansing processes to ensure the integrity of data used for analytics.

Mobile App Security:

Challenge: Mobile apps may contain vulnerabilities that can be exploited by attackers. These vulnerabilities could be related to insecure coding practices, weak authentication, or unpatched software libraries.

Solution: Regularly update and patch mobile apps, conduct security assessments, and use code analysis tools to identify and remediate vulnerabilities.
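
As one example of the data quality checks mentioned under Data Integration and Governance, the sketch below validates incoming records before they reach an analytics pipeline. The field names (`customer_id`, `email`, `amount`) and the rules are hypothetical; real governance policies would define their own.

```python
def check_record(record, required=("customer_id", "email", "amount")):
    """Return a list of data quality problems found in a single record."""
    problems = []
    for field in required:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    email = record.get("email")
    if email and "@" not in email:
        problems.append("malformed email")
    amount = record.get("amount")
    if amount not in (None, "") and not isinstance(amount, (int, float)):
        problems.append("amount is not numeric")
    return problems


def split_clean(records):
    """Separate records that pass all checks from those that need cleansing."""
    clean, rejected = [], []
    for record in records:
        issues = check_record(record)
        if issues:
            rejected.append((record, issues))
        else:
            clean.append(record)
    return clean, rejected


if __name__ == "__main__":
    sample = [
        {"customer_id": 1, "email": "a@example.com", "amount": 10.0},
        {"customer_id": 2, "email": "not-an-email", "amount": "ten"},
    ]
    clean, rejected = split_clean(sample)
    print(len(clean), "clean;", len(rejected), "rejected")
```

Rejected records can then be routed to a cleansing step or reviewed manually rather than silently distorting the analytics.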

In summary, while SMAC technologies offer numerous benefits, they also introduce security challenges that require vigilant management and proactive measures. Organizations must adopt a holistic approach to cybersecurity, including technology, policies, employee training, and ongoing monitoring, to protect their data and digital assets effectively in the SMAC era.

SMAC in Financial Services

SMAC (Social, Mobile, Analytics, and Cloud) technologies have had a significant impact on the financial services industry, transforming the way financial institutions operate and how customers interact with their banks and investment firms. Here's an overview of how SMAC is applied in financial services:

Enhanced Customer Experience: Financial institutions use SMAC technologies to provide a more personalized and convenient experience for their customers. Mobile apps allow users to access accounts, transfer funds, and make payments on the go. Social media and chatbots provide quick customer support, while analytics help understand customer preferences and behavior for tailored offerings.

Mobile Banking and Payments: Mobile banking applications have become a staple in the financial services sector. Customers can check balances, make payments, and even deposit checks using their smartphones. Mobile wallets and contactless payments are on the rise, making transactions more convenient.